Apache Kafka Reference Guide

This reference guide demonstrates how your Quarkus application can utilize Quarkus Messaging to interact with Apache Kafka.

1. Introduction

Apache Kafka is a popular open-source distributed event streaming platform. It is used commonly for high-performance data pipelines, streaming analytics, data integration, and mission-critical applications. Similar to a message queue, or an enterprise messaging platform, it lets you:

- publish (write) and subscribe to (read) streams of events, called records.

- store streams of records durably and reliably inside topics.

- process streams of records as they occur or retrospectively.

And all this functionality is provided in a distributed, highly scalable, elastic, fault-tolerant, and secure manner.

2. Quarkus Extension for Apache Kafka

Quarkus provides support for Apache Kafka through the SmallRye Reactive Messaging framework. Based on the Eclipse MicroProfile Reactive Messaging specification 2.0, it proposes a flexible programming model bridging CDI and event-driven programming.

This guide provides an in-depth look at Apache Kafka and the SmallRye Reactive Messaging framework. For a quick start, take a look at Getting Started to Quarkus Messaging with Apache Kafka.

You can add the messaging-kafka extension to your project by running the following command in your project base directory:

quarkus extension add messaging-kafka

./mvnw quarkus:add-extension -Dextensions='messaging-kafka'

./gradlew addExtension --extensions='messaging-kafka'

This will add the following to your build file:

<dependency>

<groupId>io.quarkus</groupId>

<artifactId>quarkus-messaging-kafka</artifactId>

</dependency>

implementation("io.quarkus:quarkus-messaging-kafka")

|

The extension includes |

3. Configuring SmallRye Kafka Connector

Because SmallRye Reactive Messaging framework supports different messaging backends like Apache Kafka, AMQP, Apache Camel, JMS, MQTT, etc., it employs a generic vocabulary:

-

Applications send and receive messages. A message wraps a payload and can be extended with some metadata. With the Kafka connector, a message corresponds to a Kafka record.

-

Messages transit on channels. Application components connect to channels to publish and consume messages. The Kafka connector maps channels to Kafka topics.

-

Channels are connected to message backends using connectors. Connectors are configured to map incoming messages to a specific channel (consumed by the application) and collect outgoing messages sent to a specific channel. Each connector is dedicated to a specific messaging technology. For example, the connector dealing with Kafka is named smallrye-kafka.

A minimal configuration for the Kafka connector with an incoming channel looks like the following:

%prod.kafka.bootstrap.servers=kafka:9092 (1)

mp.messaging.incoming.prices.connector=smallrye-kafka (2)

| 1 | Configure the broker location for the production profile. You can configure it globally or per channel using the mp.messaging.incoming.$channel.bootstrap.servers property.

In dev mode and when running tests, Dev Services for Kafka automatically starts a Kafka broker.

When not provided, this property defaults to localhost:9092. |

| 2 | Configure the connector to manage the prices channel. By default, the topic name is the same as the channel name. You can configure the topic attribute to override it. |

The %prod prefix indicates that the property is only used when the application runs in prod mode (so not in dev or test). Refer to the Profile documentation for further details.
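The lookup rules from the callouts above can be sketched in plain Java. This is only an illustration: `ChannelConfigSketch` is a hypothetical helper, not part of Quarkus or SmallRye, and it assumes that a per-channel `bootstrap.servers` value takes precedence over the global `kafka.bootstrap.servers` one.

```java
import java.util.Map;

// Hypothetical helper (not a Quarkus API): mimics how the settings
// described above could be resolved for an incoming channel.
final class ChannelConfigSketch {

    // Assumption: the per-channel property wins over the global one.
    // localhost:9092 is the documented default when neither is set.
    static String bootstrapServers(Map<String, String> config, String channel) {
        String perChannel = config.get("mp.messaging.incoming." + channel + ".bootstrap.servers");
        if (perChannel != null) {
            return perChannel;
        }
        return config.getOrDefault("kafka.bootstrap.servers", "localhost:9092");
    }

    // The topic name defaults to the channel name unless the topic attribute is set.
    static String topic(Map<String, String> config, String channel) {
        return config.getOrDefault("mp.messaging.incoming." + channel + ".topic", channel);
    }
}
```

With an empty configuration, the `prices` channel would read from the `prices` topic on `localhost:9092`.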

|

|

Connector auto-attachment

If you have a single connector on your classpath, you can omit the This auto-attachment can be disabled using: |

4. Receiving messages from Kafka

Continuing from the previous minimal configuration, your Quarkus application can receive message payload directly:

import org.eclipse.microprofile.reactive.messaging.Incoming;

import jakarta.enterprise.context.ApplicationScoped;

@ApplicationScoped

public class PriceConsumer {

@Incoming("prices")

public void consume(double price) {

// process your price.

}

}

There are several other ways your application can consume incoming messages:

@Incoming("prices")

public CompletionStage<Void> consume(Message<Double> msg) {

// access record metadata

var metadata = msg.getMetadata(IncomingKafkaRecordMetadata.class).orElseThrow();

// process the message payload.

double price = msg.getPayload();

// Acknowledge the incoming message (commit the offset)

return msg.ack();

}

The Message type lets the consuming method access the incoming message metadata and handle the acknowledgment manually.

We’ll explore different acknowledgment strategies in Commit Strategies.

If you want to access the Kafka record objects directly, use:

@Incoming("prices")

public void consume(ConsumerRecord<String, Double> record) {

String key = record.key(); // Can be `null` if the incoming record has no key

Double value = record.value(); // Can be `null` if the incoming record has no value

String topic = record.topic();

int partition = record.partition();

// ...

}

ConsumerRecord is provided by the underlying Kafka client and can be injected directly into the consumer method.

Another simpler approach consists in using Record:

@Incoming("prices")

public void consume(Record<String, Double> record) {

String key = record.key(); // Can be `null` if the incoming record has no key

Double value = record.value(); // Can be `null` if the incoming record has no value

}

Record is a simple wrapper around the key and payload of the incoming Kafka record.

Alternatively, your application can inject a Multi in your bean and subscribe to its events, as in the following example:

import io.smallrye.mutiny.Multi;

import org.eclipse.microprofile.reactive.messaging.Channel;

import jakarta.inject.Inject;

import jakarta.ws.rs.GET;

import jakarta.ws.rs.Path;

import jakarta.ws.rs.Produces;

import jakarta.ws.rs.core.MediaType;

import org.jboss.resteasy.reactive.RestStreamElementType;

@Path("/prices")

public class PriceResource {

@Inject

@Channel("prices")

Multi<Double> prices;

@GET

@Path("/prices")

@RestStreamElementType(MediaType.TEXT_PLAIN)

public Multi<Double> stream() {

return prices;

}

}

This is a good example of how to integrate a Kafka consumer with a downstream component, in this case by exposing it as a Server-Sent Events endpoint.

|

When consuming messages with |

The following types can be injected as channels:

@Inject @Channel("prices") Multi<Double> streamOfPayloads;

@Inject @Channel("prices") Multi<Message<Double>> streamOfMessages;

@Inject @Channel("prices") Publisher<Double> publisherOfPayloads;

@Inject @Channel("prices") Publisher<Message<Double>> publisherOfMessages;

As with the previous Message example, if your injected channel receives payloads (Multi<T>), it acknowledges the message automatically and supports multiple subscribers.

If your injected channel receives Message (Multi<Message<T>>), you will be responsible for the acknowledgment and broadcasting.

We will explore sending broadcast messages in Broadcasting messages on multiple consumers.

|

Injecting |

4.1. Blocking processing

Reactive Messaging invokes your method on an I/O thread.

See the Quarkus Reactive Architecture documentation for further details on this topic.

But, you often need to combine Reactive Messaging with blocking processing such as database interactions.

For this, you need to use the @Blocking annotation indicating that the processing is blocking and should not be run on the caller thread.

For example, the following code illustrates how you can store incoming payloads to a database using Hibernate with Panache:

import io.smallrye.reactive.messaging.annotations.Blocking;

import org.eclipse.microprofile.reactive.messaging.Incoming;

import jakarta.enterprise.context.ApplicationScoped;

import jakarta.transaction.Transactional;

@ApplicationScoped

public class PriceStorage {

@Incoming("prices")

@Blocking

@Transactional

public void store(int priceInUsd) {

Price price = new Price();

price.value = priceInUsd;

price.persist();

}

}

The complete example is available in the kafka-panache-quickstart directory.

|

There are 2

They have the same effect. Thus, you can use both. The first one provides more fine-grained tuning such as the worker pool to use and whether it preserves the order. The second one, used also with other reactive features of Quarkus, uses the default worker pool and preserves the order. Detailed information on the usage of |

|

@RunOnVirtualThread

For running the blocking processing on Java virtual threads, see the Quarkus Virtual Thread support with Reactive Messaging documentation. |

|

@Transactional

If your method is annotated with |

4.2. Acknowledgment Strategies

All messages received by a consumer must be acknowledged.

In the absence of acknowledgment, the processing is considered in error.

If the consumer method receives a Record or a payload, the message will be acked on method return, also known as Strategy.POST_PROCESSING.

If the consumer method returns another reactive stream or CompletionStage, the message will be acked when the downstream message is acked.

You can override the default behavior to ack the message on arrival (Strategy.PRE_PROCESSING),

or to not ack the message at all (Strategy.NONE), by annotating the consumer method as in the following example:

@Incoming("prices")

@Acknowledgment(Acknowledgment.Strategy.PRE_PROCESSING)

public void process(double price) {

// process price

}

If the consumer method receives a Message, the acknowledgment strategy is Strategy.MANUAL

and the consumer method is in charge of acking or nacking the message.

@Incoming("prices")

public CompletionStage<Void> process(Message<Double> msg) {

// process price

return msg.ack();

}

As mentioned above, the method can also override the acknowledgment strategy to PRE_PROCESSING or NONE.

4.3. Commit Strategies

When a message produced from a Kafka record is acknowledged, the connector invokes a commit strategy. These strategies decide when the consumer offset for a specific topic/partition is committed. Committing an offset indicates that all previous records have been processed. It is also the position where the application would restart the processing after a crash recovery or a restart.

Committing every offset has performance penalties as Kafka offset management can be slow. However, not committing the offset often enough may lead to message duplication if the application crashes between two commits.

The Kafka connector supports four strategies:

- throttled keeps track of received messages and commits an offset of the latest acked message in sequence (meaning, all previous messages were also acked). This strategy guarantees at-least-once delivery even if the channel performs asynchronous processing. The connector tracks the received records and periodically (period specified by auto.commit.interval.ms, default: 5000 ms) commits the highest consecutive offset. The connector will be marked as unhealthy if a message associated with a record is not acknowledged in throttled.unprocessed-record-max-age.ms (default: 60000 ms). Indeed, this strategy cannot commit the offset as soon as a single record processing fails. If throttled.unprocessed-record-max-age.ms is set to less than or equal to 0, it does not perform any health check verification. Such a setting might lead to running out of memory if there are "poison pill" messages (that are never acked). This strategy is the default if enable.auto.commit is not explicitly set to true.

- checkpoint allows persisting consumer offsets on a state store, instead of committing them back to the Kafka broker. Using the CheckpointMetadata API, consumer code can persist a processing state with the record offset to mark the progress of a consumer. When the processing continues from a previously persisted offset, it seeks the Kafka consumer to that offset and also restores the persisted state, continuing the stateful processing from where it left off. The checkpoint strategy holds locally the processing state associated with the latest offset, and persists it periodically to the state store (period specified by auto.commit.interval.ms, default: 5000). The connector will be marked as unhealthy if no processing state is persisted to the state store in checkpoint.unsynced-state-max-age.ms (default: 10000). If checkpoint.unsynced-state-max-age.ms is set to less than or equal to 0, it does not perform any health check verification. For more information, see Stateful processing with Checkpointing.

- latest commits the record offset received by the Kafka consumer as soon as the associated message is acknowledged (if the offset is higher than the previously committed offset). This strategy provides at-least-once delivery if the channel processes the message without performing any asynchronous processing. Specifically, the offset of the most recent acknowledged message will always be committed, even if older messages have not finished being processed. In case of an incident such as a crash, processing would restart after the last commit, leading to older messages never being successfully and fully processed, which would appear as message loss. This strategy should not be used in high-load environments, as offset commit is expensive. However, it reduces the risk of duplicates.

- ignore performs no commit. This strategy is the default strategy when the consumer is explicitly configured with enable.auto.commit set to true. It delegates the offset commit to the underlying Kafka client. When enable.auto.commit is true, this strategy DOES NOT guarantee at-least-once delivery. SmallRye Reactive Messaging processes records asynchronously, so offsets may be committed for records that have been polled but not yet processed. In case of a failure, only records that were not committed yet will be re-processed.
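The difference between the throttled and latest strategies described above can be illustrated with a small, self-contained sketch. It is not the SmallRye implementation: `CommitPositionSketch` is a hypothetical class that only tracks which position each strategy would be allowed to commit, using the Kafka convention that a committed position is the offset of the next record to read.

```java
import java.util.HashSet;
import java.util.Set;

// Illustrative only: tracks the position each strategy could commit.
final class CommitPositionSketch {
    private final Set<Long> acked = new HashSet<>();
    private long nextInSequence; // first offset not yet acked in sequence
    private long highestAcked;   // highest acked offset, regardless of gaps

    CommitPositionSketch(long firstOffset) {
        this.nextInSequence = firstOffset;
        this.highestAcked = firstOffset - 1;
    }

    void ack(long offset) {
        if (offset < nextInSequence) {
            return; // already covered by the consecutive position
        }
        acked.add(offset);
        highestAcked = Math.max(highestAcked, offset);
        // Advance over every offset that is now acked without a gap.
        while (acked.remove(nextInSequence)) {
            nextInSequence++;
        }
    }

    // "throttled": position after the highest consecutive acked offset.
    long throttledPosition() {
        return nextInSequence;
    }

    // "latest": position after the most recently acked offset, even with gaps.
    long latestPosition() {
        return highestAcked + 1;
    }
}
```

If offsets 0, 2 and 3 are acked while offset 1 is still being processed, `throttledPosition()` stays at 1 whereas `latestPosition()` is already 4. A crash at that point would replay offsets 1 to 3 under throttled, but would skip offset 1 under latest.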

|

The Kafka connector disables the Kafka auto commit when it is not explicitly enabled. This behavior differs from the traditional Kafka consumer. If high throughput is important for you, and you are not limited by the downstream, we recommend to either:

|

SmallRye Reactive Messaging enables implementing custom commit strategies. See SmallRye Reactive Messaging documentation for more information.

4.4. Error Handling Strategies

If a message produced from a Kafka record is nacked, a failure strategy is applied. The Kafka connector supports three strategies:

- fail: fail the application, no more records will be processed (default strategy). The offset of the record that has not been processed correctly is not committed.

- ignore: the failure is logged, but the processing continues. The offset of the record that has not been processed correctly is committed.

- dead-letter-queue: the offset of the record that has not been processed correctly is committed, but the record is written to a Kafka dead letter topic.

The strategy is selected using the failure-strategy attribute.

In the case of dead-letter-queue, you can configure the following attributes:

- dead-letter-queue.topic: the topic to use to write the records not processed correctly, default is dead-letter-topic-$channel, with $channel being the name of the channel.

- dead-letter-queue.key.serializer: the serializer used to write the record key on the dead letter queue. By default, it deduces the serializer from the key deserializer.

- dead-letter-queue.value.serializer: the serializer used to write the record value on the dead letter queue. By default, it deduces the serializer from the value deserializer.

The record written on the dead letter queue contains a set of additional headers about the original record:

- dead-letter-reason: the reason of the failure

- dead-letter-cause: the cause of the failure if any

- dead-letter-topic: the original topic of the record

- dead-letter-partition: the original partition of the record (integer mapped to String)

- dead-letter-offset: the original offset of the record (long mapped to String)
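As a rough illustration of the headers listed above, the following sketch builds them as a map of strings. `DeadLetterSketch` is a hypothetical helper, not connector code, and it assumes that the reason and cause are taken from the exception messages, which the list above does not specify.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: shows the shape of the dead letter headers.
final class DeadLetterSketch {

    // Default dead letter topic name for a channel, as documented above.
    static String defaultTopic(String channel) {
        return "dead-letter-topic-" + channel;
    }

    static Map<String, String> headers(String topic, int partition, long offset, Throwable failure) {
        Map<String, String> headers = new LinkedHashMap<>();
        // Assumption: reason and cause are derived from the exception messages.
        headers.put("dead-letter-reason", String.valueOf(failure.getMessage()));
        if (failure.getCause() != null) {
            headers.put("dead-letter-cause", String.valueOf(failure.getCause().getMessage()));
        }
        headers.put("dead-letter-topic", topic);
        // Partition and offset are numbers mapped to String.
        headers.put("dead-letter-partition", Integer.toString(partition));
        headers.put("dead-letter-offset", Long.toString(offset));
        return headers;
    }
}
```

A consumer of the dead letter topic would therefore need to parse the partition and offset headers back into numbers before using them.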

SmallRye Reactive Messaging enables implementing custom failure strategies. See SmallRye Reactive Messaging documentation for more information.

4.4.1. Retrying processing

You can combine Reactive Messaging with SmallRye Fault Tolerance, and retry processing if it failed:

@Incoming("kafka")

@Retry(delay = 10, maxRetries = 5)

public void consume(String v) {

// ... retry if this method throws an exception

}

You can configure the delay, the number of retries, the jitter, etc.

If your method returns a Uni or CompletionStage, you need to add the @NonBlocking annotation:

@Incoming("kafka")

@Retry(delay = 10, maxRetries = 5)

@NonBlocking

public Uni<String> consume(String v) {

// ... retry if this method throws an exception or the returned Uni produces a failure

}

The @NonBlocking annotation is only required with SmallRye Fault Tolerance 5.1.0 and earlier.

Starting with SmallRye Fault Tolerance 5.2.0 (available since Quarkus 2.1.0.Final), it is not necessary.

See SmallRye Fault Tolerance documentation for more information.

|

The incoming messages are acknowledged only once the processing completes successfully. So, it commits the offset after the successful processing. If the processing still fails, even after all retries, the message is nacked and the failure strategy is applied.
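The interplay between retries and acknowledgment described above can be sketched without any framework. `RetryThenNackSketch` is a hypothetical class that leaves out the delay and jitter; it assumes that `maxRetries` counts the retries after the first attempt, so a handler is invoked at most `maxRetries + 1` times.

```java
import java.util.function.Consumer;

// Illustrative only: retry a handler, then ack or nack the message.
final class RetryThenNackSketch {
    enum Outcome { ACKED, NACKED }

    static <T> Outcome process(T payload, Consumer<T> handler, int maxRetries) {
        // One initial attempt plus up to maxRetries retries.
        for (int attempt = 0; attempt <= maxRetries; attempt++) {
            try {
                handler.accept(payload);
                return Outcome.ACKED; // success: the message is acknowledged
            } catch (RuntimeException e) {
                // failure: try again until the retries are exhausted
            }
        }
        return Outcome.NACKED; // still failing: the failure strategy applies
    }
}
```

A handler that fails twice and then succeeds ends up acked after three invocations, while a handler that always fails is nacked after six invocations when `maxRetries` is 5.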

4.4.2. Handling Deserialization Failures

When a deserialization failure occurs, you can intercept it and provide a failure strategy.

To achieve this, you need to create a bean implementing DeserializationFailureHandler<T> interface:

@ApplicationScoped

@Identifier("failure-retry") // Set the name of the failure handler

public class MyDeserializationFailureHandler

implements DeserializationFailureHandler<JsonObject> { // Specify the expected type

@Override

public JsonObject decorateDeserialization(Uni<JsonObject> deserialization, String topic, boolean isKey,

String deserializer, byte[] data, Headers headers) {

return deserialization

.onFailure().retry().atMost(3)

.await().atMost(Duration.ofMillis(200));

}

}

To use this failure handler, the bean must be exposed with the @Identifier qualifier and the connector configuration must specify the attribute mp.messaging.incoming.$channel.[key|value]-deserialization-failure-handler (for key or value deserializers).

The handler is called with details of the deserialization, including the action represented as Uni<T>.

On the deserialization Uni, failure strategies such as retrying, providing a fallback value, or applying a timeout can be implemented.

If you don’t configure a deserialization failure handler and a deserialization failure happens, the application is marked unhealthy.

You can also ignore the failure, which will log the exception and produce a null value.

To enable this behavior, set the mp.messaging.incoming.$channel.fail-on-deserialization-failure attribute to false.

If the fail-on-deserialization-failure attribute is set to false and the failure-strategy attribute is dead-letter-queue, the failed record will be sent to the corresponding dead letter queue topic.

4.5. Consumer Groups

In Kafka, a consumer group is a set of consumers which cooperate to consume data from a topic. A topic is divided into a set of partitions. The partitions of a topic are assigned among the consumers in the group, which allows consumption throughput to scale. Note that each partition is assigned to a single consumer from a group. However, a consumer can be assigned multiple partitions if the number of partitions is greater than the number of consumers in the group.

Let’s explore briefly different producer/consumer patterns and how to implement them using Quarkus:

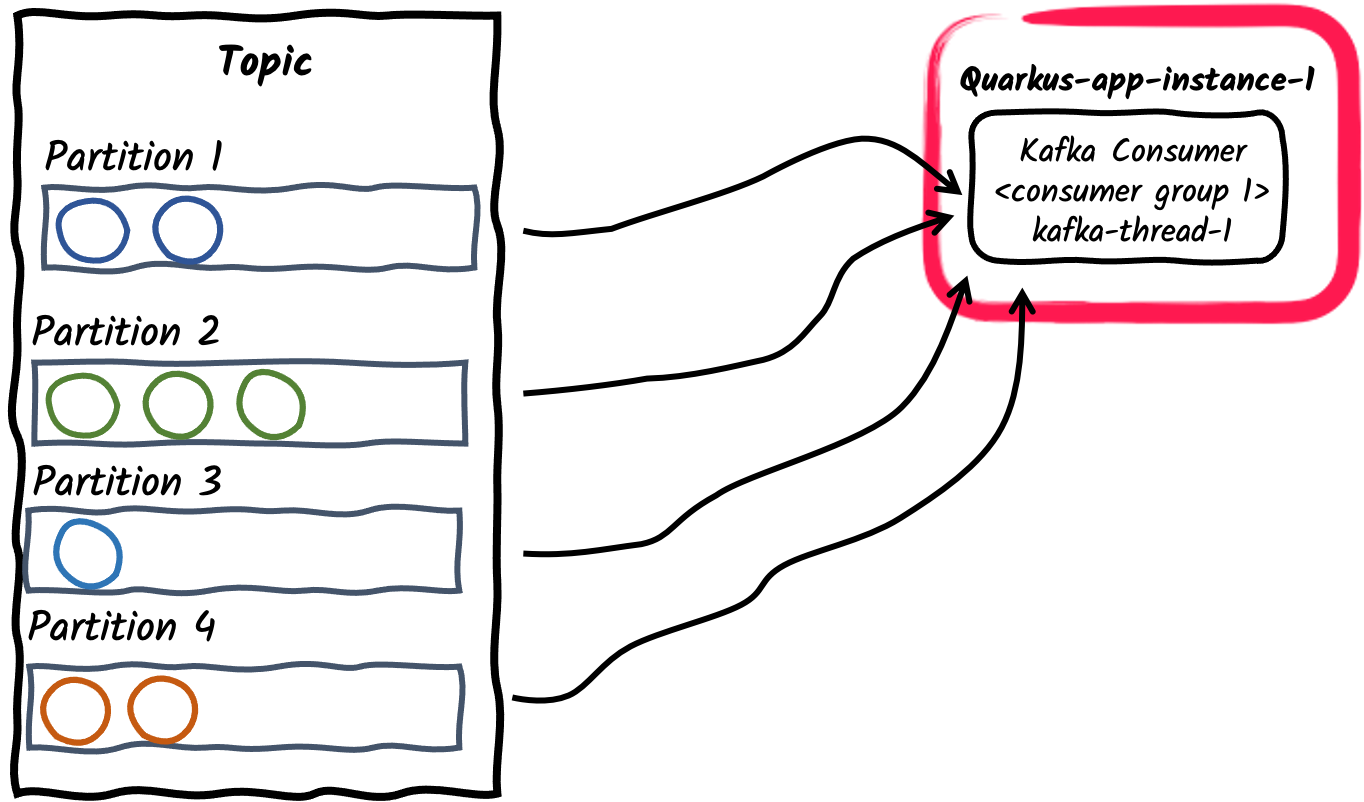

-

Single consumer thread inside a consumer group

This is the default behavior of an application subscribing to a Kafka topic: Each Kafka connector will create a single consumer thread and place it inside a single consumer group. Consumer group id defaults to the application name as set by the

quarkus.application.nameconfiguration property. It can also be set using thekafka.group.idproperty.

-

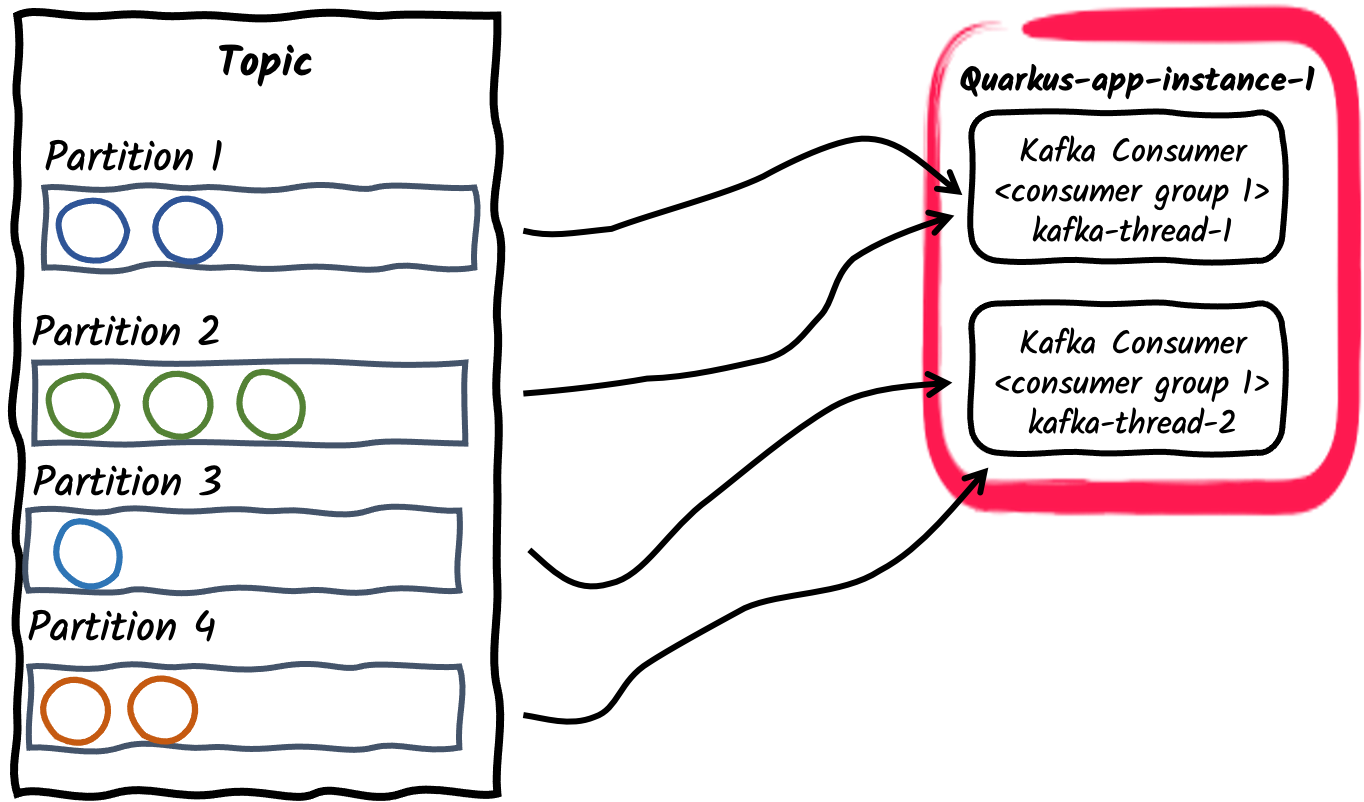

Multiple consumer threads inside a consumer group

For a given application instance, the number of consumers inside the consumer group can be configured using

mp.messaging.incoming.$channel.concurrencyproperty. The partitions of the subscribed topic will be divided among the consumer threads. Note that if theconcurrencyvalue exceed the number of partitions of the topic, some consumer threads won’t be assigned any partitions. Deprecation

DeprecationThe concurrency attribute provides a connector agnostic way for non-blocking concurrent channels and replaces the Kafka connector specific

partitionsattribute. Thepartitionsattribute is therefore deprecated and will be removed in future releases. -

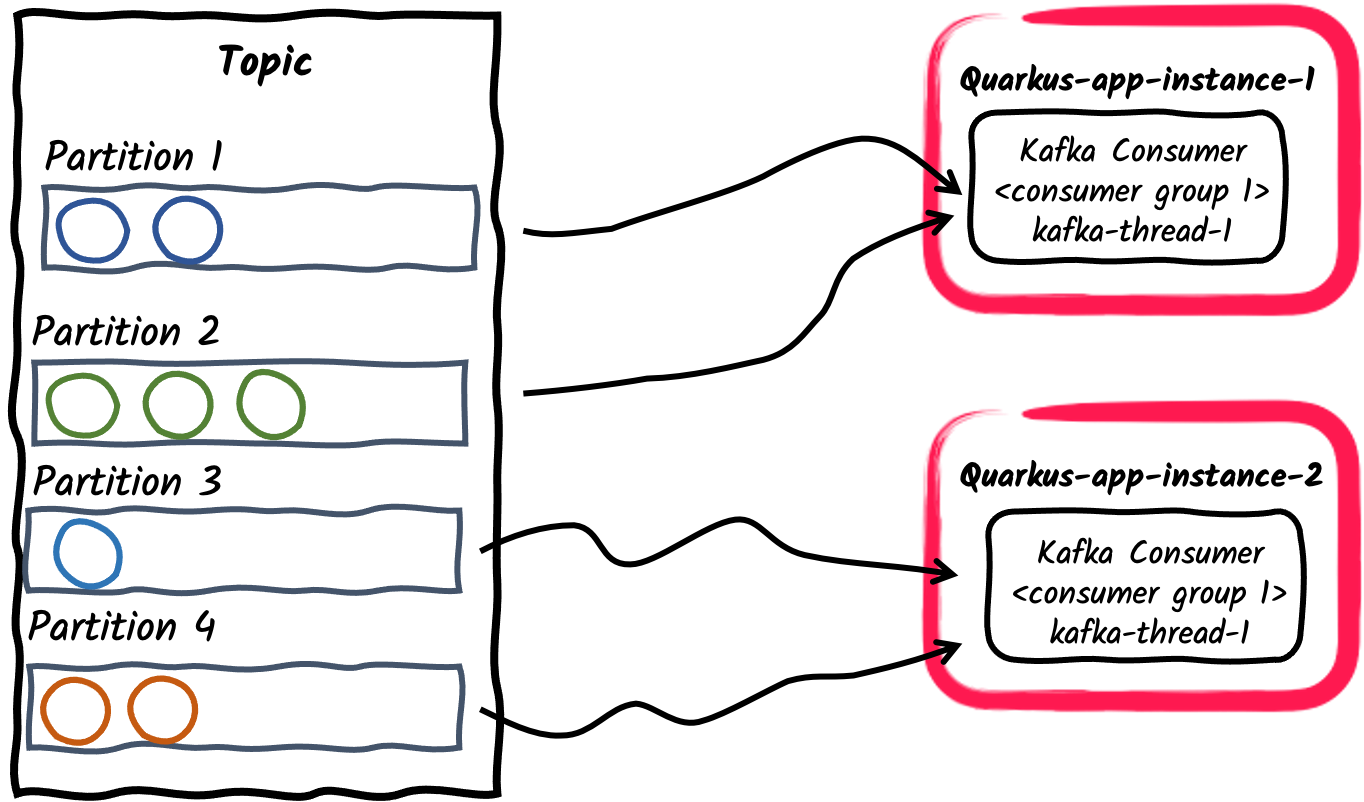

Multiple consumer applications inside a consumer group

Similar to the previous example, multiple instances of an application can subscribe to a single consumer group, configured via

mp.messaging.incoming.$channel.group.idproperty, or left default to the application name. This in turn will divide partitions of the topic among application instances.

-

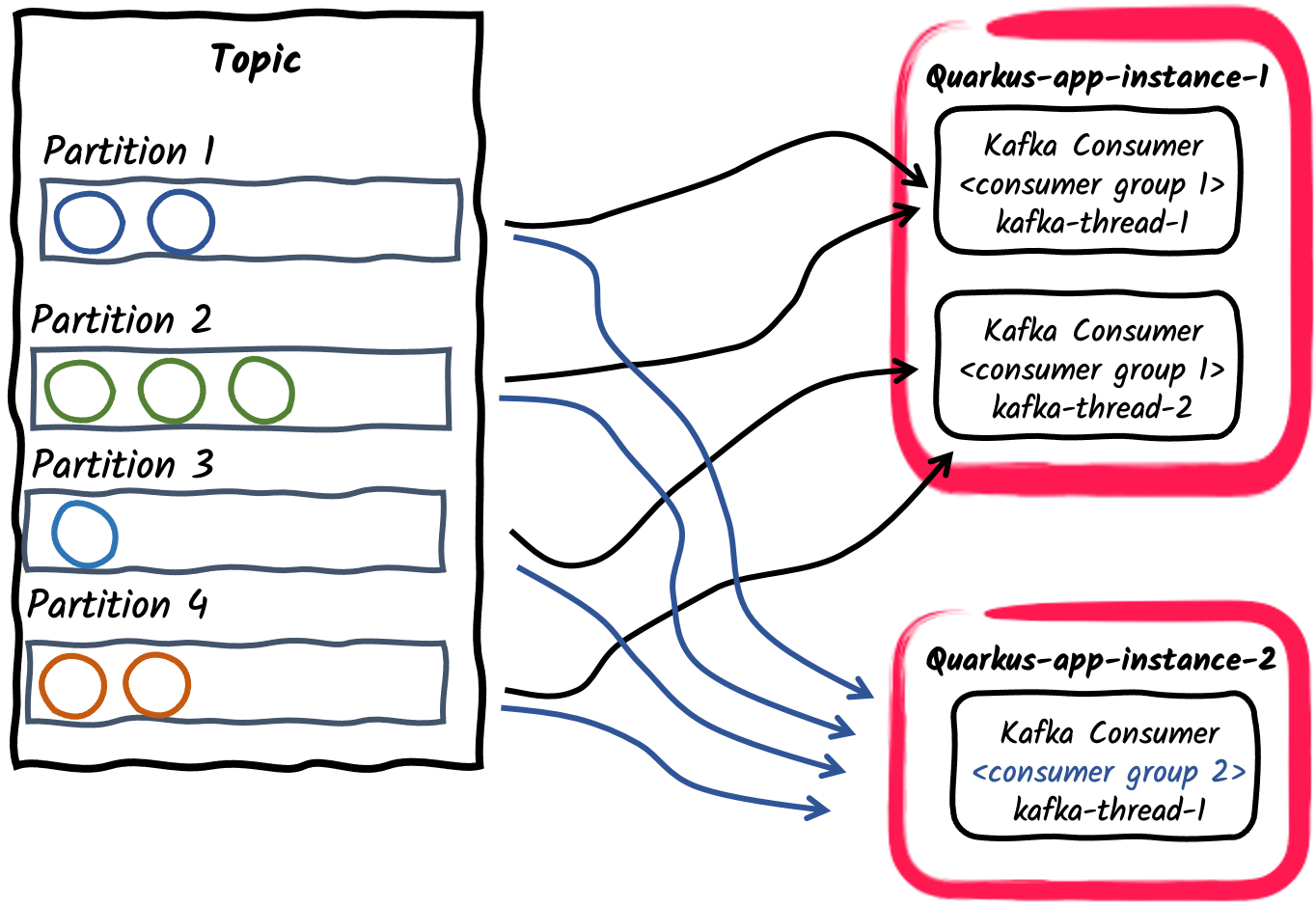

Pub/Sub: Multiple consumer groups subscribed to a topic

Lastly different applications can subscribe independently to same topics using different consumer group ids. For example, messages published to a topic called orders can be consumed independently on two consumer applications, one with

mp.messaging.incoming.orders.group.id=invoicingand second withmp.messaging.incoming.orders.group.id=shipping. Different consumer groups can thus scale independently according to the message consumption requirements.
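The way partitions are spread over the consumers of one group, and why extra consumer threads can stay idle, can be illustrated with a toy assignment. This sketch uses a simple round-robin distribution for readability; it is not Kafka's actual assignor, and `PartitionAssignmentSketch` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model: distributes partition numbers over consumers in round-robin order.
final class PartitionAssignmentSketch {

    // Returns one list of partition numbers per consumer.
    static List<List<Integer>> assign(int partitions, int consumers) {
        List<List<Integer>> assignment = new ArrayList<>();
        for (int c = 0; c < consumers; c++) {
            assignment.add(new ArrayList<>());
        }
        // Each partition goes to exactly one consumer of the group.
        for (int p = 0; p < partitions; p++) {
            assignment.get(p % consumers).add(p);
        }
        return assignment;
    }
}
```

With three partitions and two consumers, one consumer handles partitions 0 and 2 and the other handles partition 1. With two partitions and three consumers, the third consumer receives nothing, which mirrors the note about a concurrency value larger than the number of partitions.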

|

A common business requirement is to consume and process Kafka records in order. The Kafka broker preserves order of records inside a partition and not inside a topic. Therefore, it is important to think about how records are partitioned inside a topic. The default partitioner uses the record key hash to compute the partition for a record, or when the key is not defined, chooses a partition randomly per batch of records. During normal operation, a Kafka consumer preserves the order of records inside each partition assigned to it.

SmallRye Reactive Messaging keeps this order for processing, unless Note that due to consumer rebalances, Kafka consumers only guarantee at-least-once processing of single records, meaning that uncommitted records can be processed again by consumers. |

4.5.1. Consumer Rebalance Listener

Inside a consumer group, as new group members arrive and old members leave, the partitions are re-assigned so that each member receives a proportional share of the partitions.

This is known as rebalancing the group.

To handle offset commit and assigned partitions yourself, you can provide a consumer rebalance listener.

To achieve this, implement the io.smallrye.reactive.messaging.kafka.KafkaConsumerRebalanceListener interface and expose it as a CDI bean with the @Identifier qualifier.

A common use case is to store offsets in a separate data store to implement exactly-once semantics, or to start the processing at a specific offset.

The listener is invoked every time the consumer topic/partition assignment changes.

For example, when the application starts, it invokes the partitionsAssigned callback with the initial set of topics/partitions associated with the consumer.

If, later, this set changes, it calls the partitionsRevoked and partitionsAssigned callbacks again, so you can implement custom logic.

Note that the rebalance listener methods are called from the Kafka polling thread and will block the caller thread until completion. That’s because the rebalance protocol has synchronization barriers, and asynchronous code in a rebalance listener may be executed after the synchronization barrier.

When topics/partitions are assigned or revoked from a consumer, it pauses the message delivery and resumes once the rebalance completes.

If the rebalance listener handles offset commit on behalf of the user (using the NONE commit strategy),

the rebalance listener must commit the offset synchronously in the partitionsRevoked callback.

We also recommend applying the same logic when the application stops.

Unlike the ConsumerRebalanceListener from Apache Kafka, the io.smallrye.reactive.messaging.kafka.KafkaConsumerRebalanceListener methods pass the Kafka Consumer and the set of topics/partitions.

In the following example we set up a consumer that always starts on messages from at most 10 minutes ago (or offset 0).

First we need to provide a bean that implements io.smallrye.reactive.messaging.kafka.KafkaConsumerRebalanceListener and is annotated with io.smallrye.common.annotation.Identifier.

We then must configure our inbound connector to use this bean.

package inbound;

import io.smallrye.common.annotation.Identifier;

import io.smallrye.reactive.messaging.kafka.KafkaConsumerRebalanceListener;

import org.apache.kafka.clients.consumer.Consumer;

import org.apache.kafka.clients.consumer.OffsetAndTimestamp;

import org.apache.kafka.common.TopicPartition;

import jakarta.enterprise.context.ApplicationScoped;

import java.util.Collection;

import java.util.HashMap;

import java.util.Map;

import java.util.logging.Logger;

@ApplicationScoped

@Identifier("rebalanced-example.rebalancer")

public class KafkaRebalancedConsumerRebalanceListener implements KafkaConsumerRebalanceListener {

private static final Logger LOGGER = Logger.getLogger(KafkaRebalancedConsumerRebalanceListener.class.getName());

/**

* When receiving a list of partitions, will search for the earliest offset within 10 minutes

* and seek the consumer to it.

*

* @param consumer underlying consumer

* @param partitions set of assigned topic partitions

*/

@Override

public void onPartitionsAssigned(Consumer<?, ?> consumer, Collection<TopicPartition> partitions) {

long now = System.currentTimeMillis();

long shouldStartAt = now - 600_000L; // 10 minutes ago

Map<TopicPartition, Long> request = new HashMap<>();

for (TopicPartition partition : partitions) {

LOGGER.info("Assigned " + partition);

request.put(partition, shouldStartAt);

}

Map<TopicPartition, OffsetAndTimestamp> offsets = consumer.offsetsForTimes(request);

for (Map.Entry<TopicPartition, OffsetAndTimestamp> position : offsets.entrySet()) {

long target = position.getValue() == null ? 0L : position.getValue().offset();

LOGGER.info("Seeking position " + target + " for " + position.getKey());

consumer.seek(position.getKey(), target);

}

}

}

package inbound;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.eclipse.microprofile.reactive.messaging.Acknowledgment;

import org.eclipse.microprofile.reactive.messaging.Incoming;

import org.eclipse.microprofile.reactive.messaging.Message;

import jakarta.enterprise.context.ApplicationScoped;

import java.util.concurrent.CompletableFuture;

import java.util.concurrent.CompletionStage;

@ApplicationScoped

public class KafkaRebalancedConsumer {

@Incoming("rebalanced-example")

@Acknowledgment(Acknowledgment.Strategy.NONE)

public CompletionStage<Void> consume(Message<ConsumerRecord<Integer, String>> message) {

// We don't need to ACK messages because in this example,

// we set offset during consumer rebalance

return CompletableFuture.completedFuture(null);

}

}

To configure the inbound connector to use the provided listener, we either set the consumer rebalance listener’s identifier:

mp.messaging.incoming.rebalanced-example.consumer-rebalance-listener.name=rebalanced-example.rebalancer

Or have the listener’s name be the same as the group id:

mp.messaging.incoming.rebalanced-example.group.id=rebalanced-example.rebalancer

Setting the consumer rebalance listener’s name takes precedence over using the group id.

4.5.2. Using unique consumer groups

If you want to process all the records from a topic (from its beginning), you need:

- to set auto.offset.reset = earliest

- to assign your consumer to a consumer group not used by any other application.

Quarkus generates a UUID that changes between two executions (including in dev mode). So, you are sure no other consumer uses it, and you receive a new unique group id every time your application starts.

You can use that generated UUID as the consumer group as follows:

mp.messaging.incoming.your-channel.auto.offset.reset=earliest

mp.messaging.incoming.your-channel.group.id=${quarkus.uuid}

If the group.id attribute is not set, it defaults to the quarkus.application.name configuration property.

|

4.5.3. Manual topic-partition assignment

The assign-seek channel attribute allows manually assigning topic-partitions to a Kafka incoming channel,

and optionally seek to a specified offset in the partition to start consuming records.

If assign-seek is used, the consumer will not be dynamically subscribed to topics,

but instead will statically assign the described partitions.

With manual topic-partition assignment, rebalancing doesn’t happen and therefore rebalance listeners are never called.

The attribute takes a list of triplets separated by commas: <topic>:<partition>:<offset>.

For example, the configuration

mp.messaging.incoming.data.assign-seek=topic1:0:10, topic2:1:20

assigns the consumer to:

- Partition 0 of topic 'topic1', setting the initial position at offset 10.

- Partition 1 of topic 'topic2', setting the initial position at offset 20.

The topic, partition, and offset in each triplet can have the following variations:

- If the topic is omitted, the configured topic will be used.

- If the offset is omitted, partitions are assigned to the consumer but the consumer does not seek to an offset.

- If offset is 0, it seeks to the beginning of the topic-partition.

- If offset is -1, it seeks to the end of the topic-partition.
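To make the triplet format concrete, here is a minimal parsing sketch. `AssignSeekSketch` is a hypothetical class and not the connector's parser: it only handles complete `<topic>:<partition>:<offset>` triplets and rejects the shortened forms, because the exact syntax for omitting the topic or the offset is not spelled out above.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical parser for complete <topic>:<partition>:<offset> triplets.
final class AssignSeekSketch {

    record Seek(String topic, int partition, long offset) {
        // An offset of -1 means "seek to the end of the topic-partition".
        boolean seeksToEnd() {
            return offset == -1;
        }
    }

    static List<Seek> parse(String value) {
        List<Seek> seeks = new ArrayList<>();
        for (String triplet : value.split(",")) {
            String[] parts = triplet.trim().split(":");
            if (parts.length != 3) {
                throw new IllegalArgumentException(
                        "Expected <topic>:<partition>:<offset> but got: " + triplet.trim());
            }
            seeks.add(new Seek(parts[0], Integer.parseInt(parts[1]), Long.parseLong(parts[2])));
        }
        return seeks;
    }
}
```

Parsing `topic1:0:10, topic2:1:20` yields two entries: partition 0 of `topic1` at offset 10 and partition 1 of `topic2` at offset 20.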

4.6. Receiving Kafka Records in Batches

By default, incoming methods receive each Kafka record individually.

Under the hood, Kafka consumer clients poll the broker constantly and receive records in batches, presented inside the ConsumerRecords container.

In batch mode, your application can receive all the records returned by the consumer poll in one go.

To achieve this you need to specify a compatible container type to receive all the data:

@Incoming("prices")

public void consume(List<Double> prices) {

for (double price : prices) {

// process price

}

}

The incoming method can also receive Message<List<Payload>>, Message<ConsumerRecords<Key, Payload>>, and ConsumerRecords<Key, Payload> types.

They give access to record details such as offset or timestamp:

@Incoming("prices")

public CompletionStage<Void> consumeMessage(Message<ConsumerRecords<String, Double>> records) {

for (ConsumerRecord<String, Double> record : records.getPayload()) {

Double payload = record.value();

String topic = record.topic();

// process messages

}

// ack will commit the latest offsets (per partition) of the batch.

return records.ack();

}

Note that the successful processing of the incoming record batch will commit the latest offsets for each partition received inside the batch. The configured commit strategy will be applied for these records only.

Conversely, if the processing throws an exception, all messages are nacked, applying the failure strategy for all the records inside the batch.
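The phrase "latest offsets for each partition received inside the batch" can be illustrated with a small sketch that reduces a batch to one offset per topic-partition. `BatchOffsetsSketch` and its `Rec` type are hypothetical stand-ins for the Kafka client classes, used here so the example runs without any dependency.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Illustrative only: finds the highest offset per topic-partition in a batch.
final class BatchOffsetsSketch {

    // Minimal stand-in for a consumed record (topic, partition, offset).
    record Rec(String topic, int partition, long offset) {
    }

    // Keys have the form "<topic>-<partition>".
    static Map<String, Long> latestOffsets(List<Rec> batch) {
        Map<String, Long> latest = new TreeMap<>();
        for (Rec rec : batch) {
            latest.merge(rec.topic() + "-" + rec.partition(), rec.offset(), Long::max);
        }
        return latest;
    }
}
```

For a batch holding offsets 10 and 11 of partition 0 and offset 7 of partition 1, acknowledging the batch would hand offset 11 for partition 0 and offset 7 for partition 1 to the commit strategy.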

|

Quarkus autodetects batch types for incoming channels and sets batch configuration automatically.

You can configure batch mode explicitly with |

4.7. Stateful processing with Checkpointing

|

The |

SmallRye Reactive Messaging checkpoint commit strategy allows consumer applications to process messages in a stateful manner, while also respecting Kafka consumer scalability.

An incoming channel with checkpoint commit strategy persists consumer offsets on an external

state store, such as a relational database or a key-value store.

As a result of processing consumed records, the consumer application can accumulate an internal state for each topic-partition assigned to the Kafka consumer.

This local state will be periodically persisted to the state store and will be associated with the offset of the record that produced it.

This strategy does not commit any offsets to the Kafka broker. When new partitions get assigned to the consumer, for example when the consumer restarts or the consumer group scales, the consumer resumes the processing from the latest checkpointed offset with its saved state.

The @Incoming channel consumer code can manipulate the processing state through the CheckpointMetadata API.

For example, a consumer calculating the moving average of prices received on a Kafka topic would look like the following:

package org.acme;

import java.util.concurrent.CompletionStage;

import jakarta.enterprise.context.ApplicationScoped;

import org.eclipse.microprofile.reactive.messaging.Incoming;

import org.eclipse.microprofile.reactive.messaging.Message;

import io.smallrye.reactive.messaging.kafka.KafkaRecord;

import io.smallrye.reactive.messaging.kafka.commit.CheckpointMetadata;

@ApplicationScoped

public class MeanCheckpointConsumer {

@Incoming("prices")

public CompletionStage<Void> consume(Message<Double> record) {

// Get the `CheckpointMetadata` from the incoming message

CheckpointMetadata<AveragePrice> checkpoint = CheckpointMetadata.fromMessage(record);

// `CheckpointMetadata` allows transforming the processing state

// Applies the given function, starting from a new `AveragePrice` when no previous state exists

checkpoint.transform(new AveragePrice(), average -> average.update(record.getPayload()), /* persistOnAck */ true);

// `persistOnAck` flag set to true, ack will persist the processing state

// associated with the latest offset (per partition).

return record.ack();

}

static class AveragePrice {

long count;

double mean;

AveragePrice update(double newPrice) {

mean += ((newPrice - mean) / ++count);

return this;

}

}

}

The transform method applies the transformation function to the current state, producing a changed state and registering it locally for checkpointing.

By default, the local state is persisted to the state store periodically, at the interval specified by auto.commit.interval.ms (default: 5000).

If the persistOnAck flag is set, the latest state is persisted to the state store eagerly on message acknowledgment.

The setNext method works similarly, but sets the latest state directly.

The checkpoint commit strategy tracks when a processing state is last persisted for each topic-partition.

If an outstanding state change cannot be persisted for checkpoint.unsynced-state-max-age.ms (default: 10000), the channel is marked unhealthy.
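The incremental update used by AveragePrice can be checked in isolation. The sketch below copies the same formula into a plain class with no messaging dependency; RunningMean and its accessors are names introduced here for illustration only.

```java
// Plain-Java copy of the incremental mean update, without any messaging dependency.
final class RunningMean {
    private long count;
    private double mean;

    // Folds one more price into the mean without storing the previous prices.
    RunningMean update(double newPrice) {
        mean += (newPrice - mean) / ++count;
        return this;
    }

    double mean() {
        return mean;
    }

    long count() {
        return count;
    }
}
```

Because the state is only a count and a mean, it stays small no matter how many records were processed, which is what makes it cheap to persist at each checkpoint.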

4.7.1. State stores

State store implementations determine where and how the processing states are persisted.

This is configured by the mp.messaging.incoming.[channel-name].checkpoint.state-store property.

The serialization of state objects depends on the state store implementation.

Some state stores need to know the class of the state objects in order to serialize them. In that case, configure the class name using the mp.messaging.incoming.[channel-name].checkpoint.state-type property.

Quarkus provides the following state store implementations:

-

quarkus-redis: Uses the quarkus-redis-client extension to persist processing states. Jackson is used to serialize processing state in JSON. For complex objects it is required to configure the checkpoint.state-type property with the class name of the object. By default, the state store uses the default Redis client, but if a named client is to be used, the client name can be specified using the mp.messaging.incoming.[channel-name].checkpoint.quarkus-redis.client-name property. Processing states will be stored in Redis using the key naming scheme [consumer-group-id]:[topic]:[partition].

For example the configuration of the previous code would be the following:

mp.messaging.incoming.prices.group.id=prices-checkpoint

# ...

mp.messaging.incoming.prices.commit-strategy=checkpoint

mp.messaging.incoming.prices.checkpoint.state-store=quarkus-redis

mp.messaging.incoming.prices.checkpoint.state-type=org.acme.MeanCheckpointConsumer.AveragePrice

# ...

# if using a named redis client

mp.messaging.incoming.prices.checkpoint.quarkus-redis.client-name=my-redis

quarkus.redis.my-redis.hosts=redis://localhost:7000

quarkus.redis.my-redis.password=<redis-pwd>

-

quarkus-hibernate-reactive: Uses the quarkus-hibernate-reactive extension to persist processing states. Processing state objects are required to be a Jakarta Persistence entity and extend the CheckpointEntity class, which handles object identifiers composed of the consumer group id, topic and partition. Therefore, the class name of the entity needs to be configured using the checkpoint.state-type property.

For example the configuration of the previous code would be the following:

mp.messaging.incoming.prices.group.id=prices-checkpoint

# ...

mp.messaging.incoming.prices.commit-strategy=checkpoint

mp.messaging.incoming.prices.checkpoint.state-store=quarkus-hibernate-reactive

mp.messaging.incoming.prices.checkpoint.state-type=org.acme.AveragePriceEntity

With AveragePriceEntity being a Jakarta Persistence entity extending CheckpointEntity:

package org.acme;

import jakarta.persistence.Entity;

import io.quarkus.smallrye.reactivemessaging.kafka.CheckpointEntity;

@Entity

public class AveragePriceEntity extends CheckpointEntity {

public long count;

public double mean;

public AveragePriceEntity update(double newPrice) {

mean += ((newPrice - mean) / ++count);

return this;

}

}

-

quarkus-hibernate-orm: Uses the quarkus-hibernate-orm extension to persist processing states. It is similar to the previous state store, but it uses Hibernate ORM instead of Hibernate Reactive.

When configured, it can use a named persistence-unit for the checkpointing state store:

mp.messaging.incoming.prices.commit-strategy=checkpoint

mp.messaging.incoming.prices.checkpoint.state-store=quarkus-hibernate-orm

mp.messaging.incoming.prices.checkpoint.state-type=org.acme.AveragePriceEntity

mp.messaging.incoming.prices.checkpoint.quarkus-hibernate-orm.persistence-unit=prices

# ... Setup "prices" persistence unit

quarkus.datasource."prices".db-kind=postgresql

quarkus.datasource."prices".username=<your username>

quarkus.datasource."prices".password=<your password>

quarkus.datasource."prices".jdbc.url=jdbc:postgresql://localhost:5432/hibernate_orm_test

quarkus.hibernate-orm."prices".datasource=prices

quarkus.hibernate-orm."prices".packages=org.acme

For instructions on how to implement custom state stores, see Implementing State Stores.

5. Sending messages to Kafka

Configuration for the Kafka connector outgoing channels is similar to that of incoming:

%prod.kafka.bootstrap.servers=kafka:9092 (1)

mp.messaging.outgoing.prices-out.connector=smallrye-kafka (2)

mp.messaging.outgoing.prices-out.topic=prices (3)

| 1 | Configure the broker location for the production profile. You can configure it globally or per channel using the mp.messaging.outgoing.$channel.bootstrap.servers property.

In dev mode and when running tests, Dev Services for Kafka automatically starts a Kafka broker.

When not provided, this property defaults to localhost:9092. |

| 2 | Configure the connector to manage the prices-out channel. |

| 3 | By default, the topic name is the same as the channel name. You can configure the topic attribute to override it. |

|

Inside application configuration, channel names are unique.

Therefore, if you’d like to configure an incoming and outgoing channel on the same topic, you will need to name channels differently (like in the examples of this guide, |

Then, your application can generate messages and publish them to the prices-out channel.

It can use double payloads as in the following snippet:

import io.smallrye.mutiny.Multi;

import org.eclipse.microprofile.reactive.messaging.Outgoing;

import jakarta.enterprise.context.ApplicationScoped;

import java.time.Duration;

import java.util.Random;

@ApplicationScoped

public class KafkaPriceProducer {

private final Random random = new Random();

@Outgoing("prices-out")

public Multi<Double> generate() {

// Build an infinite stream of random prices

// It emits a price every second

return Multi.createFrom().ticks().every(Duration.ofSeconds(1))

.map(x -> random.nextDouble());

}

}

|

You should not call methods annotated with |

Note that the generate method returns a Multi<Double>, which implements the Reactive Streams Publisher interface.

This publisher will be used by the framework to generate messages and send them to the configured Kafka topic.

Instead of returning a payload, you can return an io.smallrye.reactive.messaging.kafka.Record to send key/value pairs:

@Outgoing("out")

public Multi<Record<String, Double>> generate() {

return Multi.createFrom().ticks().every(Duration.ofSeconds(1))

.map(x -> Record.of("my-key", random.nextDouble()));

}

The payload can be wrapped inside org.eclipse.microprofile.reactive.messaging.Message to gain more control over the written records:

@Outgoing("generated-price")

public Multi<Message<Double>> generate() {

return Multi.createFrom().ticks().every(Duration.ofSeconds(1))

.map(x -> Message.of(random.nextDouble())

.addMetadata(OutgoingKafkaRecordMetadata.<String>builder()

.withKey("my-key")

.withTopic("my-key-prices")

.withHeaders(new RecordHeaders().add("my-header", "value".getBytes()))

.build()));

}

OutgoingKafkaRecordMetadata lets you set metadata attributes of the Kafka record, such as key, topic, partition or timestamp.

One use case is to dynamically select the destination topic of a message.

In this case, instead of configuring the topic inside your application configuration file, you need to use the outgoing metadata to set the name of the topic.

Besides method signatures returning a Reactive Streams Publisher (Multi being an implementation of Publisher), an outgoing method can also return a single message.

In this case, the producer uses this method as a generator to create an infinite stream.

@Outgoing("prices-out") T generate(); // T excluding void

@Outgoing("prices-out") Message<T> generate();

@Outgoing("prices-out") Uni<T> generate();

@Outgoing("prices-out") Uni<Message<T>> generate();

@Outgoing("prices-out") CompletionStage<T> generate();

@Outgoing("prices-out") CompletionStage<Message<T>> generate();

5.1. Sending messages with Emitter

Sometimes, you need to have an imperative way of sending messages.

For example, if you need to send a message to a stream when receiving a POST request inside a REST endpoint.

In this case, you cannot use @Outgoing because your method has parameters.

For this, you can use an Emitter.

import java.util.concurrent.CompletionStage;

import org.eclipse.microprofile.reactive.messaging.Channel;

import org.eclipse.microprofile.reactive.messaging.Emitter;

import jakarta.inject.Inject;

import jakarta.ws.rs.POST;

import jakarta.ws.rs.Path;

import jakarta.ws.rs.Consumes;

import jakarta.ws.rs.core.MediaType;

@Path("/prices")

public class PriceResource {

@Inject

@Channel("price-create")

Emitter<Double> priceEmitter;

@POST

@Consumes(MediaType.TEXT_PLAIN)

public void addPrice(Double price) {

CompletionStage<Void> ack = priceEmitter.send(price);

}

}

Sending a payload returns a CompletionStage, completed when the message is acked. If the message transmission fails, the CompletionStage is completed exceptionally with the reason of the nack.
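One way to react to both outcomes is to attach a handler to the returned stage. The sketch below uses only the JDK: a CompletableFuture stands in for the stage returned by the send call, and SendOutcome and describe are hypothetical names introduced for illustration.

```java
import java.util.concurrent.CompletionStage;

final class SendOutcome {
    // Maps the stage returned by a send call to a short status string:
    // normal completion means the message was acked, exceptional completion means it was nacked.
    static CompletionStage<String> describe(CompletionStage<Void> sendResult) {
        return sendResult.handle((ignored, failure) ->
                failure == null ? "acked" : "nacked: " + failure.getMessage());
    }
}
```

In a REST endpoint, the resulting stage could be returned to the caller so that the HTTP response reflects whether the record was written.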

|

The |

|

Using the |

With the Emitter API, you can also encapsulate the outgoing payload inside Message<T>. As with the previous examples, Message lets you handle the ack/nack cases differently.

import java.util.concurrent.CompletableFuture;

import org.eclipse.microprofile.reactive.messaging.Channel;

import org.eclipse.microprofile.reactive.messaging.Emitter;

import jakarta.inject.Inject;

import jakarta.ws.rs.POST;

import jakarta.ws.rs.Path;

import jakarta.ws.rs.Consumes;

import jakarta.ws.rs.core.MediaType;

@Path("/prices")

public class PriceResource {

@Inject @Channel("price-create") Emitter<Double> priceEmitter;

@POST

@Consumes(MediaType.TEXT_PLAIN)

public void addPrice(Double price) {

priceEmitter.send(Message.of(price)

.withAck(() -> {

// Called when the message is acked

return CompletableFuture.completedFuture(null);

})

.withNack(throwable -> {

// Called when the message is nacked

return CompletableFuture.completedFuture(null);

}));

}

}

If you prefer using Reactive Streams APIs, you can use MutinyEmitter, which returns Uni<Void> from the send method.

You can therefore use Mutiny APIs for handling downstream messages and errors.

import org.eclipse.microprofile.reactive.messaging.Channel;

import jakarta.inject.Inject;

import jakarta.ws.rs.POST;

import jakarta.ws.rs.Path;

import jakarta.ws.rs.Consumes;

import jakarta.ws.rs.core.MediaType;

import io.smallrye.mutiny.Uni;

import io.smallrye.reactive.messaging.MutinyEmitter;

@Path("/prices")

public class PriceResource {

@Inject

@Channel("price-create")

MutinyEmitter<Double> priceEmitter;

@POST

@Consumes(MediaType.TEXT_PLAIN)

public Uni<String> addPrice(Double price) {

return priceEmitter.send(price)

.map(x -> "ok")

.onFailure().recoverWithItem("ko");

}

}

It is also possible to block on sending the event to the emitter with the sendAndAwait method.

It only returns from the method when the event is acked or nacked by the receiver.

|

Deprecation

The

The new |

|

Deprecation

|

More information on how to use Emitter can be found in SmallRye Reactive Messaging – Emitters and Channels

5.2. Write Acknowledgement

When a Kafka broker receives a record, its acknowledgement can take time depending on the configuration. Also, records that cannot be written are stored in memory.

By default, the connector waits for Kafka to acknowledge the record before continuing the processing (acknowledging the received Message).

You can disable this by setting the waitForWriteCompletion attribute to false.

Note that the acks attribute has a huge impact on the record acknowledgement.

If a record cannot be written, the message is nacked.
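As a configuration sketch, the two attributes mentioned above could be set per channel as follows. The channel name prices-out is reused from the earlier examples, and the values are illustrative rather than recommendations.

```properties
# Wait for all in-sync replicas to acknowledge each record
mp.messaging.outgoing.prices-out.acks=all

# Alternatively, do not wait for the broker acknowledgement before acking the message
# mp.messaging.outgoing.prices-out.waitForWriteCompletion=false
```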

5.3. Backpressure

The Kafka outbound connector handles back-pressure, monitoring the number of in-flight messages waiting to be written to the Kafka broker.

The number of in-flight messages is configured using the max-inflight-messages attribute and defaults to 1024.

The connector only sends that number of messages concurrently.

No other messages will be sent until at least one in-flight message gets acknowledged by the broker.

Then, the connector writes a new message to Kafka when one of the in-flight messages gets acknowledged.

Be sure to configure Kafka’s batch.size and linger.ms accordingly.

You can also remove the limit of in-flight messages by setting max-inflight-messages to 0.

However, note that the Kafka producer may block if the number of requests reaches max.in.flight.requests.per.connection.
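The in-flight cap described in this section behaves like a counting semaphore: sending takes a slot, and a broker acknowledgement frees one. The following JDK-only sketch models that idea; it is not the connector's implementation, and InFlightLimiter and its method names are hypothetical.

```java
import java.util.concurrent.Semaphore;

// Illustrative model of an in-flight cap; not the connector's actual implementation.
final class InFlightLimiter {
    private final Semaphore permits;

    InFlightLimiter(int maxInFlight) {
        this.permits = new Semaphore(maxInFlight);
    }

    // Returns true if a message may be sent now, false if the cap is reached.
    boolean tryStartSend() {
        return permits.tryAcquire();
    }

    // Called when the broker acknowledges a message, freeing one slot.
    void onAcknowledged() {
        permits.release();
    }

    int availableSlots() {
        return permits.availablePermits();
    }
}
```

With a cap of 2, a third send is refused until one of the first two is acknowledged, which is how the back-pressure propagates upstream.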

5.4. Retrying message dispatch

When the Kafka producer receives an error from the server, if it is a transient, recoverable error, the client will retry sending the batch of messages.

This behavior is controlled by retries and retry.backoff.ms parameters.

In addition to this, SmallRye Reactive Messaging will retry individual messages on recoverable errors, depending on the retries and delivery.timeout.ms parameters.

Note that while having retries in a reliable system is a best practice, the max.in.flight.requests.per.connection parameter defaults to 5, meaning that the order of the messages is not guaranteed.

If the message order is a must for your use case, setting max.in.flight.requests.per.connection to 1 will make sure a single batch of messages is sent at a time, at the expense of limiting the throughput of the producer.

For applying retry mechanism on processing errors, see the section on Retrying processing.
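The producer settings discussed in this section could be combined as in the following configuration sketch. The channel name prices-out is reused from the earlier examples, the numeric values are illustrative assumptions rather than recommended defaults, and the Kafka client properties are assumed to be passed through the channel configuration.

```properties
mp.messaging.outgoing.prices-out.retries=5
mp.messaging.outgoing.prices-out.retry.backoff.ms=200
mp.messaging.outgoing.prices-out.delivery.timeout.ms=60000

# Trade throughput for ordering: a single batch in flight at a time
mp.messaging.outgoing.prices-out.max.in.flight.requests.per.connection=1
```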

5.5. Handling Serialization Failures

For the Kafka producer client, serialization failures are not recoverable, so the message dispatch is not retried. In these cases, you may need to apply a failure strategy for the serializer.

To achieve this, you need to create a bean implementing SerializationFailureHandler<T> interface:

@ApplicationScoped

@Identifier("failure-fallback") // Set the name of the failure handler

public class MySerializationFailureHandler

implements SerializationFailureHandler<JsonObject> { // Specify the expected type

@Override

public byte[] decorateSerialization(Uni<byte[]> serialization, String topic, boolean isKey,

String serializer, Object data, Headers headers) {

return serialization

.onFailure().retry().atMost(3)

.await().indefinitely();

}

}

To use this failure handler, the bean must be exposed with the @Identifier qualifier and the connector configuration must specify the attribute mp.messaging.outgoing.$channel.[key|value]-serialization-failure-handler (for key or value serializers).

The handler is called with details of the serialization, including the action represented as Uni<byte[]>.

Note that the method must await on the result and return the serialized byte array.
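For the handler shown above, registered with the identifier failure-fallback, the channel configuration could look like the following sketch. The channel name prices-out is an assumption reused from the earlier examples.

```properties
# Apply the handler to the value serializer of the prices-out channel
mp.messaging.outgoing.prices-out.value-serialization-failure-handler=failure-fallback
```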

5.6. In-memory channels

In some use cases, it is convenient to use the messaging patterns to transfer messages inside the same application. When you don’t connect a channel to a messaging backend like Kafka, everything happens in-memory, and the streams are created by chaining methods together. Each chain is still a reactive stream and enforces the back-pressure protocol.

The framework verifies that the producer/consumer chain is complete, meaning that if the application writes messages into an in-memory channel (using a method with only @Outgoing, or an Emitter), it must also consume the messages from within the application (using a method with only @Incoming or using an unmanaged stream).

5.7. Broadcasting messages on multiple consumers

By default, a channel can be linked to a single consumer, using an @Incoming method or a @Channel reactive stream.

At application startup, channels are verified to form a chain of consumers and producers, with a single consumer and a single producer per channel.

You can override this behavior by setting mp.messaging.$channel.broadcast=true on a channel.

For in-memory channels, the @Broadcast annotation can be used on the @Outgoing method. For example:

import java.util.Random;

import jakarta.enterprise.context.ApplicationScoped;

import org.eclipse.microprofile.reactive.messaging.Incoming;

import org.eclipse.microprofile.reactive.messaging.Outgoing;

import io.smallrye.reactive.messaging.annotations.Broadcast;

@ApplicationScoped

public class MultipleConsumer {

private final Random random = new Random();

@Outgoing("in-memory-channel")

@Broadcast

double generate() {

return random.nextDouble();

}

@Incoming("in-memory-channel")

void consumeAndLog(double price) {

System.out.println(price);

}

@Incoming("in-memory-channel")

@Outgoing("prices2")

double consumeAndSend(double price) {

return price;

}

}

|

Reciprocally, multiple producers on the same channel can be merged by setting |

Repeating the @Outgoing annotation on outbound or processing methods allows another way of dispatching messages to multiple outgoing channels:

import java.util.Random;

import jakarta.enterprise.context.ApplicationScoped;

import org.eclipse.microprofile.reactive.messaging.Outgoing;

@ApplicationScoped

public class MultipleProducers {

private final Random random = new Random();

@Outgoing("generated")

@Outgoing("generated-2")

double priceBroadcast() {

return random.nextDouble();

}

}

In the previous example, the generated price is broadcast to both outbound channels.

The following example selectively sends messages to multiple outgoing channels using the Targeted container object, which maps channel names (keys) to message payloads (values).

import jakarta.enterprise.context.ApplicationScoped;

import org.eclipse.microprofile.reactive.messaging.Incoming;

import org.eclipse.microprofile.reactive.messaging.Outgoing;

import io.smallrye.reactive.messaging.Targeted;

@ApplicationScoped

public class TargetedProducers {

@Incoming("in")

@Outgoing("out1")

@Outgoing("out2")

@Outgoing("out3")

public Targeted process(double price) {

Targeted targeted = Targeted.of("out1", "Price: " + price,

"out2", "Quote: " + price);

if (price > 90.0) {

return targeted.with("out3", price);

}

return targeted;

}

}

Note that the auto-detection for Kafka serializers doesn't work for signatures using Targeted.

For more details on using multiple outgoings, please refer to the SmallRye Reactive Messaging documentation.

5.8. Kafka Transactions

Kafka transactions enable atomic writes to multiple Kafka topics and partitions.

The Kafka connector provides the KafkaTransactions custom emitter for writing Kafka records inside a transaction.

It can be injected like a regular emitter, using @Channel:

import jakarta.enterprise.context.ApplicationScoped;

import org.eclipse.microprofile.reactive.messaging.Channel;

import io.smallrye.mutiny.Uni;

import io.smallrye.reactive.messaging.kafka.KafkaRecord;

import io.smallrye.reactive.messaging.kafka.transactions.KafkaTransactions;

@ApplicationScoped

public class KafkaTransactionalProducer {

@Channel("tx-out-example")

KafkaTransactions<String> txProducer;

public Uni<Void> emitInTransaction() {

return txProducer.withTransaction(emitter -> {

emitter.send(KafkaRecord.of(1, "a"));

emitter.send(KafkaRecord.of(2, "b"));

emitter.send(KafkaRecord.of(3, "c"));

return Uni.createFrom().voidItem();

});

}

}

The function given to the withTransaction method receives a TransactionalEmitter for producing records, and returns a Uni that provides the result of the transaction.

-

If the processing completes successfully, the producer is flushed and the transaction is committed.

-

If the processing throws an exception, returns a failing Uni, or marks the TransactionalEmitter for abort, the transaction is aborted.

Kafka transactional producers require configuring the acks=all client property and a unique transactional.id, which implies enable.idempotence=true.

When Quarkus detects the use of KafkaTransactions for an outgoing channel, it configures these properties on the channel, providing a default value of "${quarkus.application.name}-${channelName}" for the transactional.id property.

Note that for production use, the transactional.id must be unique across all application instances.
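One possible way to make the id unique per instance is to append an instance-specific value. The sketch below assumes that a HOSTNAME environment variable is available and differs between instances, as is typically the case for containers; any other per-instance value would serve the same purpose.

```properties
# Assumption: HOSTNAME is set and unique for each application instance
mp.messaging.outgoing.tx-out-example.transactional.id=${quarkus.application.name}-tx-out-example-${HOSTNAME}
```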

|

While a normal message emitter would support concurrent calls to Note that in Reactive Messaging, the execution of processing methods, is already serialized, unless An example usage can be found in Chaining Kafka Transactions with Hibernate Reactive transactions. |

6. Kafka Request-Reply

The Kafka Request-Reply pattern lets you publish a request record to a Kafka topic and then await a reply record that responds to the initial request.

The Kafka connector provides the KafkaRequestReply custom emitter that implements the requestor (or the client) of the request-reply pattern for Kafka outbound channels.

It can be injected like a regular emitter, using @Channel:

import jakarta.enterprise.context.ApplicationScoped;

import jakarta.ws.rs.POST;

import jakarta.ws.rs.Path;

import jakarta.ws.rs.Produces;

import jakarta.ws.rs.core.MediaType;

import org.eclipse.microprofile.reactive.messaging.Channel;

import io.smallrye.mutiny.Uni;

import io.smallrye.reactive.messaging.kafka.reply.KafkaRequestReply;

@ApplicationScoped

@Path("/kafka")

public class KafkaRequestReplyEmitter {

@Channel("request-reply")

KafkaRequestReply<Integer, String> requestReply;

@POST

@Path("/req-rep")

@Produces(MediaType.TEXT_PLAIN)

public Uni<String> post(Integer request) {

return requestReply.request(request);

}

}

The request method publishes the record to the configured target topic of the outgoing channel, and polls a reply topic (by default, the target topic with -replies suffix) for a reply record.

When the reply is received, the returned Uni is completed with the record value.

The request send operation generates a correlation id and sets a header (by default REPLY_CORRELATION_ID), which it expects to be sent back in the reply record.

The replier can be implemented using a Reactive Messaging processor (see Processing Messages).

For more information on Kafka Request Reply feature and advanced configuration options, see the SmallRye Reactive Messaging Documentation.
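The correlation mechanism can be pictured as a table of pending requests keyed by correlation id. The following JDK-only sketch illustrates the idea; it is not how KafkaRequestReply is implemented, and PendingReplies and its methods are hypothetical names.

```java
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;

// Illustrative model of request-reply correlation; not the connector's implementation.
final class PendingReplies {
    private final Map<String, CompletableFuture<String>> pending = new ConcurrentHashMap<>();

    // Registers a new request and returns its generated correlation id.
    String register() {
        String correlationId = UUID.randomUUID().toString();
        pending.put(correlationId, new CompletableFuture<>());
        return correlationId;
    }

    // Returns the future that completes when the reply for this id arrives, or null if unknown.
    CompletableFuture<String> replyFor(String correlationId) {
        return pending.get(correlationId);
    }

    // Completes the matching request; returns false for unknown correlation ids.
    boolean onReply(String correlationId, String value) {
        CompletableFuture<String> future = pending.remove(correlationId);
        if (future == null) {
            return false;
        }
        future.complete(value);
        return true;
    }
}
```

A reply whose header carries an unknown correlation id matches no pending request and is simply ignored in this model.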

7. Processing Messages

Applications streaming data often need to consume some events from a topic, process them and publish the result to a different topic.

A processor method can be simply implemented using both the @Incoming and @Outgoing annotations:

import org.eclipse.microprofile.reactive.messaging.Incoming;

import org.eclipse.microprofile.reactive.messaging.Outgoing;

import jakarta.enterprise.context.ApplicationScoped;

@ApplicationScoped

public class PriceProcessor {

private static final double CONVERSION_RATE = 0.88;

@Incoming("price-in")

@Outgoing("price-out")

public double process(double price) {

return price * CONVERSION_RATE;

}

}

The parameter of the process method is the incoming message payload, whereas the return value will be used as the outgoing message payload.

Previously mentioned signatures for parameter and return types are also supported, such as Message<T>, Record<K, V>, etc.

You can apply asynchronous stream processing by consuming and returning the reactive stream type Multi<T>:

import jakarta.enterprise.context.ApplicationScoped;

import org.eclipse.microprofile.reactive.messaging.Incoming;

import org.eclipse.microprofile.reactive.messaging.Outgoing;

import io.smallrye.mutiny.Multi;

@ApplicationScoped

public class PriceProcessor {

private static final double CONVERSION_RATE = 0.88;

@Incoming("price-in")

@Outgoing("price-out")

public Multi<Double> process(Multi<Integer> prices) {

return prices.filter(p -> p > 100).map(p -> p * CONVERSION_RATE);

}

}

7.1. Propagating Record Key

When processing messages, you can propagate the incoming record key to the outgoing record.

Enabled with the mp.messaging.outgoing.$channel.propagate-record-key=true configuration, record key propagation produces the outgoing record with the same key as the incoming record.

If the outgoing record already contains a key, it won't be overridden by the incoming record key.

If the incoming record has a null key, the mp.messaging.outgoing.$channel.key property is used.
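The precedence described above can be summarized as a small function. This is an illustrative sketch of the rule as stated in this section, not the connector's code; OutgoingKeyResolver is a hypothetical name, and keys are modeled as strings for simplicity.

```java
final class OutgoingKeyResolver {
    // Precedence: explicit outgoing key, then incoming record key, then the configured default key.
    static String resolve(String outgoingKey, String incomingKey, String configuredKey) {
        if (outgoingKey != null) {
            return outgoingKey;
        }
        if (incomingKey != null) {
            return incomingKey;
        }
        return configuredKey;
    }
}
```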

7.2. Exactly-Once Processing

Kafka Transactions allows managing consumer offsets inside a transaction, together with produced messages. This enables coupling a consumer with a transactional producer in a consume-transform-produce pattern, also known as exactly-once processing.

The KafkaTransactions custom emitter provides a way to apply exactly-once processing to an incoming Kafka message inside a transaction.

The following example includes a batch of Kafka records inside a transaction.

import jakarta.enterprise.context.ApplicationScoped;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.eclipse.microprofile.reactive.messaging.Channel;

import org.eclipse.microprofile.reactive.messaging.Incoming;

import org.eclipse.microprofile.reactive.messaging.Message;

import org.eclipse.microprofile.reactive.messaging.OnOverflow;

import io.smallrye.mutiny.Uni;

import io.smallrye.reactive.messaging.kafka.KafkaRecord;

import io.smallrye.reactive.messaging.kafka.transactions.KafkaTransactions;

@ApplicationScoped

public class KafkaExactlyOnceProcessor {

@Channel("prices-out")

@OnOverflow(value = OnOverflow.Strategy.BUFFER, bufferSize = 500) (3)

KafkaTransactions<Integer> txProducer;

@Incoming("prices-in")

public Uni<Void> emitInTransaction(Message<ConsumerRecords<String, Integer>> batch) { (1)

return txProducer.withTransactionAndAck(batch, emitter -> { (2)

for (ConsumerRecord<String, Integer> record : batch.getPayload()) {

emitter.send(KafkaRecord.of(record.key(), record.value() + 1)); (3)

}

return Uni.createFrom().voidItem();

});

}

}

| 1 | It is recommended to use exactly-once processing along with the batch consumption mode. While it is possible to use it with a single Kafka message, doing so has a significant performance impact. |

| 2 | The consumed message is passed to KafkaTransactions#withTransactionAndAck in order to handle the offset commits and message acks. |

| 3 | The send method writes records to Kafka inside the transaction, without waiting for a send receipt from the broker.

Messages pending to be written to Kafka will be buffered, and flushed before committing the transaction.

It is therefore recommended to configure the @OnOverflow bufferSize so that it fits enough messages, for example max.poll.records, the maximum number of records returned in a batch.

|

When using exactly-once processing, consumed message offset commits are handled by the transaction and therefore the application should not commit offsets through other means.

The consumer should have enable.auto.commit=false (the default) and explicitly set commit-strategy=ignore:

mp.messaging.incoming.prices-in.commit-strategy=ignore

mp.messaging.incoming.prices-in.failure-strategy=ignore

7.2.1. Error handling for the exactly-once processing

The Uni returned from the KafkaTransactions#withTransaction will yield a failure if the transaction fails and is aborted.

The application can choose to handle the error case, but if a failing Uni is returned from the @Incoming method, the incoming channel will effectively fail and stop the reactive stream.

The KafkaTransactions#withTransactionAndAck method acks and nacks the message but will not return a failing Uni.

Nacked messages will be handled by the failure strategy of the incoming channel, (see Error Handling Strategies).

Configuring failure-strategy=ignore simply resets the Kafka consumer to the last committed offsets and resumes the consumption from there.

8. Accessing Kafka clients directly

In rare cases, you may need to access the underlying Kafka clients.

KafkaClientService provides thread-safe access to Producer and Consumer.

import jakarta.enterprise.context.ApplicationScoped;

import jakarta.enterprise.event.Observes;

import jakarta.inject.Inject;

import org.apache.kafka.clients.producer.ProducerRecord;

import io.quarkus.runtime.StartupEvent;

import io.smallrye.reactive.messaging.kafka.KafkaClientService;

import io.smallrye.reactive.messaging.kafka.KafkaConsumer;

import io.smallrye.reactive.messaging.kafka.KafkaProducer;

@ApplicationScoped

public class PriceSender {

@Inject

KafkaClientService clientService;

void onStartup(@Observes StartupEvent startupEvent) {

KafkaProducer<String, Double> producer = clientService.getProducer("generated-price");

producer.runOnSendingThread(client -> client.send(new ProducerRecord<>("prices", 2.4)))

.await().indefinitely();

}

}

|

The |

You can also get the Kafka configuration injected to your application and create Kafka producer, consumer and admin clients directly:

import io.smallrye.common.annotation.Identifier;

import org.apache.kafka.clients.admin.AdminClient;

import org.apache.kafka.clients.admin.AdminClientConfig;

import org.apache.kafka.clients.admin.KafkaAdminClient;

import jakarta.enterprise.context.ApplicationScoped;

import jakarta.enterprise.inject.Produces;

import jakarta.inject.Inject;

import java.util.HashMap;

import java.util.Map;

@ApplicationScoped

public class KafkaClients {

@Inject

@Identifier("default-kafka-broker")

Map<String, Object> config;

@Produces

AdminClient getAdmin() {

Map<String, Object> copy = new HashMap<>();

for (Map.Entry<String, Object> entry : config.entrySet()) {

if (AdminClientConfig.configNames().contains(entry.getKey())) {

copy.put(entry.getKey(), entry.getValue());

}

}

return KafkaAdminClient.create(copy);

}

}

The default-kafka-broker configuration map contains all application properties prefixed with kafka. or KAFKA_.

For more configuration options check out Kafka Configuration Resolution.

9. JSON serialization

Quarkus has built-in capabilities to deal with JSON Kafka messages.

Imagine we have a Fruit data class as follows:

public class Fruit {

public String name;

public int price;

public Fruit() {

}

public Fruit(String name, int price) {

this.name = name;

this.price = price;

}

}

We want to use it to receive messages from Kafka, apply a price transformation, and send the messages back to Kafka.

import io.smallrye.reactive.messaging.annotations.Broadcast;

import org.eclipse.microprofile.reactive.messaging.Incoming;

import org.eclipse.microprofile.reactive.messaging.Outgoing;

import jakarta.enterprise.context.ApplicationScoped;

/**

* A bean consuming data from the "fruit-in" channel and applying some price conversion.

* The result is pushed to the "fruit-out" channel.

*/

@ApplicationScoped

public class FruitProcessor {

private static final double CONVERSION_RATE = 0.88;

@Incoming("fruit-in")

@Outgoing("fruit-out")

@Broadcast

public Fruit process(Fruit fruit) {

fruit.price = (int) (fruit.price * CONVERSION_RATE); // explicit cast: price is an int, the rate is a double

return fruit;

}

}

To do this, we need to set up JSON serialization with Jackson or JSON-B.

With JSON serialization correctly configured, you can also use Publisher<Fruit> and Emitter<Fruit>.

9.1. Serializing via Jackson

Quarkus has built-in support for JSON serialization and deserialization based on Jackson.

It will also generate the serializer and deserializer for you, so you do not have to configure anything.

When generation is disabled, you can use the provided ObjectMapperSerializer and ObjectMapperDeserializer as explained below.

There is an existing ObjectMapperSerializer that can be used to serialize all data objects via Jackson.

You may create an empty subclass if you want to use Serializer/deserializer autodetection.

By default, the ObjectMapperSerializer serializes null as the "null" String. This can be customized by setting the Kafka configuration property json.serialize.null-as-null=true, which serializes null as null.

This is handy when using a compacted topic, as a null value acts as a tombstone marking which messages to delete during the compaction phase.
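The difference between the two behaviours can be illustrated without Jackson. The sketch below is a conceptual stand-in, not the ObjectMapperSerializer code: the serialize method and its nullAsNull flag are hypothetical, and value.toString() merely takes the place of real JSON encoding.

```java
import java.nio.charset.StandardCharsets;

class NullPayloadSketch {

    // Hypothetical stand-in for a JSON serializer; only the null handling is modelled.
    static byte[] serialize(Object value, boolean nullAsNull) {
        if (value == null) {
            // Without the flag, the payload is the four characters n-u-l-l.
            // With the flag, the record value itself is null (a tombstone).
            return nullAsNull ? null : "null".getBytes(StandardCharsets.UTF_8);
        }
        // Placeholder for real JSON encoding.
        return value.toString().getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] literal = serialize(null, false);
        byte[] tombstone = serialize(null, true);
        System.out.println(literal.length);    // 4
        System.out.println(tombstone == null); // true
    }
}
```

Only the second form yields a record with a null value, which is what a compacted topic treats as a tombstone.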


The corresponding deserializer class needs to be subclassed.

So, let’s create a FruitDeserializer that extends the ObjectMapperDeserializer.

package com.acme.fruit.jackson;

import io.quarkus.kafka.client.serialization.ObjectMapperDeserializer;

public class FruitDeserializer extends ObjectMapperDeserializer<Fruit> {

public FruitDeserializer() {

super(Fruit.class);

}

}

Finally, configure your channels to use the Jackson serializer and deserializer.

# Configure the Kafka source (we read from it)

mp.messaging.incoming.fruit-in.topic=fruit-in

mp.messaging.incoming.fruit-in.value.deserializer=com.acme.fruit.jackson.FruitDeserializer

# Configure the Kafka sink (we write to it)

mp.messaging.outgoing.fruit-out.topic=fruit-out

mp.messaging.outgoing.fruit-out.value.serializer=io.quarkus.kafka.client.serialization.ObjectMapperSerializer

Now, your Kafka messages will contain a Jackson serialized representation of your Fruit data object.

In this case, the deserializer configuration is not necessary as the Serializer/deserializer autodetection is enabled by default.

If you want to deserialize a list of fruits, you need to create a deserializer with a Jackson TypeReference denoting the generic collection used.

package com.acme.fruit.jackson;

import java.util.List;

import com.fasterxml.jackson.core.type.TypeReference;

import io.quarkus.kafka.client.serialization.ObjectMapperDeserializer;

public class ListOfFruitDeserializer extends ObjectMapperDeserializer<List<Fruit>> {

public ListOfFruitDeserializer() {

super(new TypeReference<List<Fruit>>() {});

}

}

9.2. Serializing via JSON-B

First, you need to include the quarkus-jsonb extension.

<dependency>

<groupId>io.quarkus</groupId>

<artifactId>quarkus-jsonb</artifactId>

</dependency>

implementation("io.quarkus:quarkus-jsonb")

There is an existing JsonbSerializer that can be used to serialize all data objects via JSON-B.

You may create an empty subclass if you want to use Serializer/deserializer autodetection.

By default, the JsonbSerializer serializes null as the "null" String. This can be customized by setting the Kafka configuration property json.serialize.null-as-null=true, which serializes null as null.

This is handy when using a compacted topic, as a null value acts as a tombstone marking which messages to delete during the compaction phase.


The corresponding deserializer class needs to be subclassed.

So, let’s create a FruitDeserializer that extends the generic JsonbDeserializer.

package com.acme.fruit.jsonb;

import io.quarkus.kafka.client.serialization.JsonbDeserializer;

public class FruitDeserializer extends JsonbDeserializer<Fruit> {

public FruitDeserializer() {

super(Fruit.class);

}

}

Finally, configure your channels to use the JSON-B serializer and deserializer.

# Configure the Kafka source (we read from it)

mp.messaging.incoming.fruit-in.connector=smallrye-kafka

mp.messaging.incoming.fruit-in.topic=fruit-in

mp.messaging.incoming.fruit-in.value.deserializer=com.acme.fruit.jsonb.FruitDeserializer

# Configure the Kafka sink (we write to it)

mp.messaging.outgoing.fruit-out.connector=smallrye-kafka

mp.messaging.outgoing.fruit-out.topic=fruit-out

mp.messaging.outgoing.fruit-out.value.serializer=io.quarkus.kafka.client.serialization.JsonbSerializer

Now, your Kafka messages will contain a JSON-B serialized representation of your Fruit data object.

If you want to deserialize a list of fruits, you need to create a deserializer with a Type denoting the generic collection used.

package com.acme.fruit.jsonb;

import java.lang.reflect.Type;

import java.util.ArrayList;

import java.util.List;

import io.quarkus.kafka.client.serialization.JsonbDeserializer;

public class ListOfFruitDeserializer extends JsonbDeserializer<List<Fruit>> {

public ListOfFruitDeserializer() {

super(new ArrayList<Fruit>() {}.getClass().getGenericSuperclass());

}

}
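The constructor above relies on a reflection idiom: an anonymous subclass records its generic superclass, so the element type survives erasure. The following plain-Java sketch shows what the resulting Type contains; the nested Fruit class and the listOfFruitType helper are stand-ins introduced here for illustration only.

```java
import java.lang.reflect.ParameterizedType;
import java.lang.reflect.Type;
import java.util.ArrayList;

class GenericTypeCaptureSketch {

    // Stand-in for the guide's Fruit class.
    static class Fruit {
        public String name;
        public int price;
    }

    // Hypothetical helper: the anonymous subclass "{}" keeps ArrayList<Fruit>
    // as its generic superclass, which reflection can read back at runtime.
    static Type listOfFruitType() {
        return new ArrayList<Fruit>() {}.getClass().getGenericSuperclass();
    }

    public static void main(String[] args) {
        ParameterizedType type = (ParameterizedType) listOfFruitType();
        // The raw type is ArrayList and the single type argument is Fruit.
        System.out.println(type.getRawType() == ArrayList.class);
        System.out.println(type.getActualTypeArguments()[0] == Fruit.class);
    }
}
```

Note that the captured type is ArrayList<Fruit> rather than List<Fruit>, which is presumably why the example builds it from a concrete ArrayList.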

If you don’t want to create a deserializer for each data object, you can use the generic io.vertx.kafka.client.serialization.JsonObjectDeserializer, which deserializes to an io.vertx.core.json.JsonObject. The corresponding serializer can also be used: io.vertx.kafka.client.serialization.JsonObjectSerializer.

10. Avro Serialization

This is described in a dedicated guide: Using Apache Kafka with Schema Registry and Avro.

11. JSON Schema Serialization

This is described in a dedicated guide: Using Apache Kafka with Schema Registry and JSON Schema.

12. Serializer/deserializer autodetection

When using Quarkus Messaging with Kafka (io.quarkus:quarkus-messaging-kafka), Quarkus can often automatically detect the correct serializer and deserializer class.

This autodetection is based on declarations of @Incoming and @Outgoing methods, as well as injected @Channels.

For example, if you declare

@Outgoing("generated-price")

public Multi<Integer> generate() {

...

}

and your configuration indicates that the generated-price channel uses the smallrye-kafka connector, then Quarkus will automatically set the value.serializer to Kafka’s built-in IntegerSerializer.

Similarly, if you declare

@Incoming("my-kafka-records")

public void consume(Record<Long, byte[]> record) {

...

}

and your configuration indicates that the my-kafka-records channel uses the smallrye-kafka connector, then Quarkus will automatically set the key.deserializer to Kafka’s built-in LongDeserializer, as well as the value.deserializer to ByteArrayDeserializer.

Finally, if you declare

@Inject

@Channel("price-create")

Emitter<Double> priceEmitter;

and your configuration indicates that the price-create channel uses the smallrye-kafka connector, then Quarkus will automatically set the value.serializer to Kafka’s built-in DoubleSerializer.
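Conceptually, the three examples above behave like a lookup from payload type to serializer class name. The sketch below models that idea in plain Java; it is not how Quarkus implements autodetection, the class and method names are hypothetical, and only boxed types are listed for brevity. The class names refer to the serializers shipped in Kafka's org.apache.kafka.common.serialization package.

```java
import java.util.Map;
import java.util.Optional;
import java.util.UUID;

class SerializerLookupSketch {

    private static final String PKG = "org.apache.kafka.common.serialization.";

    // Illustrative subset: payload type -> name of Kafka's built-in serializer.
    static final Map<Class<?>, String> BUILT_IN = Map.of(
            Short.class, PKG + "ShortSerializer",
            Integer.class, PKG + "IntegerSerializer",
            Long.class, PKG + "LongSerializer",
            Float.class, PKG + "FloatSerializer",
            Double.class, PKG + "DoubleSerializer",
            byte[].class, PKG + "ByteArraySerializer",
            String.class, PKG + "StringSerializer",
            UUID.class, PKG + "UUIDSerializer");

    // Hypothetical helper: empty when the type has no built-in serializer in this table.
    static Optional<String> serializerFor(Class<?> payloadType) {
        return Optional.ofNullable(BUILT_IN.get(payloadType));
    }

    public static void main(String[] args) {
        // Multi<Integer> on an outgoing channel corresponds to the Integer entry.
        System.out.println(serializerFor(Integer.class).orElse("none"));
        // A type outside the table yields no match.
        System.out.println(serializerFor(Object.class).orElse("none"));
    }
}
```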

The full set of types supported by the serializer/deserializer autodetection is:

-

short and java.lang.Short

-

int and java.lang.Integer

-

long and java.lang.Long

-

float and java.lang.Float

-

double and java.lang.Double

-

byte[]

-

java.lang.String

-

java.util.UUID

-

java.nio.ByteBuffer

-

org.apache.kafka.common.utils.Bytes

-

io.vertx.core.buffer.Buffer

-

io.vertx.core.json.JsonObject

-

io.vertx.core.json.JsonArray

-

classes for which a direct implementation of org.apache.kafka.common.serialization.Serializer<T> / org.apache.kafka.common.serialization.Deserializer<T> is present.

    -

    the implementation needs to specify the type argument T as the (de-)serialized type.

-

classes generated from Avro schemas, as well as Avro GenericRecord, if Confluent or Apicurio Registry serde is present

    -

    in case multiple Avro serdes are present, serializer/deserializer must be configured manually for Avro-generated classes, because autodetection is impossible

    -

    see Using Apache Kafka with Schema Registry and Avro for more information about using Confluent or Apicurio Registry libraries

-

classes for which a subclass of ObjectMapperSerializer / ObjectMapperDeserializer is present, as described in Serializing via Jackson

    -

    it is technically not needed to subclass ObjectMapperSerializer, but in such case, autodetection isn’t possible

-

classes for which a subclass of JsonbSerializer / JsonbDeserializer is present, as described in Serializing via JSON-B

    -

    it is technically not needed to subclass JsonbSerializer, but in such case, autodetection isn’t possible

If a serializer/deserializer is set by configuration, it won’t be replaced by the autodetection.

In case you have any issues with serializer autodetection, you can switch it off completely by setting quarkus.messaging.kafka.serializer-autodetection.enabled=false.

If you find you need to do this, please file a bug in the Quarkus issue tracker so we can fix whatever problem you have.

13. JSON Serializer/deserializer generation

Quarkus automatically generates serializers and deserializers for channels where:

-

the serializer/deserializer is not configured

-

the autodetection did not find a matching serializer/deserializer

It uses Jackson underneath.

This generation can be disabled using:

quarkus.messaging.kafka.serializer-generation.enabled=false
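Taken together with the autodetection rules, the precedence can be summarized as: explicit configuration first, then autodetection, then generation if it is enabled. The sketch below models that order in plain Java. It is a conceptual summary rather than Quarkus code; the resolve method and its parameters are hypothetical, and what happens when nothing resolves is not covered by this guide, so the sketch simply returns an empty result.

```java
import java.util.Optional;

class SerializerResolutionSketch {

    // Hypothetical model of the precedence described in the text.
    // configured / autodetected are class names, or null when absent.
    static Optional<String> resolve(String configured,
                                    String autodetected,
                                    boolean generationEnabled,
                                    String generatedName) {
        if (configured != null) {
            return Optional.of(configured);   // configuration is never replaced
        }
        if (autodetected != null) {
            return Optional.of(autodetected); // a matching serializer was found
        }
        // Generation only applies when neither of the above produced a result.
        return generationEnabled ? Optional.of(generatedName) : Optional.empty();
    }

    public static void main(String[] args) {
        // Class names below are illustrative placeholders.
        System.out.println(resolve("com.acme.Configured", "com.acme.Detected", true, "com.acme.Generated"));
        System.out.println(resolve(null, null, false, "com.acme.Generated"));
    }
}
```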

Generation does not support collections such as List<Fruit>.

Refer to Serializing via Jackson to write your own serializer/deserializer for this case.


14. Using Schema Registry