Ship.Cars leverages Quarkus to reach its goals

Ship.Cars is a revolutionary partner in auto transport logistics, offering customizable software solutions specially tailored to accommodate all your car hauling requirements. Our tools are impeccably designed to amplify your business’s ability to streamline, automate, and organize the entire car hauling process, from start to finish.

Through the development of various products, Ship.Cars has helped the automotive logistics industry to transition into the modern age. Our industry solutions, such as LoadMate and LoadMate Pro, cater to the various needs of dealerships, rental car companies, and other shippers. Meanwhile, innovations like our SmartHaul TMS and SmartHaul APP have become indispensable tools for our car haulers to book and manage their loads.

Contending with challenges

As a product-centric organization, we utilize the microservice paradigm to deliver a diverse array of functionality via numerous distinct software products. Thus far, we’ve developed over 50 microservices. Each of these not only meets the requisite functional requirements but also adheres to rigorous technical specifications. These specifications ensure seamless provisioning of services, consistent performance under load, and easy identification and resolution of any arising issues.

We have built these services over a long period of time using various frameworks, including Quarkus, Spring Boot, and Django. Each framework has its own strengths and weaknesses. Over time, however, we’ve determined that Quarkus best fulfills a large portion of our requirements. This explains our current shift from Django to Quarkus for a significant portion of our development.

As Ship.Cars deploys its microservices on Kubernetes within the Google Cloud platform, we continually seek efficient ways to scale our development capacity while reducing cloud resource consumption. Because cloud costs are always a priority, we strive to optimize memory and processor use in the cloud.

The main challenges we face are:

- Lower cloud resource consumption: Multiple active microservices can consume a significant amount of memory and CPU, escalating costs rapidly. Hence, effective management of cloud resources is crucial.
- Faster boot-up times: In a microservices architecture, it’s important for services to stop, start, and scale swiftly. Slow boot-up times can have a severe impact on system performance and responsiveness.
- Streamlined microservices development: Building and ensuring interoperability within microservices can be complex, requiring deft management and specialized tooling.
- Resilience and fault tolerance: Microservices must be resilient and capable of quick recovery from unexpected failures. Implementing such fault tolerance mechanisms, however, can be challenging.
- Service discovery: The ability to discover and communicate between services becomes critical as their number increases. Traditional hard-coded endpoints do not scale well in these scenarios.
- Event-driven microservices: Implementing an event-driven architectural model in microservices enables distinct services to communicate asynchronously. Yet, orchestrating this can be difficult.
- Reactive and imperative programming: Selecting an appropriate programming model for the cloud, especially one that supports scalability and system responsiveness, can be daunting.

Quarkus addresses each of these challenges:

- Lower cloud resource consumption: Known for their high memory usage, traditional Java applications can get expensive in a cloud environment where resources cost money. Quarkus significantly reduces the memory footprint of applications, leading to more efficient cloud resource management.
- Faster boot-up times: Slow startup times are quite common with traditional Java applications, which is a particular problem in the cloud, where applications need to scale up and down quickly. Quarkus drastically improves startup performance, with applications often starting in under a second.
- Streamlined microservices development: Quarkus has been designed to work with popular Java standards and technologies such as Eclipse MicroProfile, Jakarta EE, OpenTelemetry, Hibernate, etc., simplifying the development process and reducing the time and complexity involved.
- Resilience and fault tolerance: Quarkus employs the MicroProfile Fault Tolerance specification to provide features like timeout, retry, bulkhead, circuit breaker, and fallback. These features render your microservices more resilient and fault-tolerant.
- Service discovery: Quarkus supports Kubernetes service discovery natively, allowing services to discover and communicate with each other in a reliable manner.
- Event-driven microservices: Quarkus supports event-driven architecture, enabling services to communicate through events, thereby reducing the complexity and coupling between the services.
- Reactive and imperative programming: Quarkus gives developers the freedom to use reactive or imperative programming models or even combine both in the same application, creating a perfect solution for scalability and system responsiveness.
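To make the retry and fallback features from the resilience item more concrete, here is a minimal plain-Java sketch of the behaviour they describe. It is a hand-rolled illustration, not the MicroProfile Fault Tolerance API: in a Quarkus service the same intent is normally declared with the specification's `@Retry` and `@Fallback` annotations rather than written by hand. The class name `RetrySketch`, the method `callWithRetry`, and the example messages are hypothetical.

```java
import java.util.function.Supplier;

// Hypothetical, hand-written illustration of "retry, then fall back".
// It is NOT the MicroProfile Fault Tolerance implementation.
class RetrySketch {

    /**
     * Invokes the action up to maxAttempts times. If every attempt throws
     * a RuntimeException, the fallback supplier provides the result instead.
     */
    public static <T> T callWithRetry(Supplier<T> action, int maxAttempts, Supplier<T> fallback) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.get();
            } catch (RuntimeException e) {
                // Treat the failure as transient and try again.
            }
        }
        // All attempts failed: degrade gracefully instead of propagating the error.
        return fallback.get();
    }

    public static void main(String[] args) {
        int[] calls = {0};

        // Simulated remote call that fails twice before succeeding.
        String result = callWithRetry(() -> {
            if (++calls[0] < 3) {
                throw new IllegalStateException("transient failure");
            }
            return "load booked";
        }, 3, () -> "load queued for later");

        System.out.println(result + " after " + calls[0] + " calls");
        // prints "load booked after 3 calls"
    }
}
```

The design point is that the caller always receives a usable answer: a transient failure is absorbed by the retries, and a persistent one is absorbed by the fallback.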

Tackling cloud resource consumption

One of our organizational goals is to reduce costs without sacrificing the platform’s performance, so that we can ensure a premium user experience. However, traditional JVM-based services often present challenges like substantial memory footprints, extended startup times, and high CPU usage. These problems not only impact technical aspects but also have financial implications, significantly affecting the overall cost of running and maintaining software solutions.

Native images are standalone executables that include both the application code and the necessary runtime components. With the advent of GraalVM, a high-performance, polyglot virtual machine able to run applications written in different programming languages, the concept of native images has gained popularity.

-

Faster startup time: As pre-compiled entities, native images can start incredibly quickly, often in milliseconds. This aspect is hugely beneficial when applications need to start and stop almost instantly, like in serverless functions or cloud-based microservices architectures. For instance, one of our microservices,

native powered by Quarkus 3.2.7.Final, starts in just 0.677s. -

Lower memory footprint: Applications' memory footprints can be significantly reduced with native images as they only include the runtime components actually used by the applications. This efficiency is important in cloud environments where resource usage directly affects costs.

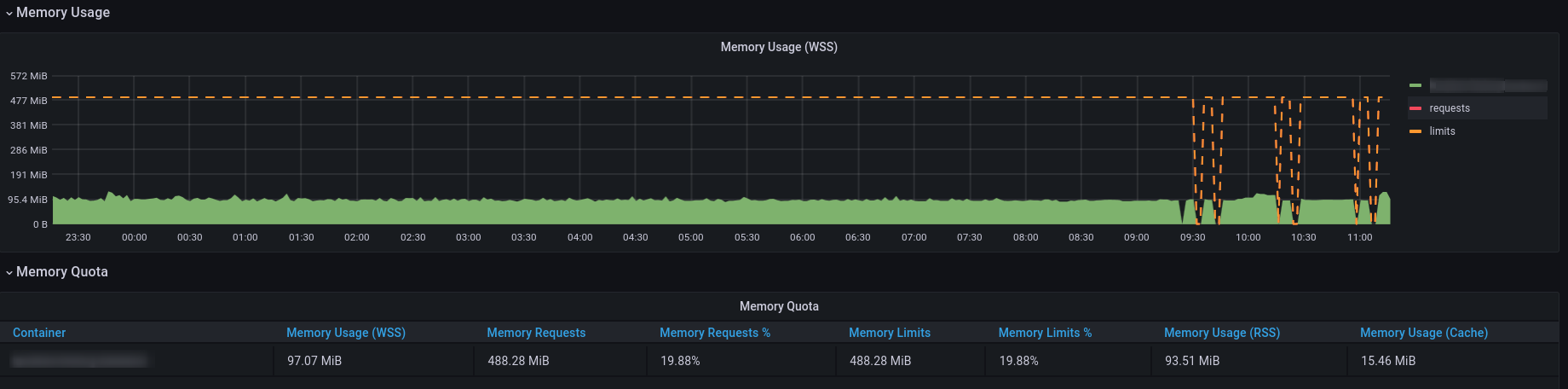

Real service memory usage

-

Easier distribution: As standalone executables, native images can be easily distributed and run on any environment without requiring the installation of a separate runtime.

-

Reduced container size: Being fully self-contained, the container images for native images are more efficient to distribute due to their reduced size. This leads to faster start-up times in containerized environments like Kubernetes. For example, the size comparison between

Quarkus Native (85.1 MB),Quarkus Non-Native (648.4 MB)andSpring Boot (861.9 MB)provides a clear picture of the difference in resource efficiency between them.
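The three container sizes quoted above can be turned into ratios with a few lines of arithmetic. The sketch below only uses the figures from this article; the class and method names (`ImageSizeComparison`, `timesSmaller`, `reductionPercent`) are hypothetical helpers introduced for illustration.

```java
import java.util.Locale;

// Hypothetical helper that compares the container sizes quoted in the article.
class ImageSizeComparison {

    /** How many times larger the baseline image is than the native image. */
    public static double timesSmaller(double baselineMb, double nativeMb) {
        return baselineMb / nativeMb;
    }

    /** Size reduction of the native image relative to the baseline, in percent. */
    public static double reductionPercent(double baselineMb, double nativeMb) {
        return (1.0 - nativeMb / baselineMb) * 100.0;
    }

    public static void main(String[] args) {
        double quarkusNativeMb = 85.1;  // Quarkus Native, from the article
        double quarkusJvmMb = 648.4;    // Quarkus Non-Native, from the article
        double springBootMb = 861.9;    // Spring Boot, from the article

        String[] names = {"Quarkus Non-Native", "Spring Boot"};
        double[] sizes = {quarkusJvmMb, springBootMb};

        for (int i = 0; i < names.length; i++) {
            System.out.println(String.format(Locale.ROOT,
                    "%s: %.1fx larger than native (%.1f%% reduction)",
                    names[i],
                    timesSmaller(sizes[i], quarkusNativeMb),
                    reductionPercent(sizes[i], quarkusNativeMb)));
        }
        // prints:
        // Quarkus Non-Native: 7.6x larger than native (86.9% reduction)
        // Spring Boot: 10.1x larger than native (90.1% reduction)
    }
}
```

In other words, the native container image is roughly an eighth of the size of the non-native Quarkus image and roughly a tenth of the size of the Spring Boot image.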

With Quarkus, you can compile your application into a native image using the GraalVM native-image compiler. This brings swift startup times and lower resource consumption to Java applications on cloud platforms, in containers, and in serverless architectures.

Optimizing developer productivity

Quarkus offers several features that improve developer productivity:

- Live Coding: With no separate build and deploy steps, developers can test changes to the code instantaneously.
- Zero configuration with Dev Services: Quarkus can automatically configure some services for development and testing purposes, enhancing efficiency.
- Continuous testing: Continuous testing is available via the command line and the Dev UI, enhancing the quality of the end product without depending on third-party tools and processes.
- Dev UI: Developers can configure extensions, monitor the application, and test components with great ease.
- Unified config: All of the application’s configurations are consolidated in one place, improving accessibility.
- Standards-based

Embracing Quarkus extensions

Quarkus extensions are pre-configured feature sets designed to simplify several common tasks during application development. They offer an efficient way to bring new capabilities or direct integrations into your project with minimum effort.

In our organization, we managed to implement our internal extensions swiftly, effectively addressing the maintenance issues and configuration incompatibilities we had encountered earlier while trying to create native images. Today, we benefit from an extension hub that resolves those earlier concerns and enhances our productivity.

While Quarkus extensions are powerful tools offering deep integration, optimization, and enhanced developer experience, it’s essential to weigh the trade-offs and consider if simpler solutions like standard JAR libraries might suit the need better.

Looking ahead

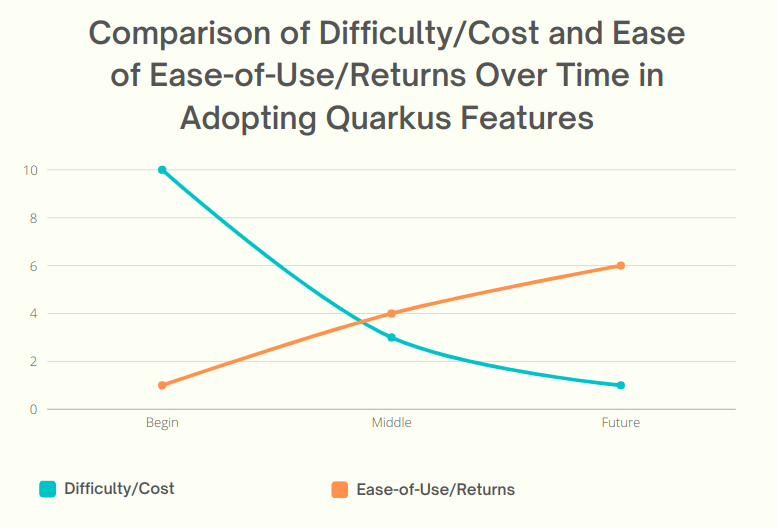

With the graph above, I want to illustrate the relationship between the process of adopting Quarkus and the subsequent outcomes over time.

On the Y-axis, we plot difficulty or cost in story points per sprint, reflecting the relative effort, time, and resources spent on adopting Quarkus features. The same axis also shows ease of use and returns, which take into account metrics such as decreased debugging time, faster feature development, and improvements in team productivity after a successful implementation.

At the outset (tagged as "Begin" on the X-axis), both difficulty and cost are at their peak, signifying a challenging initial phase. As we move along the timeline from "Begin" through "Middle" and on to "Future", we see a notable drop in story points per sprint, indicating reduced difficulty and cost. In parallel, ease of use and returns start at a comparatively low point and rise gradually toward "Middle" and "Future", showing a tangible increase in productivity and other gains from adopting and integrating Quarkus features into our practices.

By the time we reach "Future", we see a substantial decrease in difficulty and cost, while the ease-of-use and returns have considerably increased. This dual progression effectively highlights the significant benefits of investing in the adoption of Quarkus, despite the initial challenges.

Investing in Quarkus is a strategic maneuver towards creating efficient, scalable, and modern applications aptly suited for the cloud era. With its robust capabilities and supportive community, Quarkus is well-positioned to pioneer the future of cloud-native application development. The decision to adopt Quarkus is a significant leap towards optimizing for efficiency, scalability, and cutting-edge application performance that will provide us with a considerable competitive edge in the rapidly evolving tech landscape.