Welcome

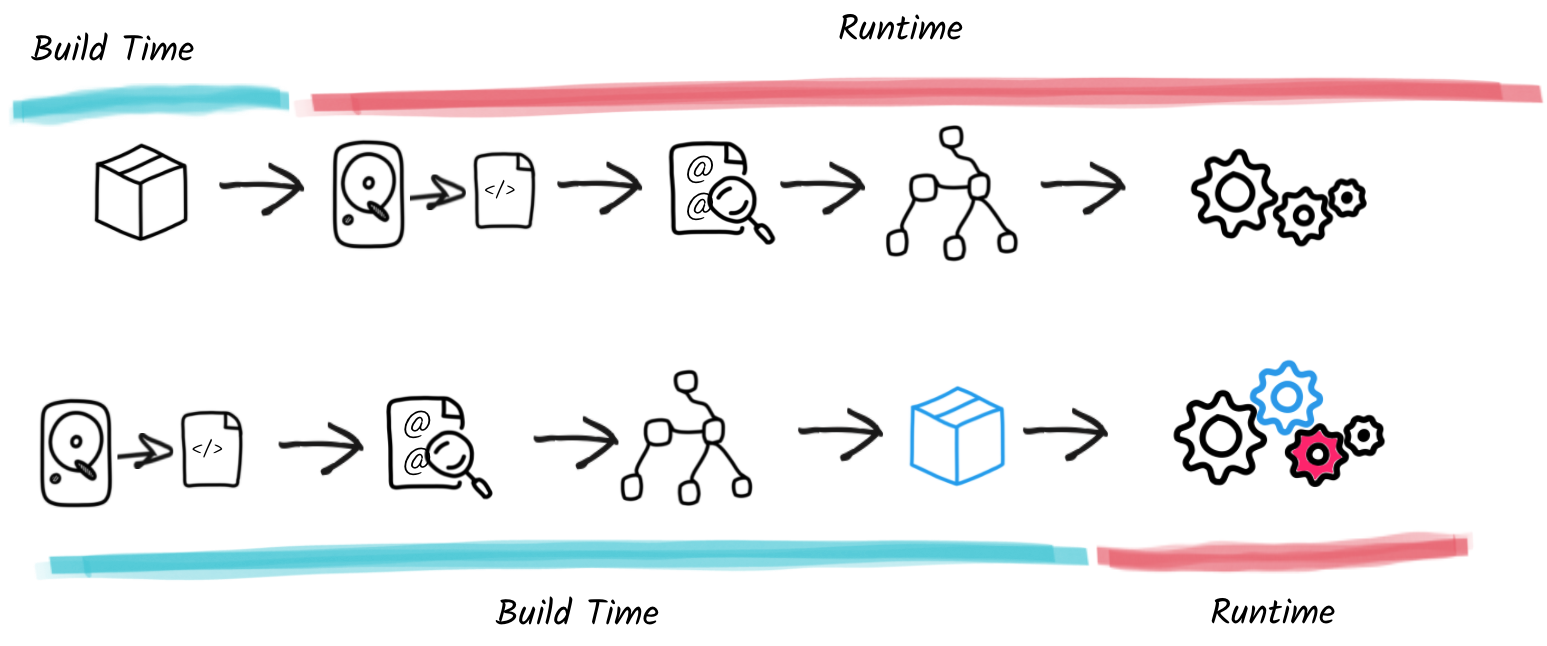

Let’s start from the beginning. Quarkus. What’s Quarkus? That’s a pretty good question and probably a good start. If you go to the Quarkus web site, you’ll read that Quarkus is "A Kubernetes Native Java stack tailored for OpenJDK HotSpot & GraalVM, crafted from the best of breed Java libraries and standards." This description is somewhat unclear but does an outstanding job at using bankable keywords. It’s also written: "Supersonic Subatomic Java." This is a way of saying that Quarkus is really fast, and it’s really light. But what does Quarkus do?

In practice, Quarkus is a stack to develop distributed systems and modern applications in Java, Kotlin, or Scala. Quarkus applications are tailored for the Cloud, containers, and Kubernetes. That does not mean you can’t use Quarkus in other environments, there are no limits, but the principles infused in Quarkus have made containerization of applications more efficient. In this workshop, we will explain what Quarkus is and because the best way to understand Quarkus is to use it, build a set of microservices with it. Again, Quarkus is not limited to microservices, but it’s a generally well-understood type of architecture.

This workshop offers attendees an intro-level, hands-on session with Quarkus, from the first line of code to making services, to consuming them, and finally to assembling everything in a consistent system. But, what are we going to build? Well, it’s going to be a set of microservices:

-

Using Quarkus

-

Using blocking and non-blocking HTTP invocations

-

With some parts of the dark side of microservices (resilience, health, etc)

-

Use an event-based architecture using Apache Kafka

-

Use OpenAI to introduce some artificial intelligence

-

Deploy the entire application to Azure Container Apps

-

Answer the ultimate question: are super-heroes stronger than super-villains?

This workshop is a BYOL (Bring Your Own Laptop) session, so bring your Windows, OSX, or Linux laptop. You just need JDK 17 on your machine and Docker (having Apache Maven 3.9.x installed is optional as you will rely on the Apache Maven Wrapper). On Mac and Windows, Docker for x is recommended instead of the Docker toolbox setup. On Windows it is also recommended to have WSL (Windows Subsystem for Linux) installed.

What you are going to learn:

-

What is Quarkus, and how you can use it

-

How to build an HTTP endpoint (REST API) with Quarkus

-

How to access a relational database

-

How you can use Swagger and OpenAPI

-

How you test your microservice

-

How to build a reactive microservice, including reactive data access

-

How you improve the resilience of your service

-

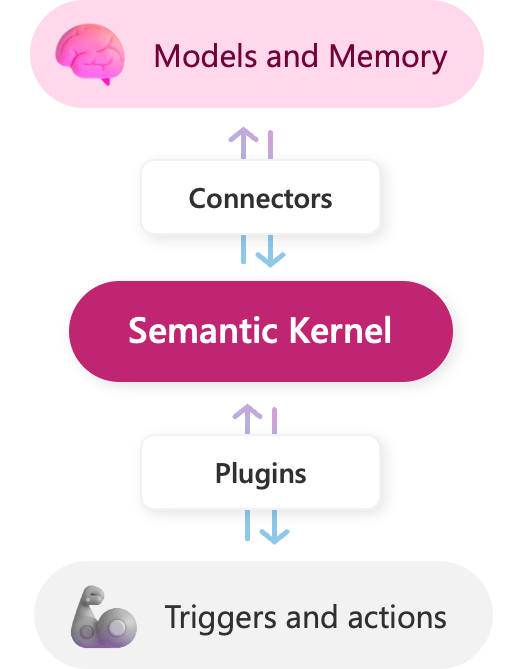

How to invoke OpenAI/Azure OpenAI APIs using Semantic Kernel

-

How to build event-driven microservices with Kafka

-

How to build use contract testing so we are sure our microservices are fully functional

-

How to build native executable

-

How to containerize our microservices

-

How to deploy the microservices to Kubernetes

-

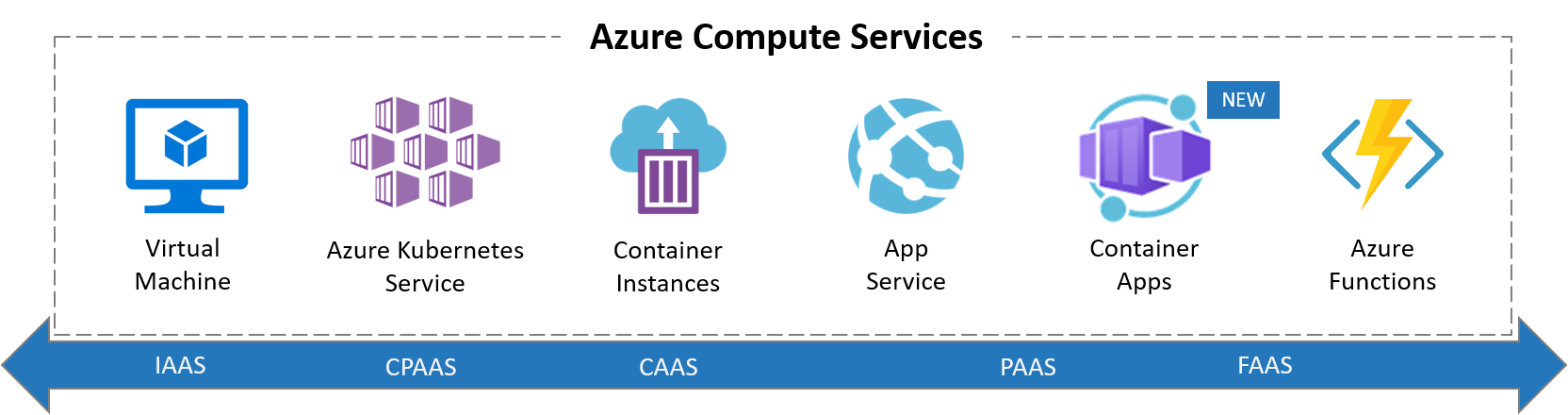

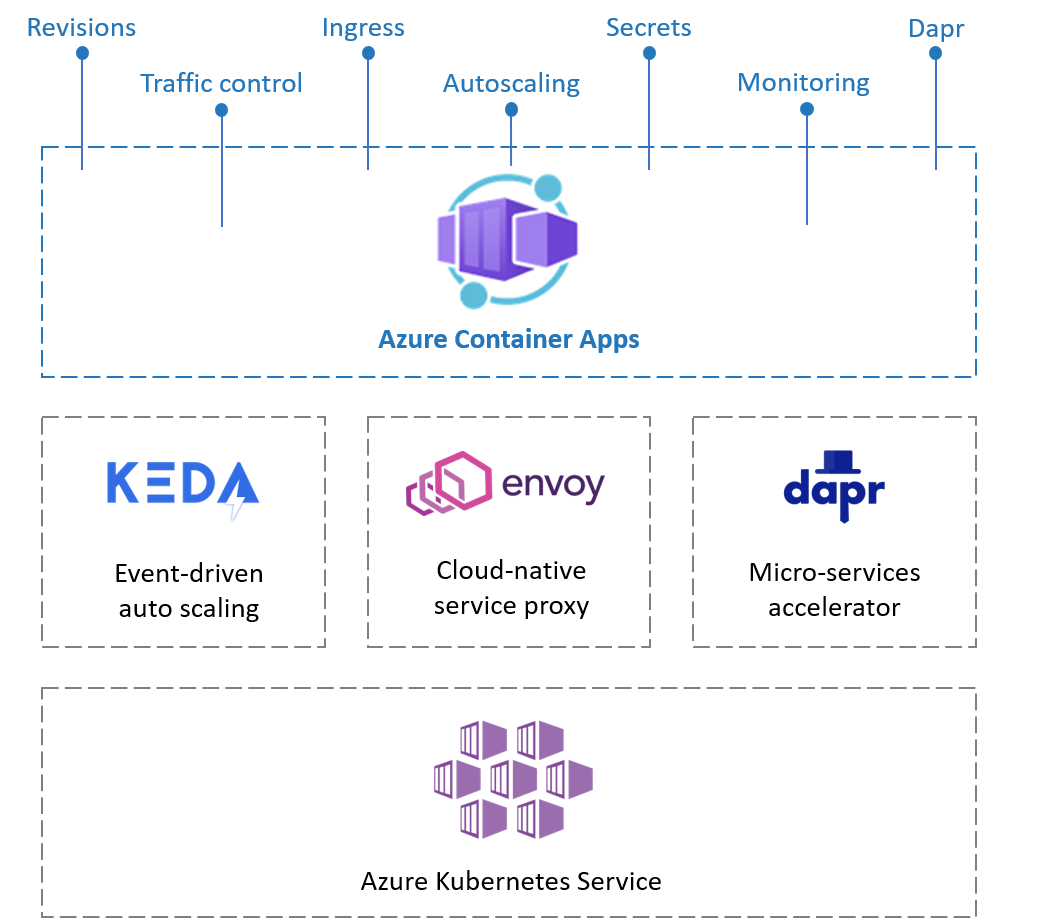

What is Azure Container Apps

-

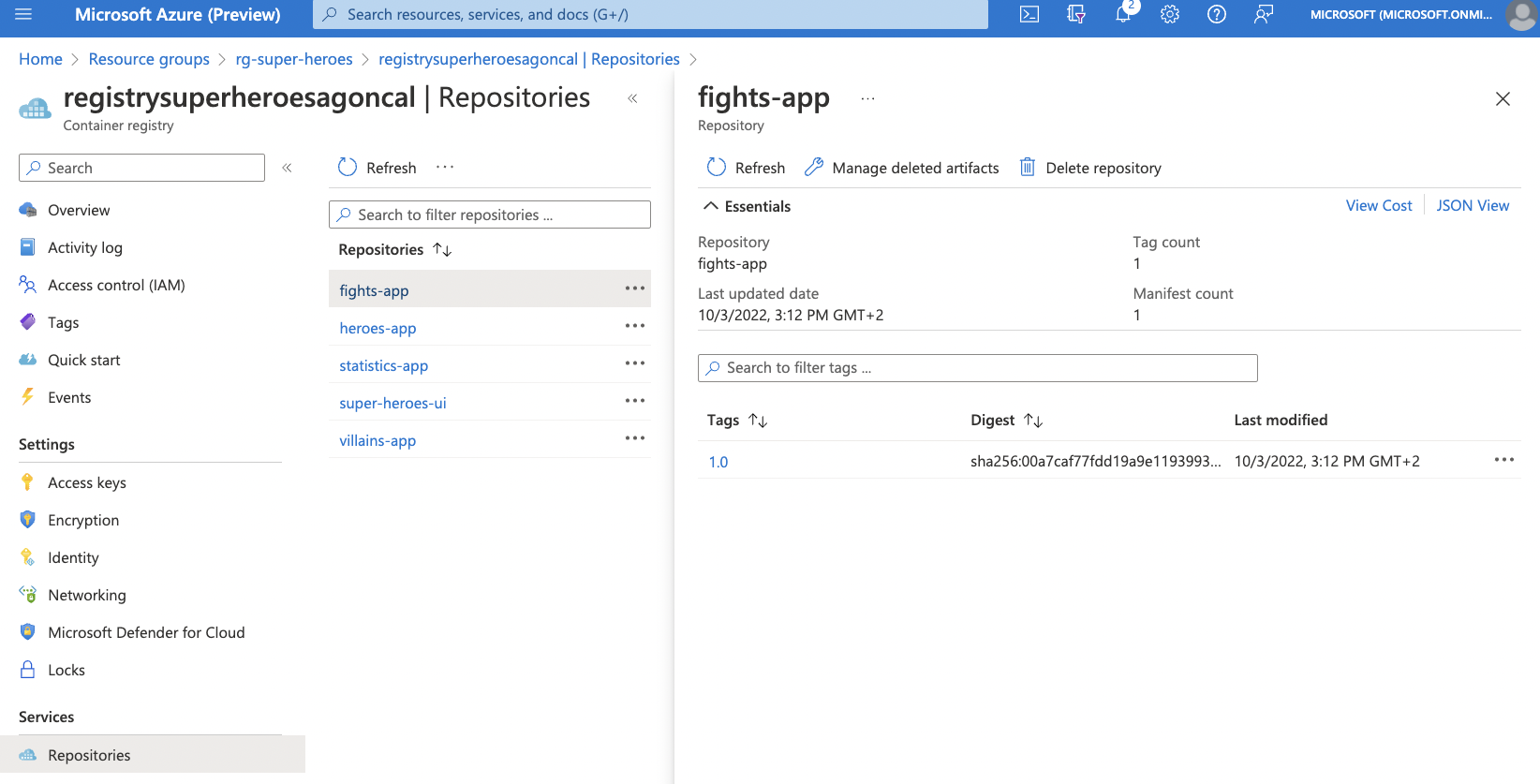

How to push the Docker images to Azure Registry

-

How to create managed services in Azure (Postgres database, Kafka)

-

How to deploy microservices to Azure Container Apps

-

How to configure Docker images in Azure Container Apps

-

How to use Quarkus CLI to create a command-line application to load stress our microservices

-

How to extend Quarkus with extensions

-

And much more!

Ready? Here we go! Check the workshop at https://quarkus.io/quarkus-workshops/super-heroes/ or flash the QR Code if you don’t want to type.

Presenting the Workshop

This workshop should give you a practical introduction to Quarkus. You will practice all the needed tools to develop an entire microservice architecture, mixing classical HTTP, reactive and event-based microservices. You will finish by extending the capabilities of Quarkus and learn more about the ability to create native executables.

The idea is that you leave this workshop with a good understanding of what Quarkus is, what it is not, and how it can help you in your projects. Then, you’ll be prepared to investigate a bit more and, hopefully, contribute.

What Will You Be Developing?

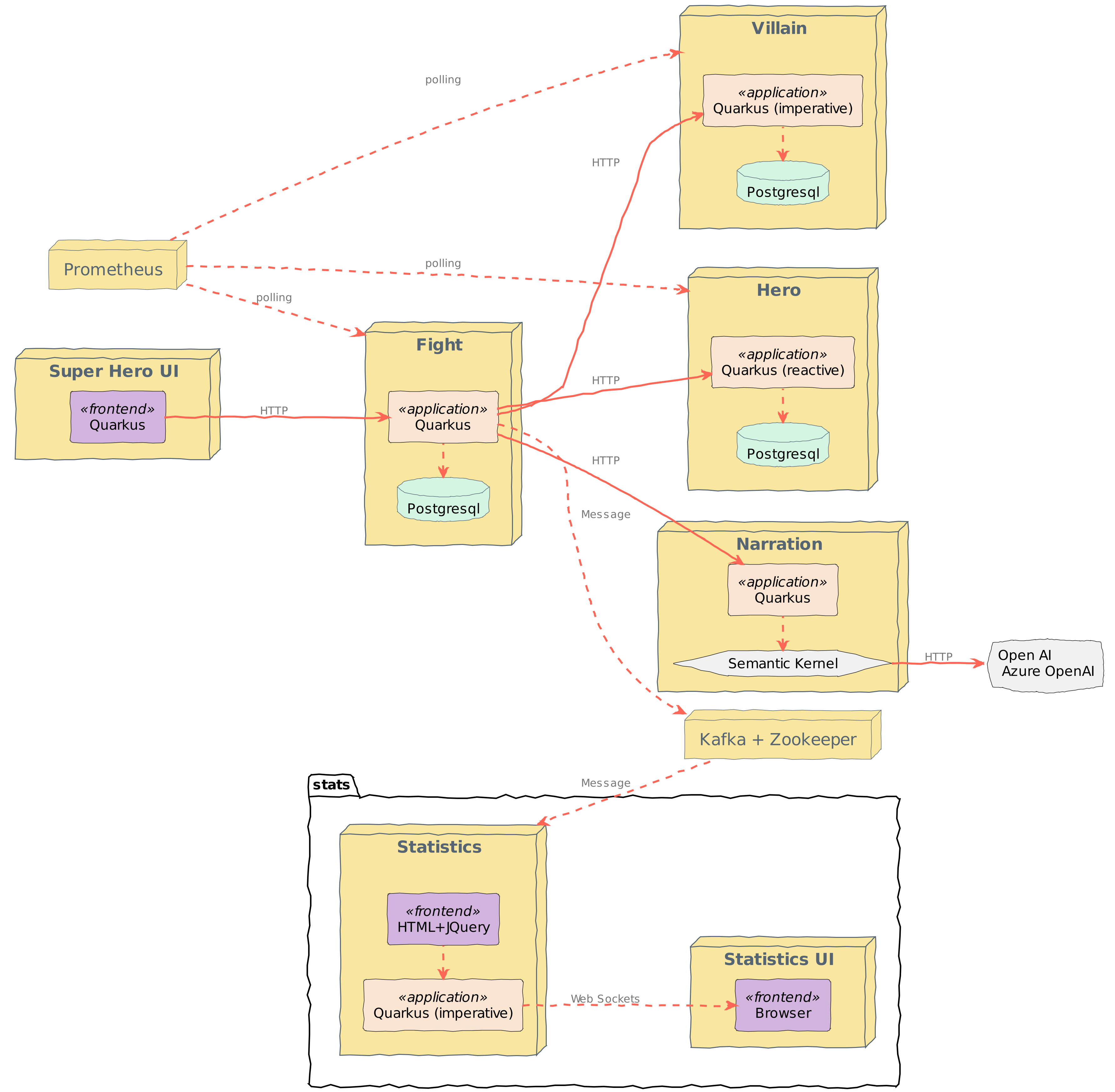

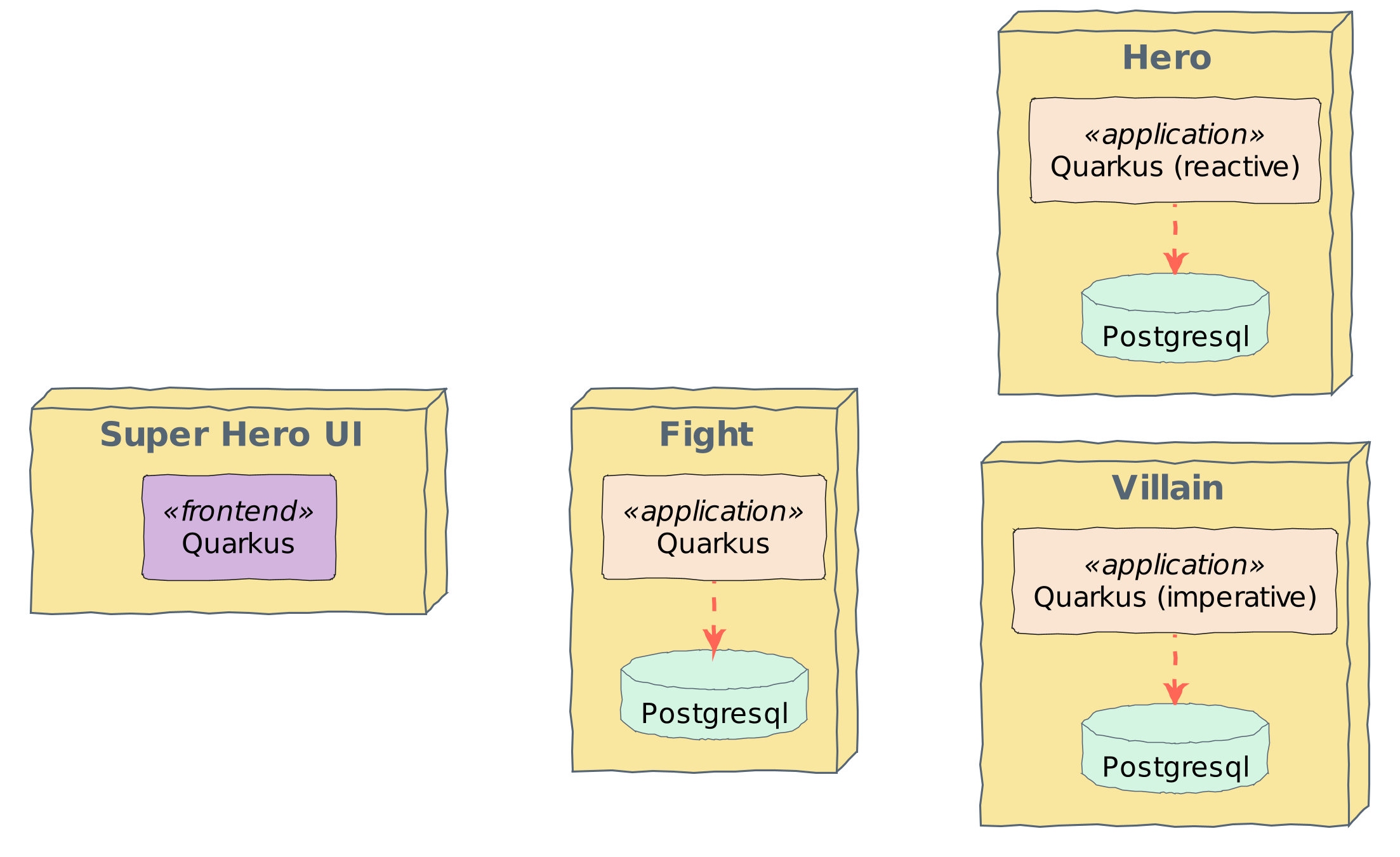

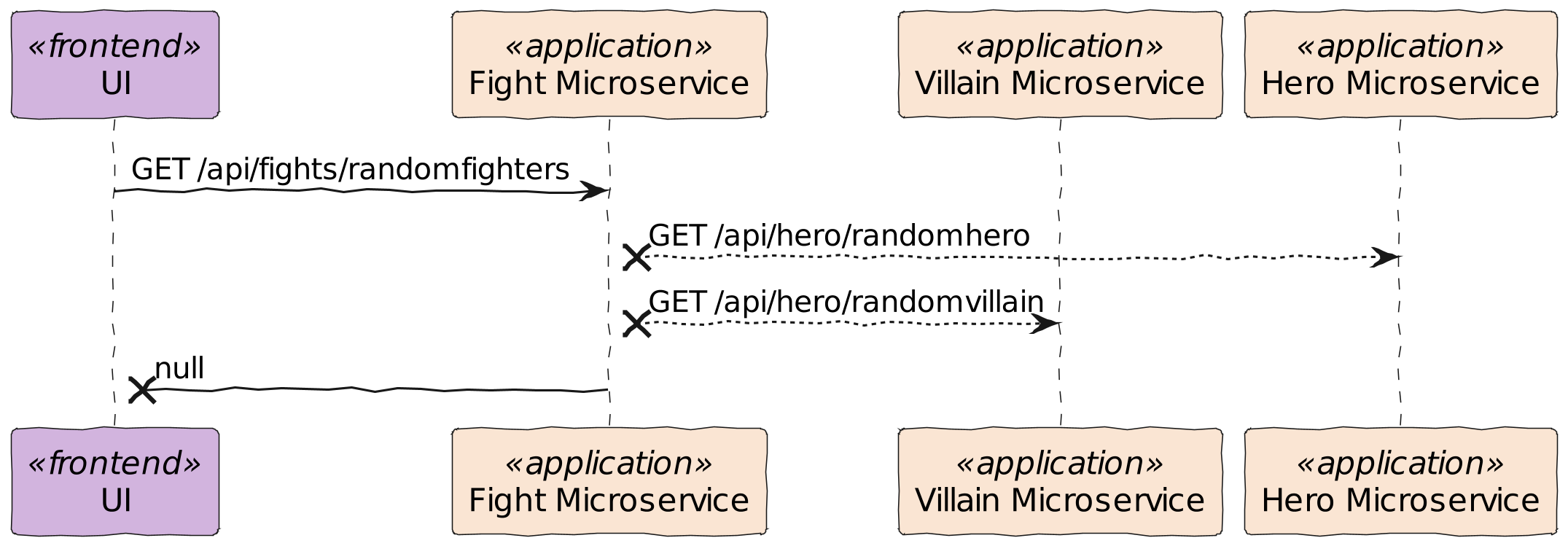

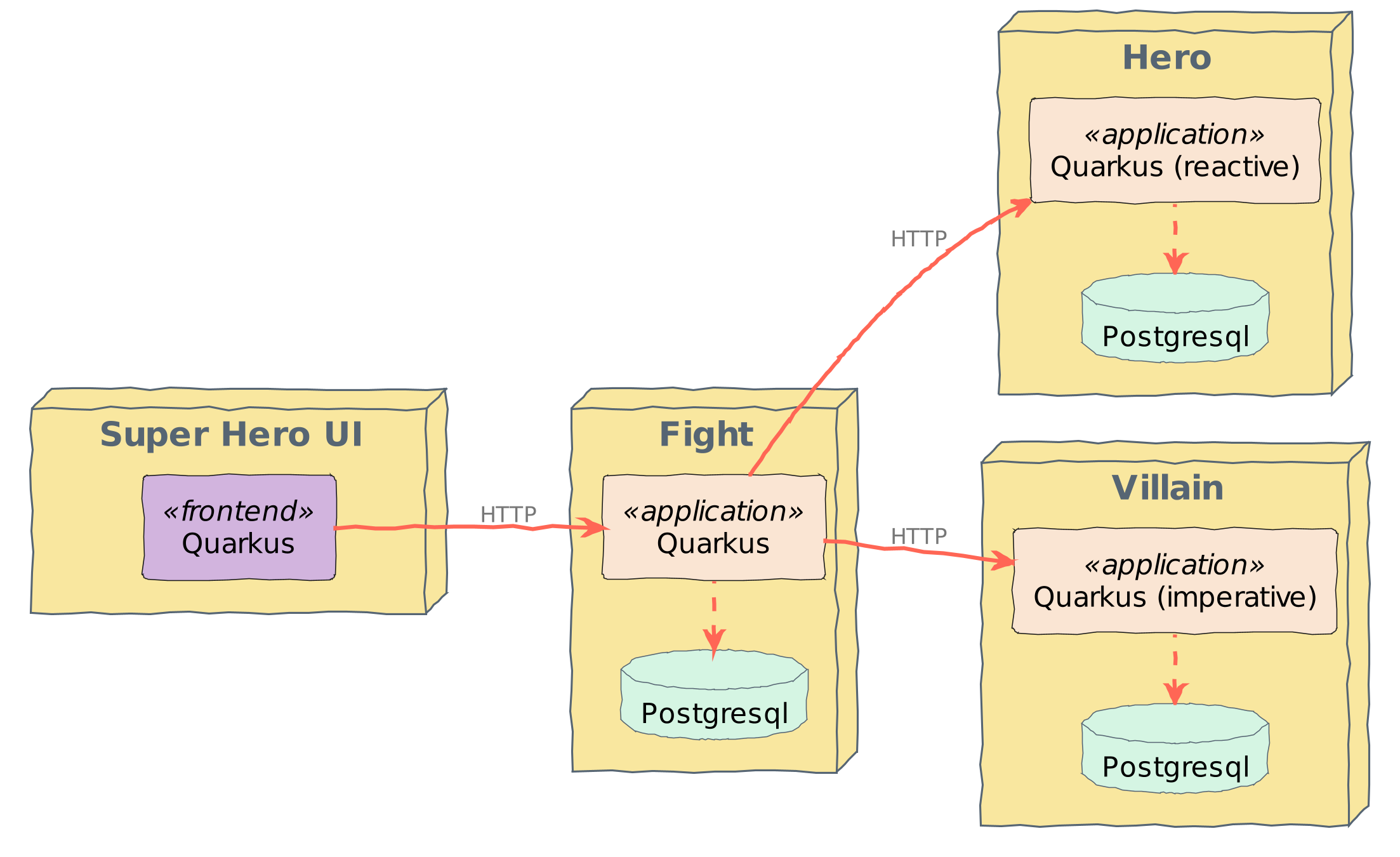

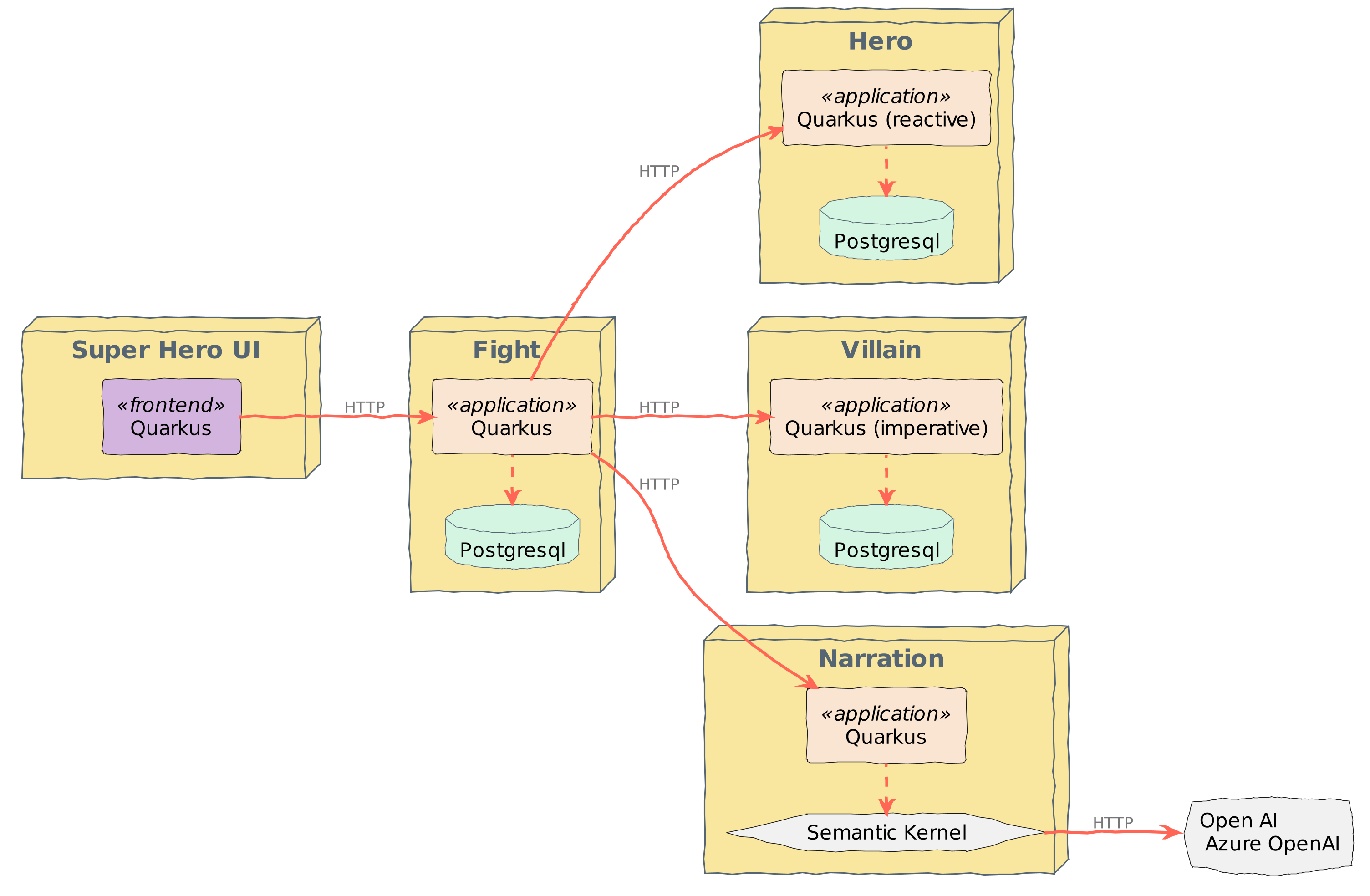

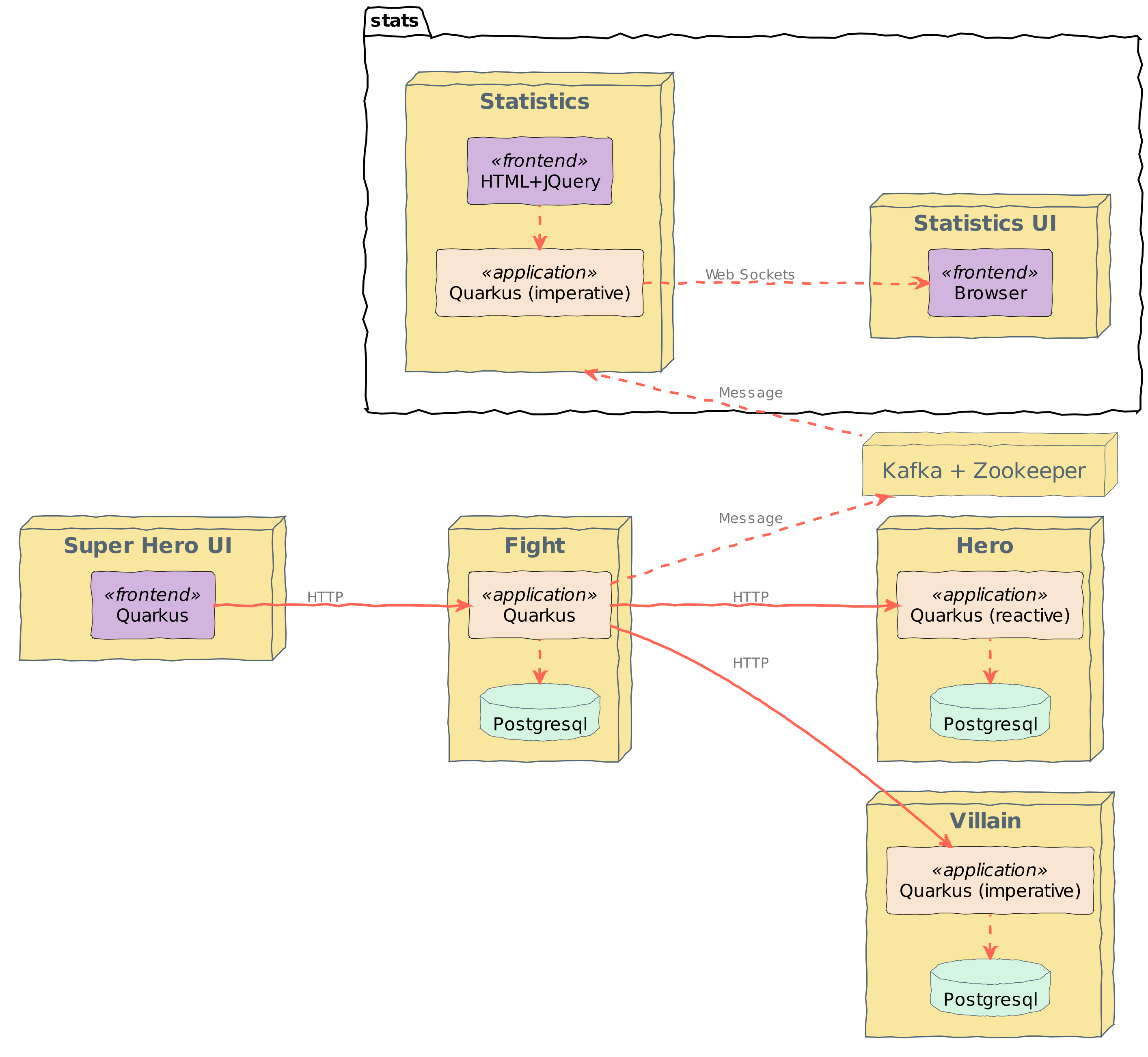

In this workshop, you will develop an application that allows superheroes to fight against supervillains. You will be developing several microservices communicating with each other:

-

Super Hero UI: a React application to pick up a random superhero, a random supervillain, and makes them fight. The Super Hero UI is exposed via Quarkus and invokes the Fight REST API

-

Villain REST API: A classical HTTP microservice exposing CRUD operations on Villains, stored in a PostgreSQL database

-

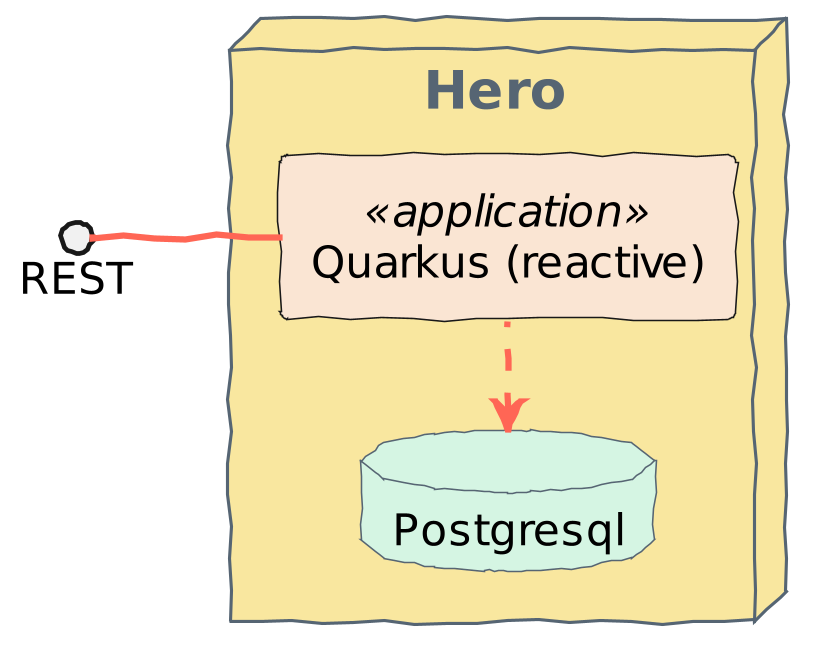

Hero REST API: A reactive HTTP microservice exposing CRUD operations on Heroes, stored in a Postgres database

-

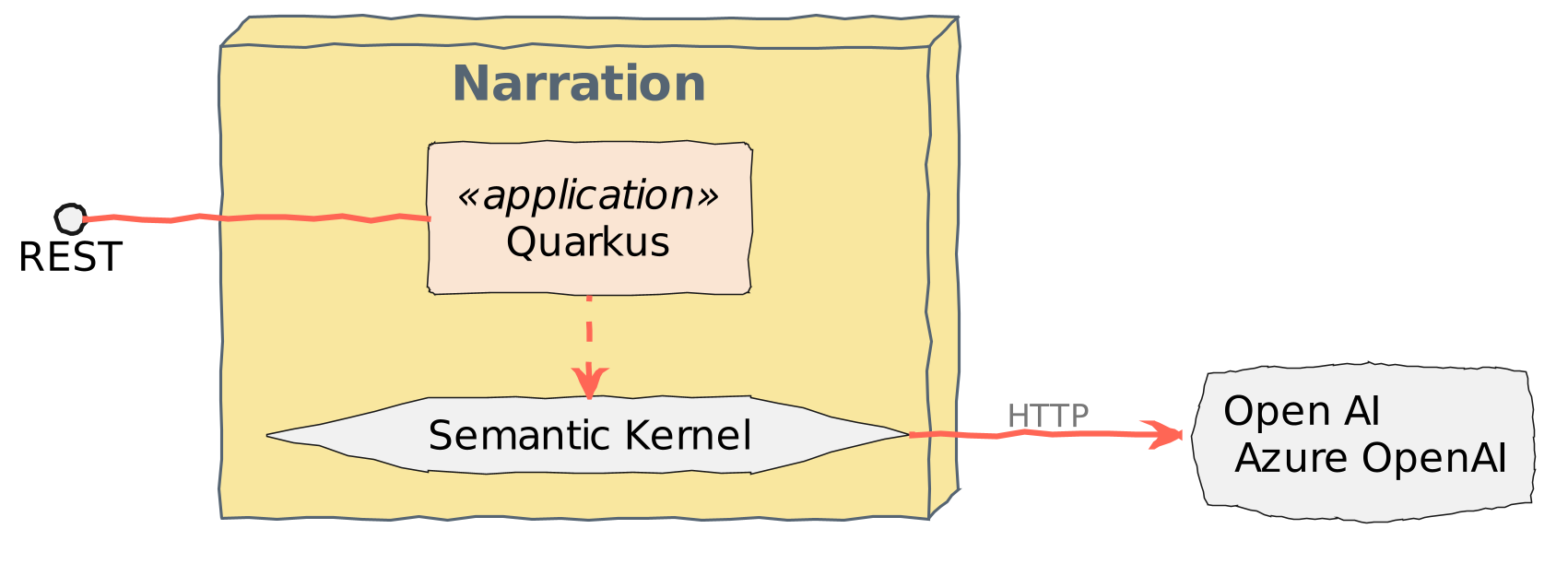

Narration REST API: This microservice talks to OpenAI/Azure OpenAI to generate a random narration of the fight.

-

Fight REST API: This REST API invokes the Hero and Villain APIs to get a random superhero and supervillain. It also invokes the Narration API to get a random narration of the fight. Each fight is, then, stored in a PostgreSQL database. This microservice can be developed using both the classical (imperative) or reactive approach. Invocations to the other microservices are protected using resilience patterns (retry, timeout, circuit-breakers)

-

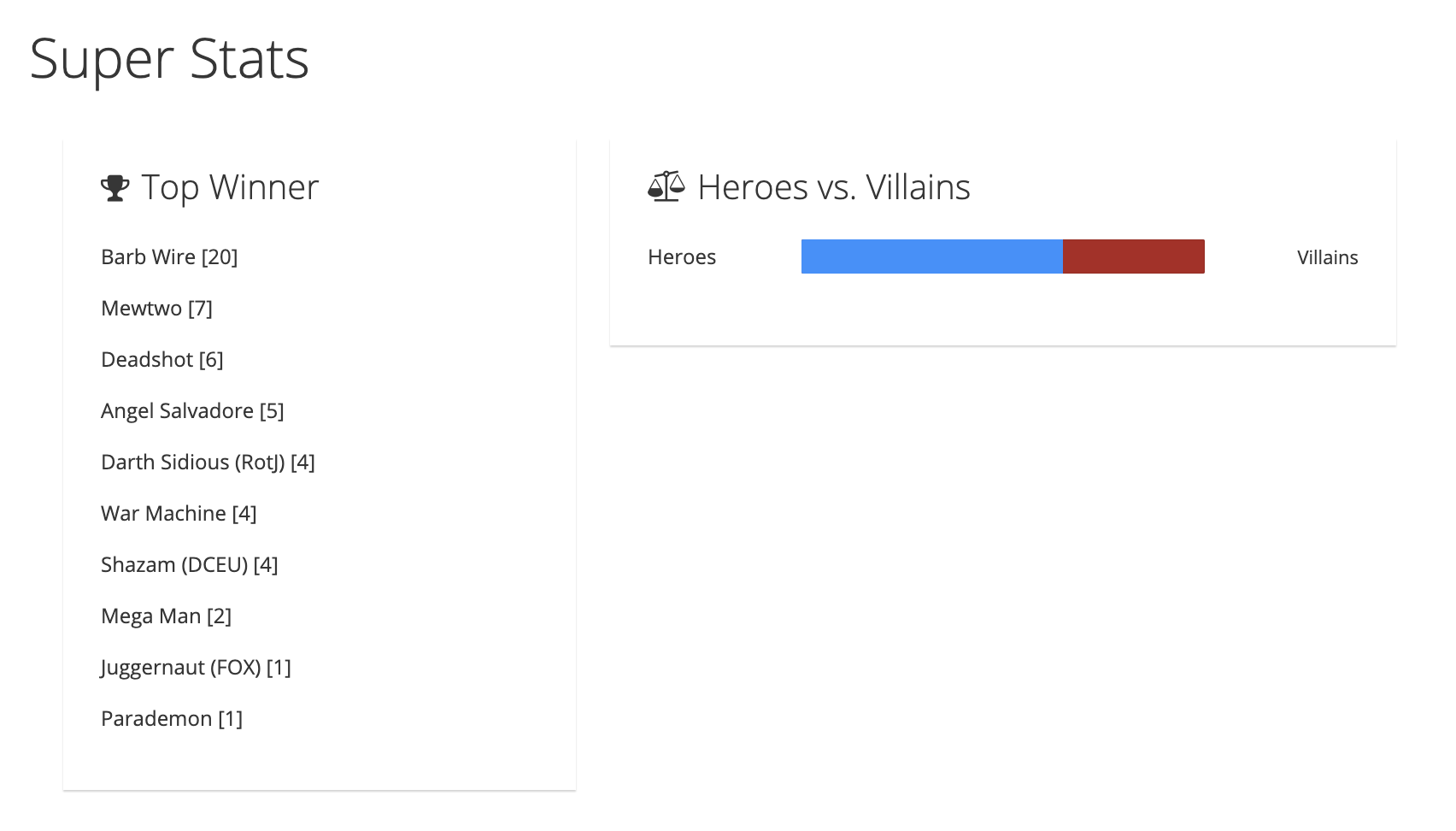

Statistics: Each fight is asynchronously sent (via Kafka) to the Statistics microservice. It has an HTML + JQuery UI displaying all the statistics.

-

Command Line Interface: Quarkus can also be used to create CLI applications. You will create a CLI application that adds load to the Fight, Hero, and Villain microservices.

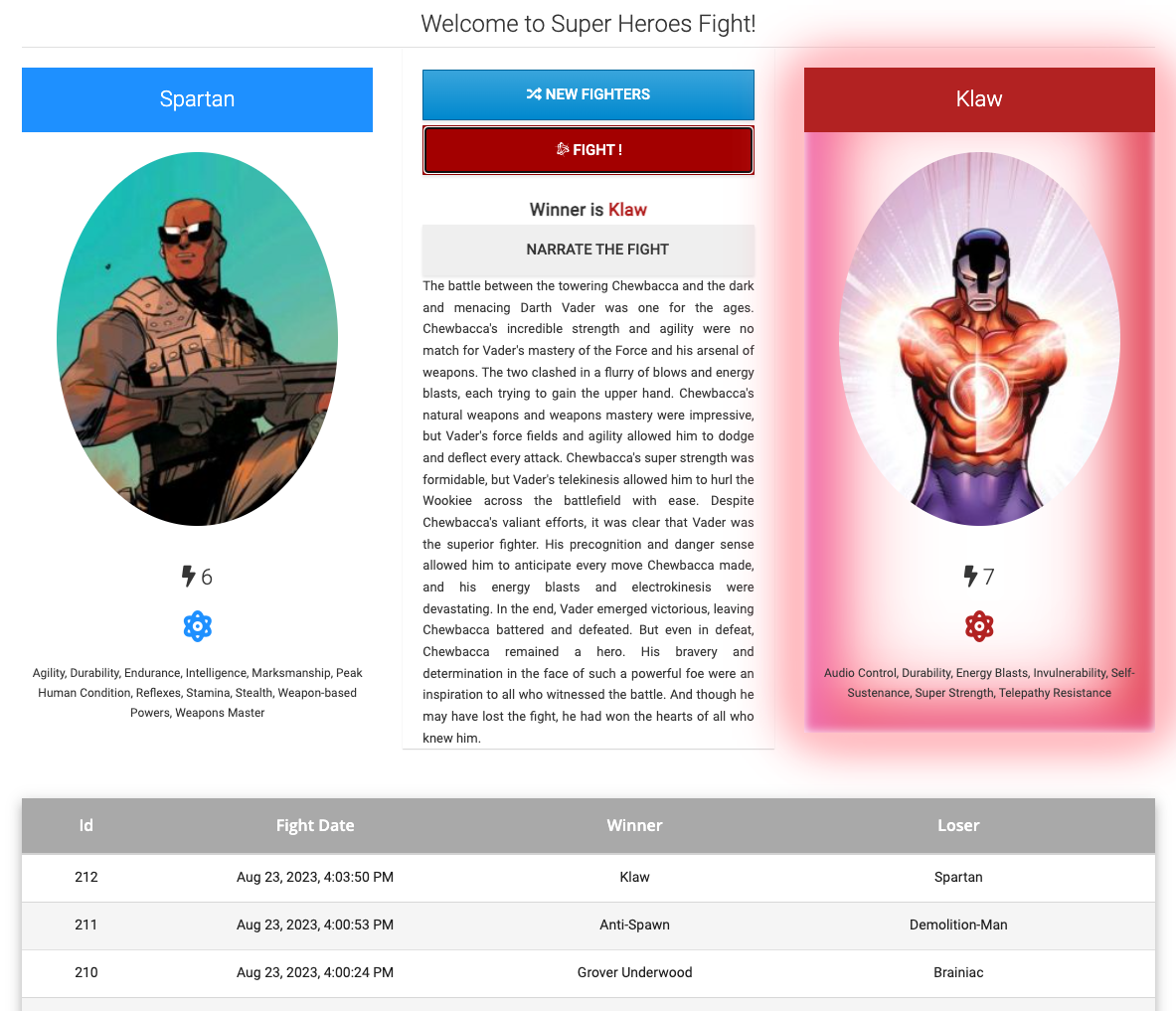

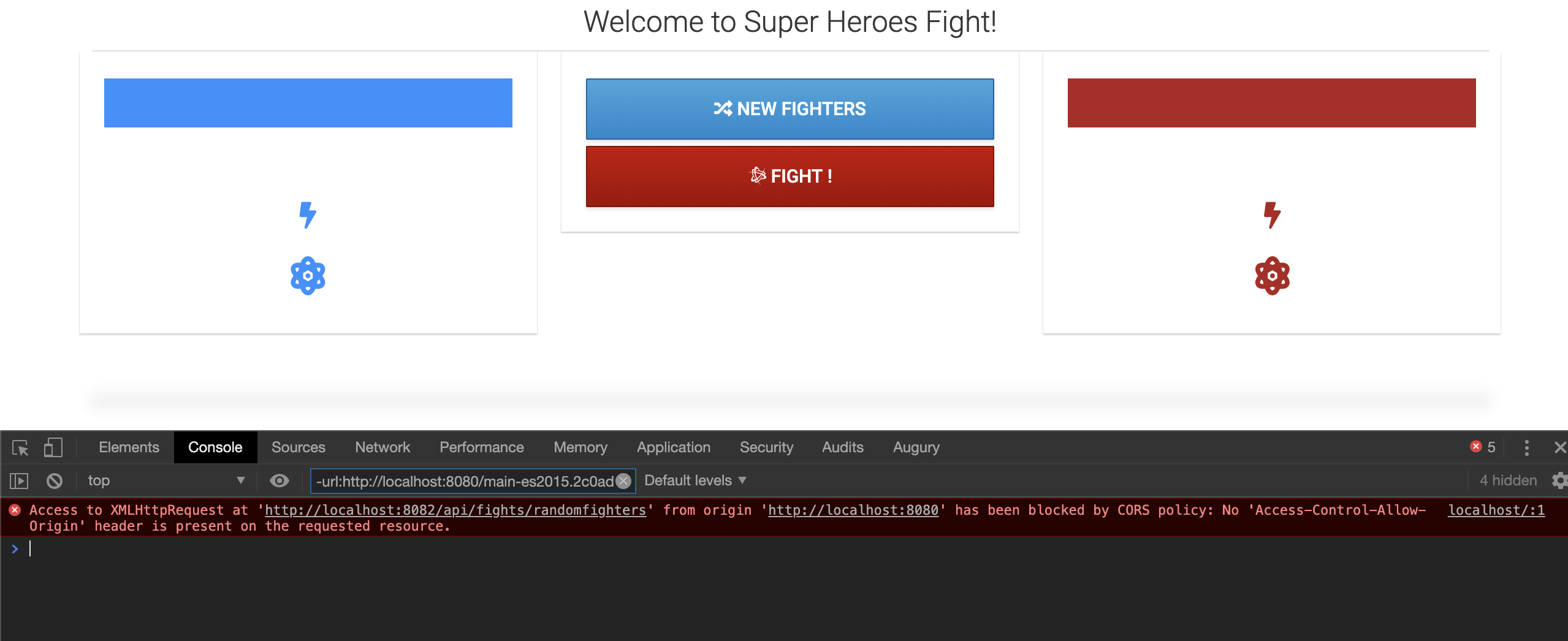

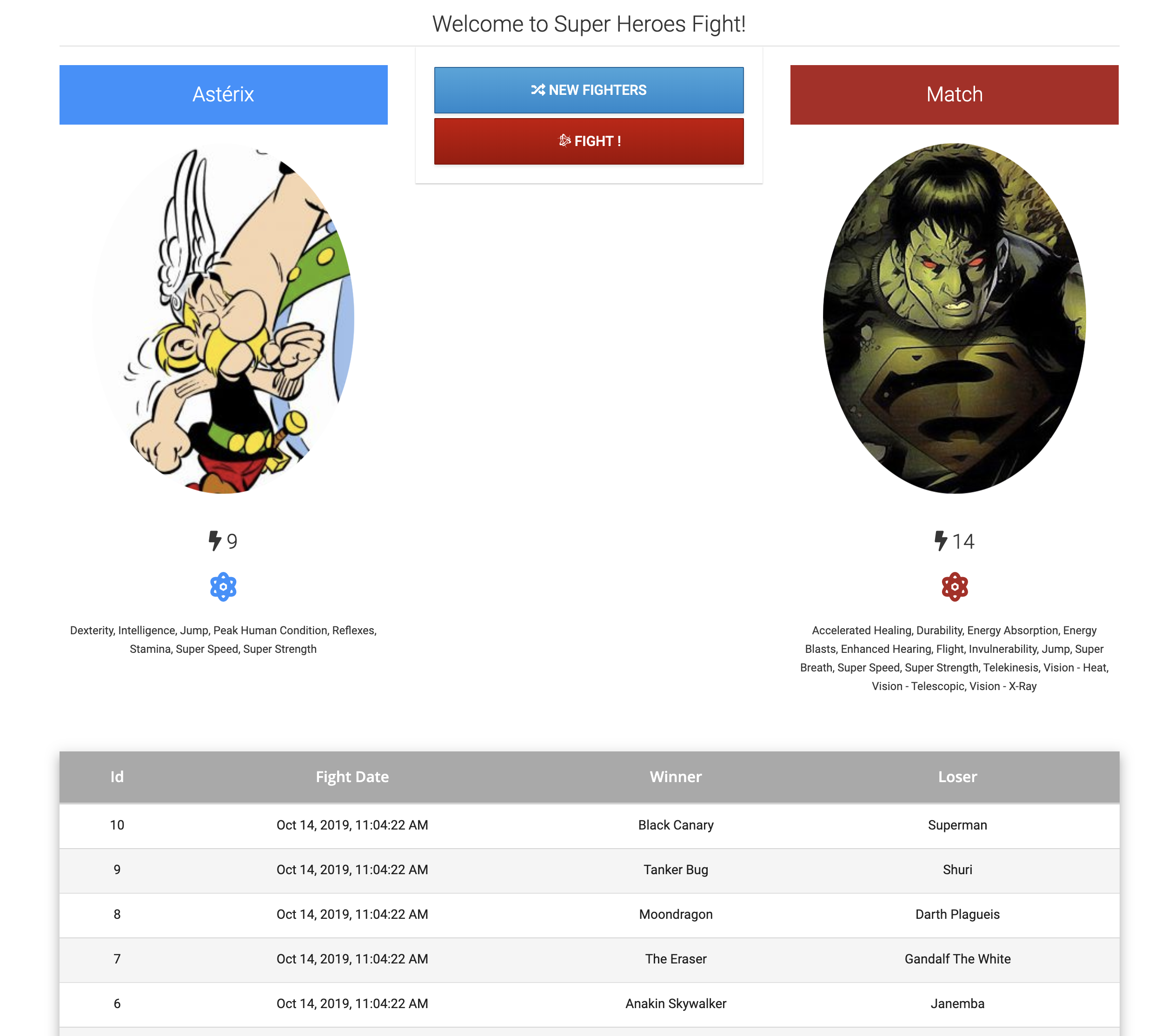

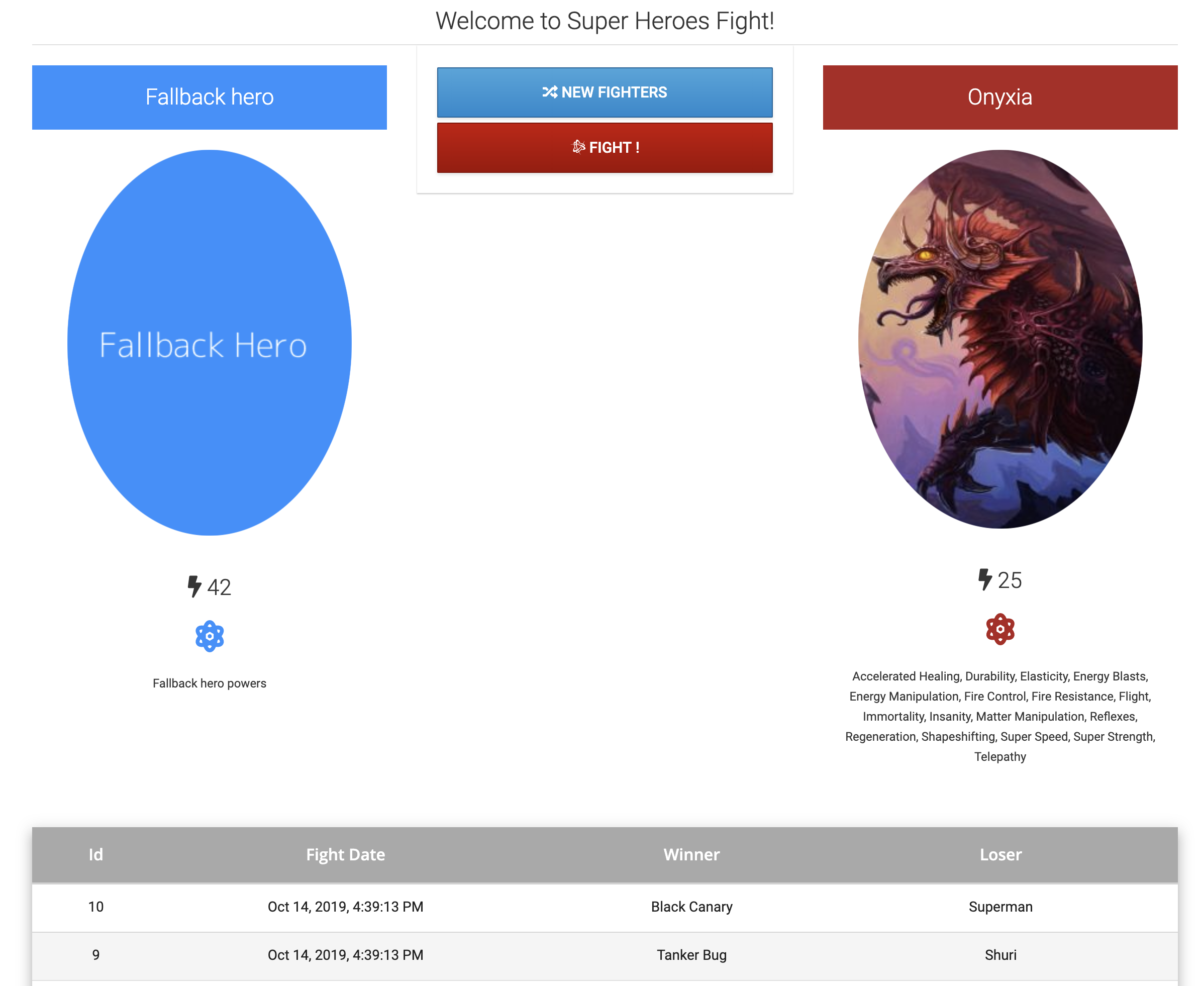

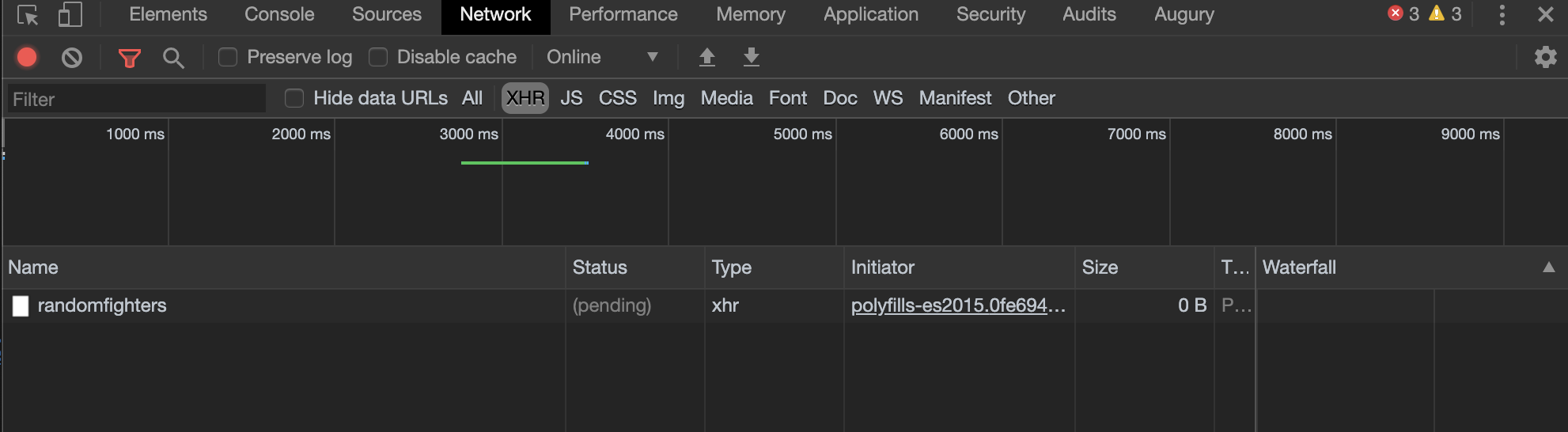

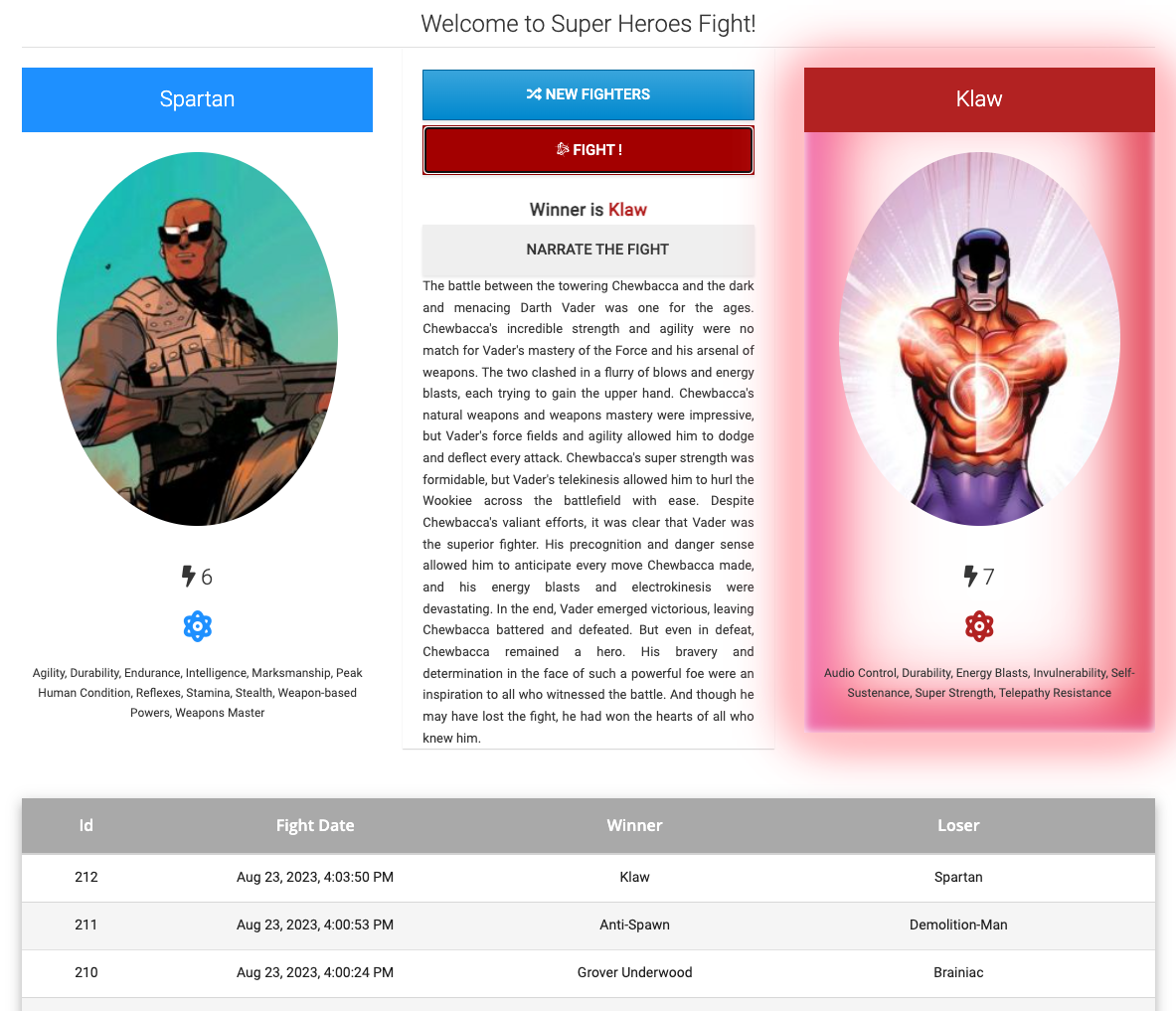

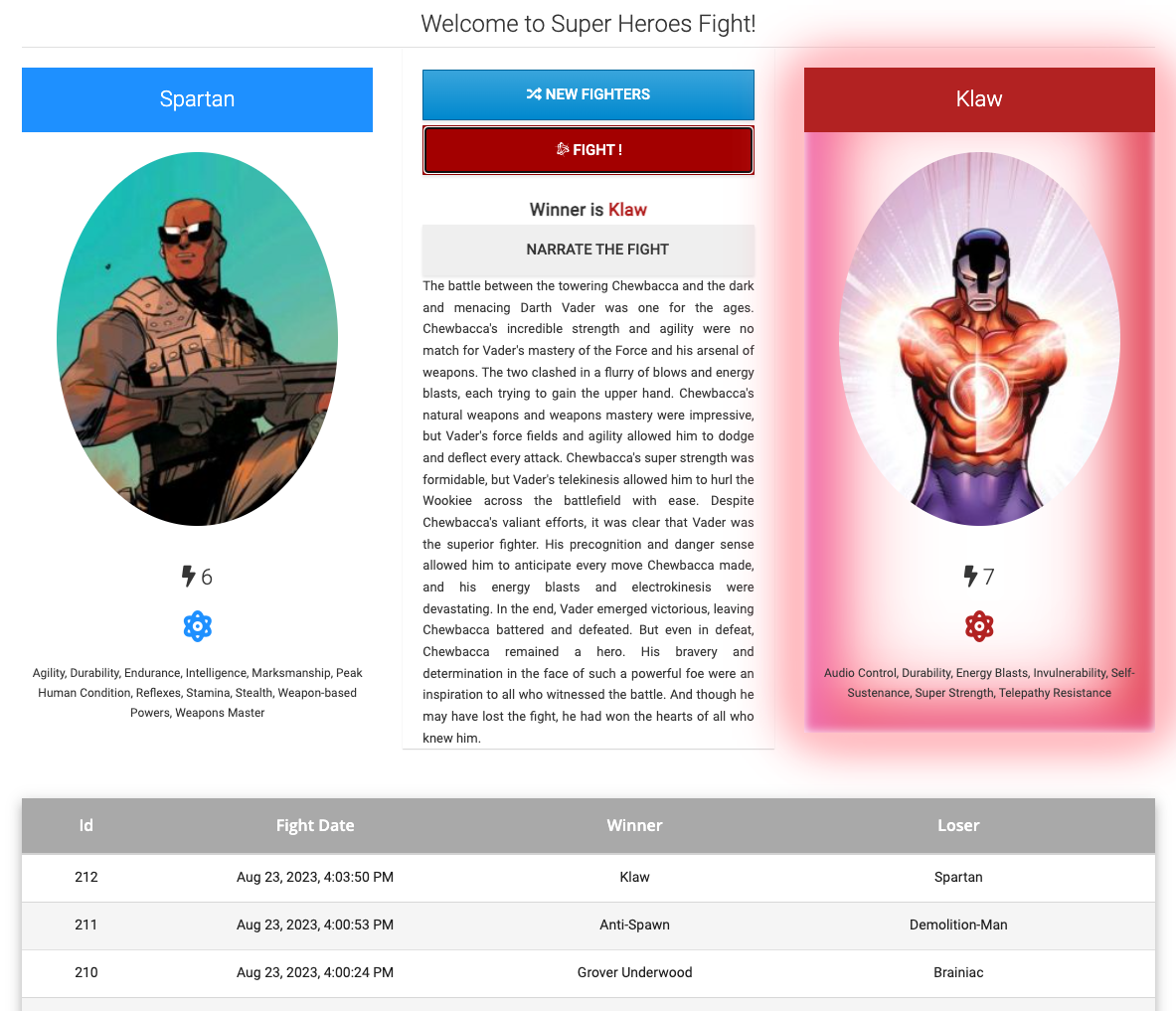

The main UI allows you to pick up one random Hero and Villain by clicking on "New Fighters." Then it’s just a matter of clicking on "Fight!" to get them to fight. If you click on "Narrate the Fight", you will have some AI-generated text describing the fight. The table at the bottom shows the list of the previous fights.

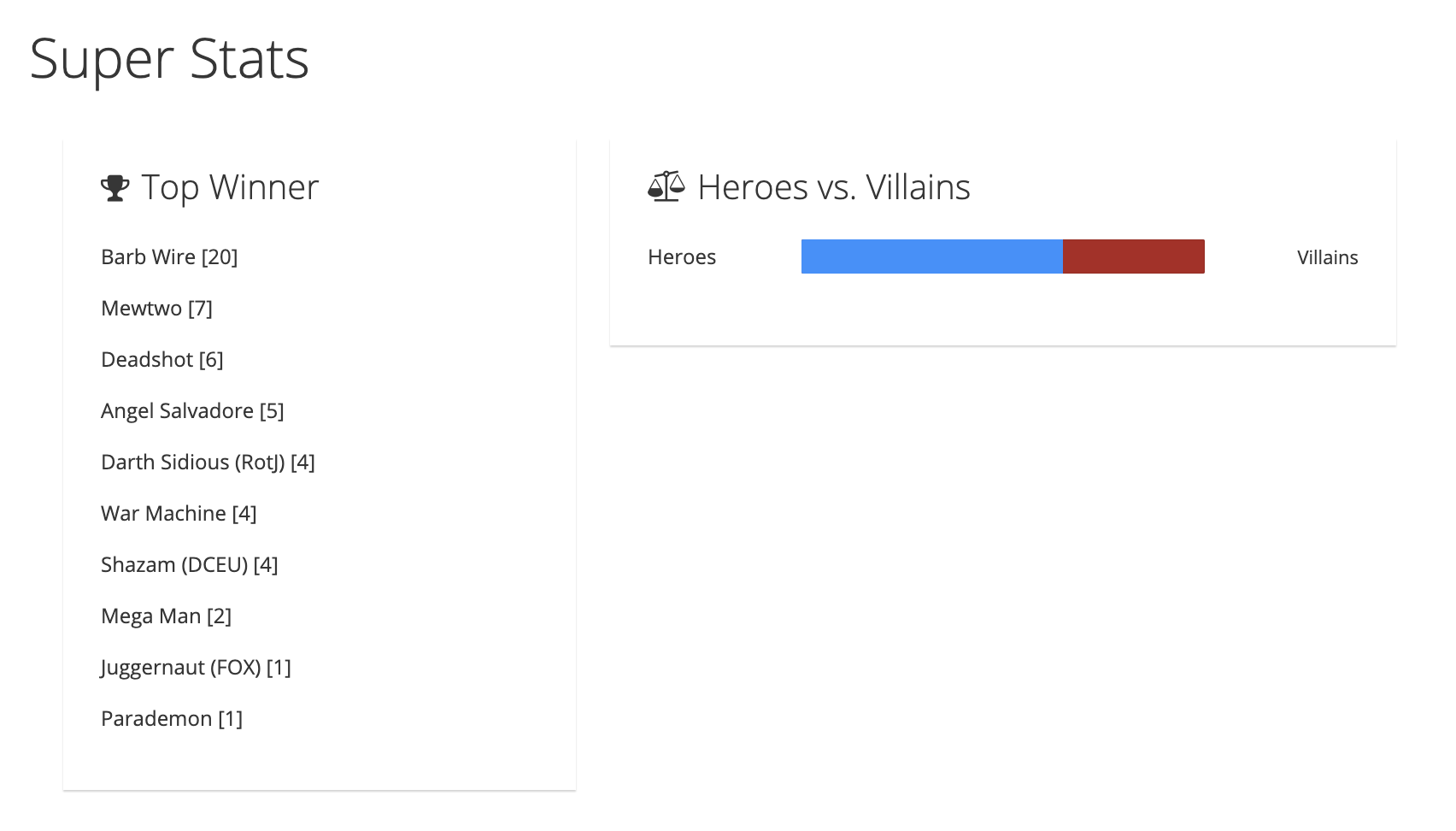

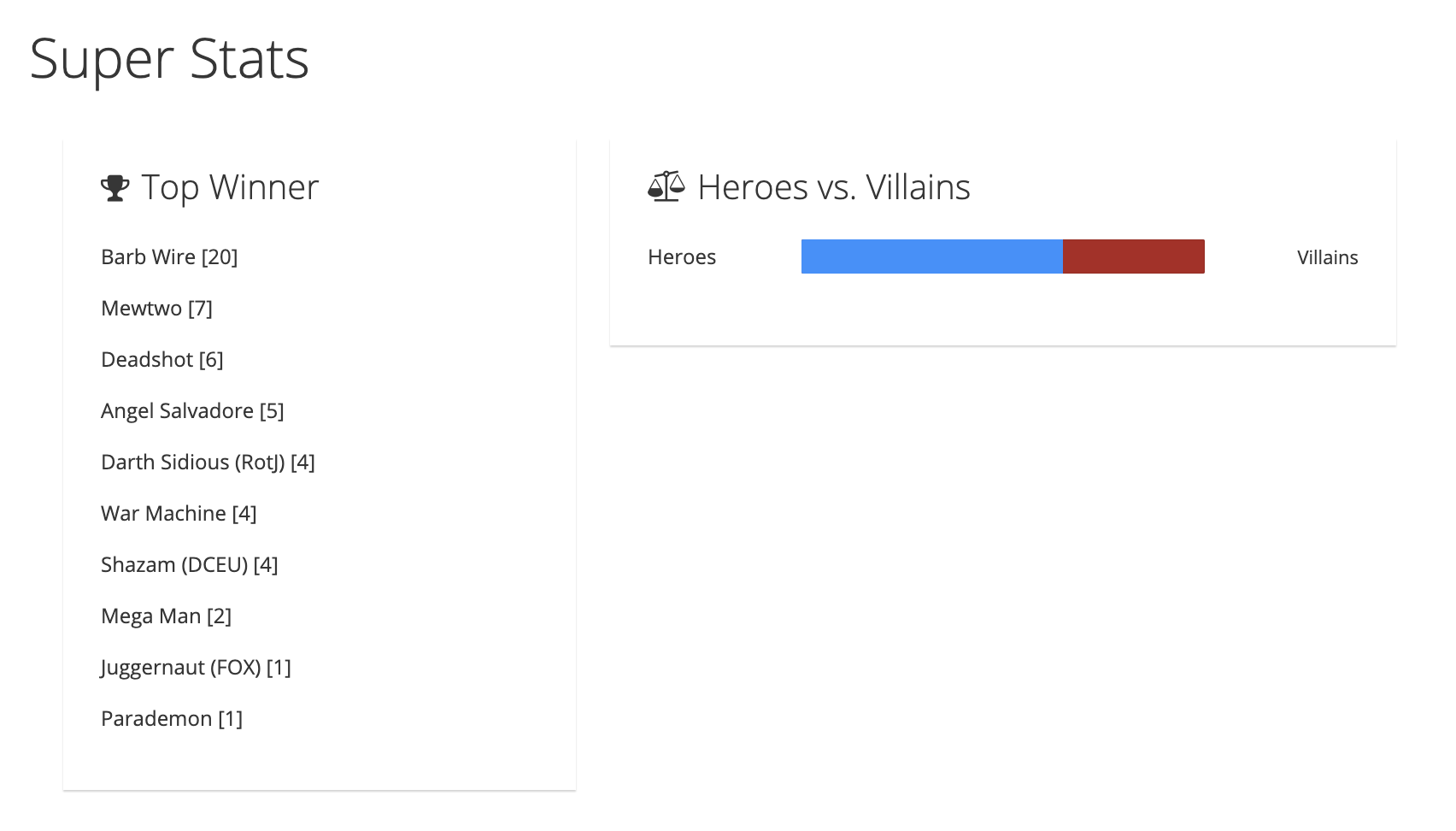

The Statistics UI shows the number of fights per hero and villain.

How Does This Workshop Work?

You have this material in your hands (either electronically or printed), and you can now follow it step by step. The structure of this workshop is as follows:

-

Installing all the needed tools: in this section, you will install all the tools and code to be able to develop, compile and execute our application

-

Developing microservices with Quarkus: in this section, you will develop a microservice architecture by creating several Maven projects, write some Java code, add JPA entities, JAX-RS REST endpoints, write some tests, use a React web application, and all that on Quarkus

-

Artificial Intelligence: in this section, you will use OpenAI or Azure OpenAI to invoke an AI so you can generate a random narration of the fight

-

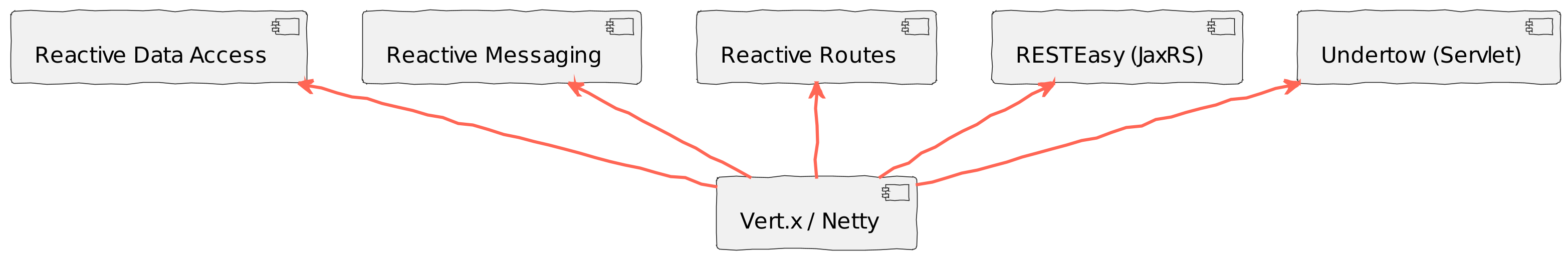

Event-driven and Reactive microservices: in this section, you will create a reactive microservice and an event-driven microservice using Kafka

-

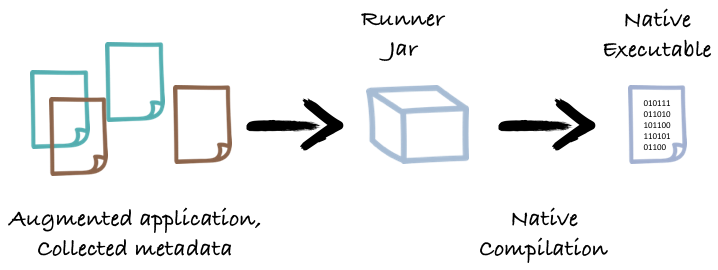

Build native executables: in this section, you will build native executables of all the microservices thanks to GraalVM

-

Containers: in this section, you will create Docker images of all the microservices

-

Cloud: once the microservices are all containerize, you can deploy them to Kubernetes

-

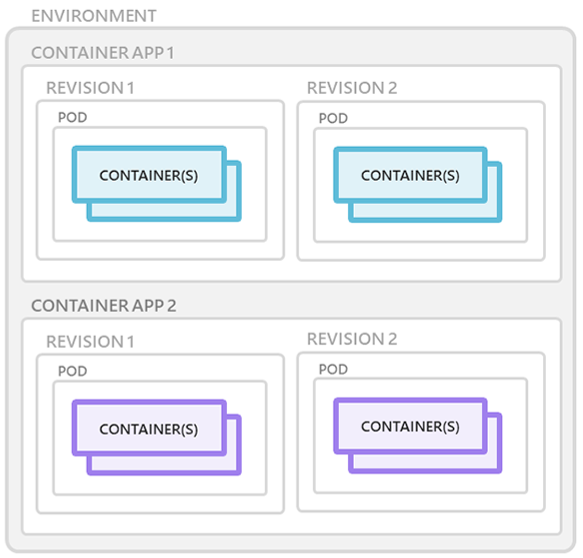

Azure Container Apps and Quarkus: this section introduces Quarkus and Azure Container Apps to make sure you have all the needed vocabulary to follow along

-

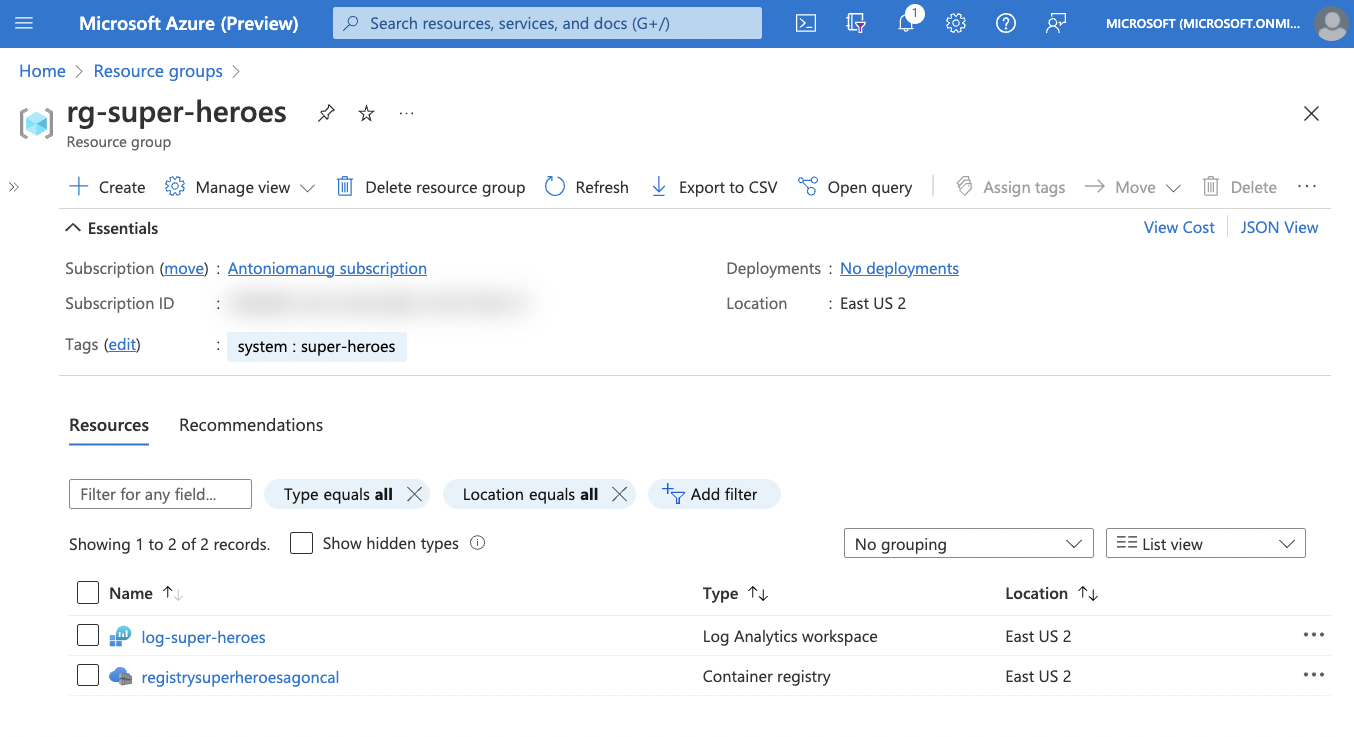

Running the Application Locally: in this section, you will build Docker images out of Quarkus microservices, execute them locally with Docker compose, push them to Azure Registry, all that using Docker and Azure CLI

-

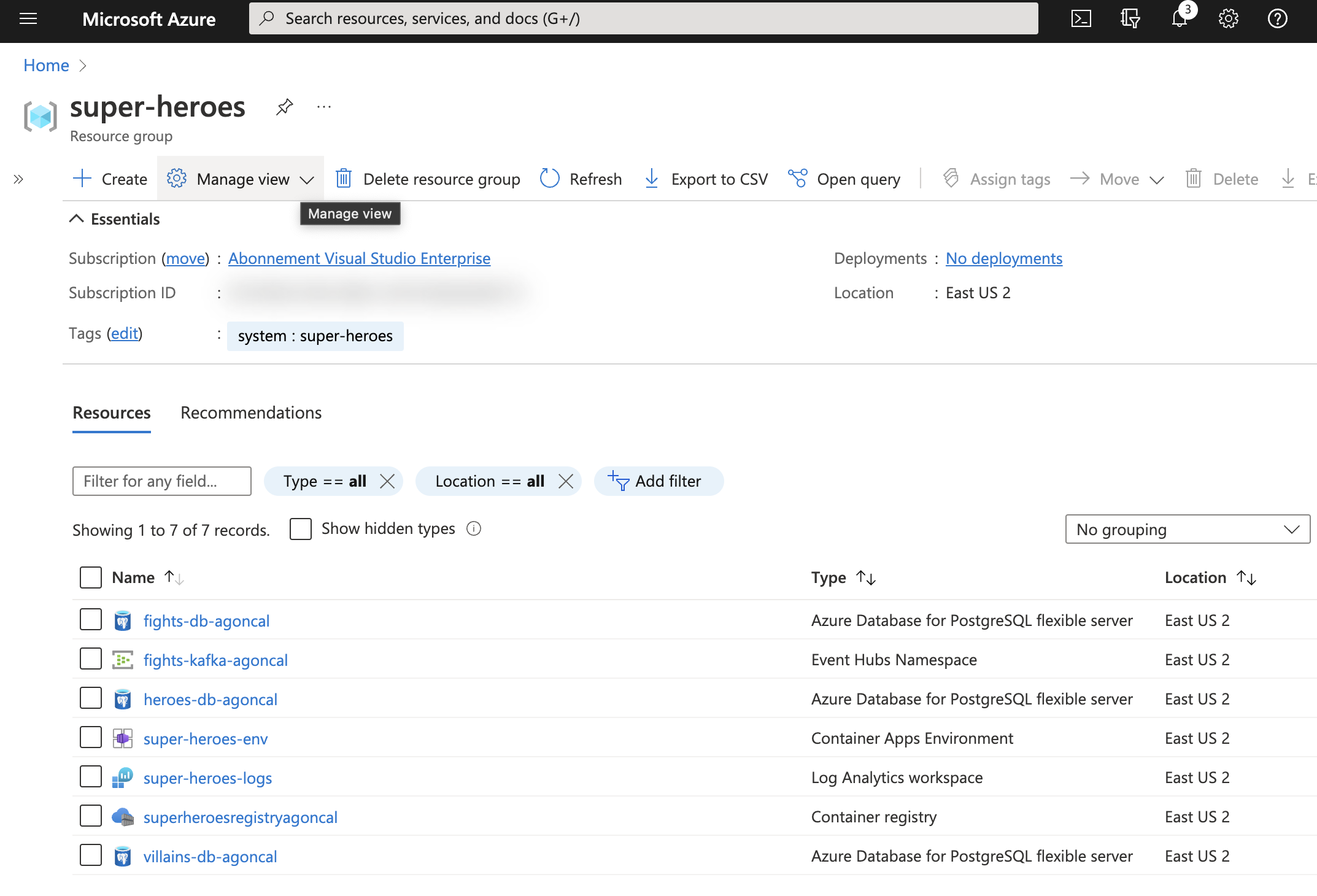

Running the Application on Azure Container Apps: in this section, you will create an entire infrastructure on Azure (Postgres databases, Kafka, etc.) and deploy the microservices to Azure Container Apps

-

Command Line Interface: in this section, you will create a command line application with Quarkus

-

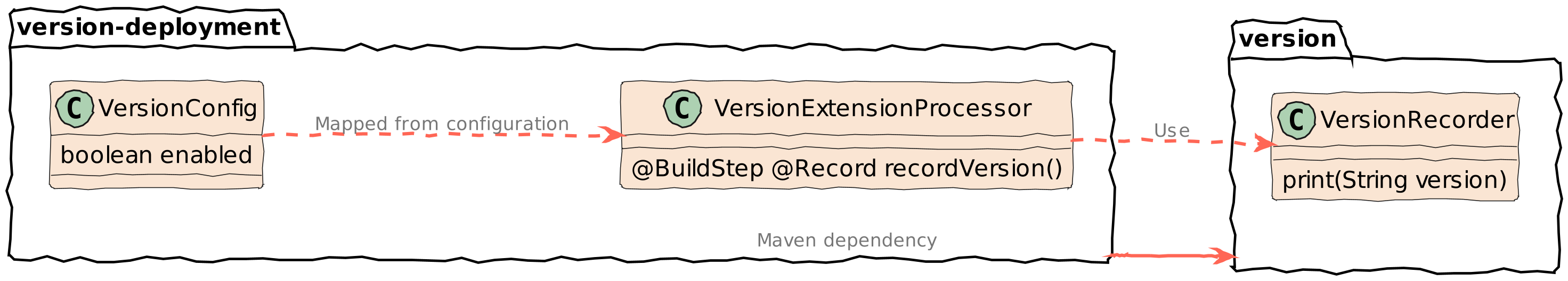

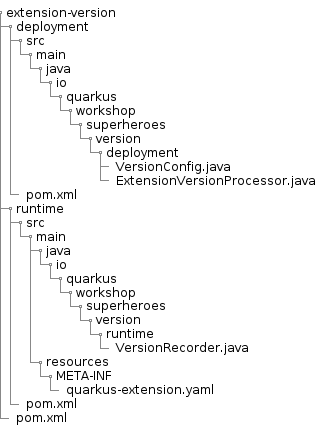

Extending Quarkus: in this section, you will create a Quarkus extension

If you already have the tools installed, skip the Installing all the needed tools section and jump to the section Developing with Quarkus, and start hacking some code and add-ons. This "à la carte" mode lets you make the most of this 6-hour long hands-on lab.

What Do You Have to Do?

This workshop should be as self-explanatory as possible. So your job is to follow the instructions by yourself, do what you are supposed to do, and do not hesitate to ask for any clarification or assistance; that’s why the team is here.

You will download a zip file (quarkus-super-heroes-workshop.zip) containing some of the code and you will need to complete it.

Oh, and be ready to have some fun!

Software Requirements

First of all, make sure you have a 64-bit computer with admin rights (so you can install all the needed tools) and at least 8Gb of RAM (as some tools need a few resources).

|

If you are using Mac OS X, make sure the version is greater than 10.11.x (El Capitan). |

This workshop will make use of the following software, tools, frameworks that you will need to install and know (more or less) how it works:

-

Any IDE you feel comfortable with (eg. Intellij IDEA, Eclipse IDE, VS Code..)

-

JDK 17

-

GraalVM 22.3

-

Docker or Podman

-

cURL (should already be installed in your OS, if not, check the appendix for installation instructions) and

jq -

OpenAI or Azure OpenAI keys

-

An Azure subscription and the Azure CLI

We will also be using Maven 3.9.x, but there is no need to install it.

Instead, the workshop scaffolding includes a maven wrapper, mvnw.

If you’d prefer to install your own Maven and don’t already have it, see the instructions at the end of the workshop.

The following section focuses on how to install and set up the needed software. You can skip the next section if you have already installed all the prerequisites.

|

This workshop assumes a bash shell. If you run on Windows, in particular, adjust the commands accordingly or install WSL. |

Installing Software

JDK 17

Essential for the development and execution of this workshop is the Java Development Kit (JDK).[1]

The JDK includes several tools such as a compiler (javac), a virtual machine, a documentation generator (`JavaDoc), monitoring tools (Visual VM) and so on[2].

The code in this workshop uses JDK 17.

Installing the JDK

To install the JDK 17, follows the instructions from https://adoptium.net/installation.html to download and install the JDK for your platform.

There is also an easier way to download and install Java if you are on Mac OS X: using SDKMAN!.

SDKMAN![3] is a tool for managing parallel versions of multiple Software Development Kits (SDK) on most Unix based systems.

It provides a convenient Command Line Interface (CLI) and API for installing, switching, removing and listing Candidates.

Developers often need to manage parallel versions of different builds of SDKs in their environment and switch from one to another.

Manually setting the PATH variable can become quickly painful.

That’s when SDKMAN! can help you.

Installing SDKMAN!

Installing SDKMAN! is easy. On Bash and ZSH shells simply open a new terminal and enter:

$ curl -s "https://get.sdkman.io" | bashFollow the instructions on-screen to complete installation.

Listing Java Versions

To install Java, we need to list the available versions on SDKMAN! using the list command.

The result is a table of entries grouped by the vendor and sorted by version:

$ sdk list java

==========================================================================

Available Java Versions for macOS ARM 64bit

==========================================================================

Vendor | Use | Version | Dist | Status | Identifier

--------------------------------------------------------------------------

Corretto | | 19.0.1 | amzn | | 19.0.1-amzn

....

Microsoft | | 17.0.5 | ms | | 17.0.5-ms

...

Oracle | | 19.0.1 | oracle | | 19.0.1-oracle

...

Temurin | | 19.0.1 | tem | | 19.0.1-tem

| >>> | 17.0.5 | tem | installed | 17.0.5-tem

| | 11.0.17 | tem | installed | 11.0.17-tem

==========================================================================If you have any Java candidate installed, you should see installed in the Status column.

If you don’t have any Java candidate installed, use SDKMAN! to install one or several.

Installing Java 17

There are several different vendors of Java, and each vendor has its own distribution. Most of these distributions are available on SDKMAN! and can easily be installed. Let’s install Temurin.

To install Temurin 17, we copy its identifier (17-tem), which is the version from the table, and we add it as an argument in the install command:

$ sdk install java 17-tem

Downloading: java 17-tem

Repackaging Java 17-tem...

Installing: java 17-tem

Done installing!

Do you want java 17-tem to be set as default? (Y/n):For Linux distributions, there are also packaged java installations.

# dnf (rpm-based)

dnf install java-17-openjdk

# Debian-based distributions:

$ apt-get install openjdk-17-jdkChecking for Java Installation

Once the installation is complete, it is necessary to set the JAVA_HOME variable and the $JAVA_HOME/bin directory to the PATH variable.

Check that your system recognizes Java by entering java -version and the Java compiler with javac -version.

$ java -version

openjdk version "17.0.5" 2022-10-18

OpenJDK Runtime Environment GraalVM CE 22.3.0 (build 17.0.5+8-jvmci-22.3-b08)

OpenJDK 64-Bit Server VM GraalVM CE 22.3.0 (build 17.0.5+8-jvmci-22.3-b08, mixed mode, sharing)

$ javac -version

javac 17.0.5Docker or Podman

Docker is a set of utilities that use OS-level virtualization to deliver software in packages called containers. Containers are isolated from one another and bundle their software, libraries, and configuration files; they can communicate with each other through well-defined channels.

Installing Docker

Quarkus use Testcontainers, and therefore also Docker, to ease the management of different technical services (database, monitoring…) during development.

Our workshop also uses Docker to manage these services in a production-style deployment.

So for this, we need to install docker and docker compose

Installation instructions are available on the following page:

-

Mac OS X - https://docs.docker.com/docker-for-mac/install/ (version 20+)

-

Windows - https://docs.docker.com/docker-for-windows/install/ (version 20+)

-

CentOS - https://docs.docker.com/install/linux/docker-ce/centos/

-

Debian - https://docs.docker.com/install/linux/docker-ce/debian/

-

Fedora - https://docs.docker.com/install/linux/docker-ce/fedora/

-

Ubuntu - https://docs.docker.com/install/linux/docker-ce/ubuntu/

On Linux, don’t forget the post-execution steps described on https://docs.docker.com/install/linux/linux-postinstall/.

If you do not have a Docker licence, you might prefer podman (https://podman.io/) instead of docker. To install podman and podman-compose please follow the instructions at https://quarkus.io/guides/podman. Do not forget the extra steps to configure the testcontainers library. As a convenience you can even alias docker to podman.

|

Checking for Docker Installation

Once installed, check that both docker and docker compose are available in your PATH.

For that, execute the following commands:

$ docker versionYou should see something like this:

Docker version 20.10.8, build 3967b7d

Cloud integration: v1.0.24

Version: 20.10.14

API version: 1.41Then, check the Docker Compose version:

$ docker compose versionDocker compose being a separate utility, you should get a different version than Docker itself:

Docker Compose version v2.5.0Finally, run your first container as follows:

$ docker run hello-worldFor the first time, this will download the hello-world image from the Docker Hub and run it.

You should get something like this:

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(amd64)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/Some Docker Commands

Docker is a command-line utility where you can use several parameters and options to start/stop a container.

You invoke docker with zero, one, or several command-line options with the container or image ID you want to work with.

Docker comes with several options that are described in the documentation if you need more help.[4]

To get some help on the commands and options, you can type, use the following command:

$ docker help

Usage: docker [OPTIONS] COMMAND

$ docker help attach

Usage: docker attach [OPTIONS] CONTAINER

Attach local standard input, output, and error streams to a running containerHere are some commands that you will be using to start/stop containers in this workshop.

-

docker container ls: Lists containers. -

docker container start CONTAINER: Starts one or more stopped containers. -

docker compose -f docker-compose.yaml up -d: Starts all containers defined in a Docker Compose file. -

docker compose -f docker-compose.yaml down: Stops all containers defined in a Docker Compose file.

jq

Very often, when using cURL to invoke a RESTful web service, we get some JSON payload. cURL does not format this JSON so that you will get a flat String such as:

curl http://localhost:8083/api/heroes

[{"id":"1","name":"Chewbacca","level":"14"},{"id":"2","name":"Wonder Woman","level":"15"},{"id":"3","name":"Anakin Skywalker","level":"8"}]But what we want is to format the JSON payload, so it is easier to read.

For that, there is a neat utility tool called jq that we could use.

jq is a tool for processing JSON inputs, applying the given filter to its JSON text inputs, and producing the filter’s results as JSON on standard output.[5]

Installing jq

You can install it on Mac OSX with a simple brew install jq.

Checking for jq Installation

Once installed, it’s just a matter of piping the cURL output to jq like this:

curl http://localhost:8083/api/heroes | jq

[

{

"id": "1",

"name": "Chewbacca",

"lastName": "14"

},

{

"id": "2",

"name": "Wonder Woman",

"lastName": "15"

},

{

"id": "3",

"name": "Anakin Skywalker",

"lastName": "8"

}

]GraalVM 22.3

GraalVM extends the Java Virtual Machine (JVM) to support more languages and several execution modes.[6] It supports a large set of languages: Java of course, other JVM-based languages (such as Groovy, Kotlin etc.) but also JavaScript, Ruby, Python, R and C/C++. It includes a high-performance Java compiler, which can be used in a Just-In-Time (JIT) configuration on the HotSpot VM or in an Ahead-Of-Time (AOT) configuration with the GraalVM native compiler. One objective of GraalVM is to improve the performance of Java virtual machine-based languages to match the performance of native languages.

Prerequisites for GraalVM

On Linux, you need GCC and the Glibc and zlib headers. Examples for common distributions:

# dnf (rpm-based)

sudo dnf install gcc glibc-devel zlib-devel

# Debian-based distributions:

sudo apt-get install build-essential libz-dev zlib1g-devOn macOS X there are several ways to install GraalVM. But using SDKMAN! is the preferred option, as it allows you to easily switch between different versions of GraalVM if needed.

On Windows, you need the Developer Command Prompt for Microsoft Visual C++. Check the Windows prerequisites page for details.

Installing GraalVM

Listing GraalVM Versions

First of all, check if you already have the GraalVM Candidates installed on your machine.

To list the available versions of GraalVM, use the SDKMAN! list java command.

The result is a table of entries grouped by the vendor and sorted by version.

GraalVM has its own group and is listed under the GraalVM vendor:

$ sdk list java

=======================================================================

Available Java Versions for macOS ARM 64bit

=======================================================================

Vendor | Use | Version | Dist | Status | Identifier

-----------------------------------------------------------------------

GraalVM | | 22.3.r19 | grl | | 22.3.r19-grl

| | 22.3.r17 | grl | | 22.3.r17-grl

| | 22.3.r11 | grl | | 22.3.r11-grl

| | 22.2.r17 | grl | | 22.2.r17-grl

| | 22.2.r11 | grl | | 22.2.r11-grl

| | 22.1.0.r17 | grl | | 22.1.0.r17-grl

| | 22.1.0.r11 | grl | | 22.1.0.r11-grl

=======================================================================If you have any GraalVM candidate installed, you should see installed in the Status column.

If you don’t have any GraalVM candidate installed, use SDKMAN! to install one or several.

Installing a GraalVM Version

There are several versions of GraalVM available for different versions of the JDK.

Because we are using Java 17 in this fascicle, we will install the version of GraalVM that is compatible with Java 17 (the versions finishing with r17-grl).

Let’s install GraalVM 22.3.

$ sdk install java 22.3.r17-grl

Downloading: java 22.3.r17-grl

Repackaging Java 22.3.r17-grl...

Installing: java 22.3.r17-grl

Done installing!

Do you want java 22.3.r17-grl to be set as default? (Y/n):Once installed, define the GRAALVM_HOME environment variable to point to the directory where GraalVM is installed (eg. on Mac OS X it could be ~/.sdkman/candidates/java/22.3.r17-grl).

|

Mac OS X - Catalina

On Mac OS X Catalina, the installation of the |

Checking for GraalVM Installation

Once installed and set up, you should be able to run the following command and get something like the following output.

$ $GRAALVM_HOME/bin/native-image --versionYou should get something like:

native-image 22.0.2 2024-07-16

OpenJDK Runtime Environment Mandrel-24.0.2.0-Final (build 22.0.2+9)

OpenJDK 64-Bit Server VM Mandrel-24.0.2.0-Final (build 22.0.2+9, mixed mode)OpenAI or Azure OpenAI Subscriptions

The Narration microservice needs to access an AI service to generate the text narrating the fight. You can choose between OpenAI or Azure OpenAI. Azure OpenAI, or "OpenAI on Azure" is a service that provides REST API access to OpenAI’s models, including the GPT-4, GPT-3, Codex and Embeddings series. The difference between OpenAI and Azure OpenAI is that it runs on Azure global infrastructure, which meets your production needs for critical enterprise security, compliance, and regional availability.

OpenAI

OpenAI is a leading artificial intelligence research organization creating cutting-edge AI technologies. OpenAI offers a range of APIs that enable developers to integrate AI capabilities into their applications. Some of these APIs include:

-

GPT API: This API provides access to the GPT-3 and 4 language model

-

Codex API: This API provides access to the Codex model, which is a language model trained on a dataset of public code

-

DALL-E API: This API provides access to the DALL-E model, which is a language model trained on a dataset of text-image pairs

We will be using the GPT-3 API to generate the text narrating the fight.

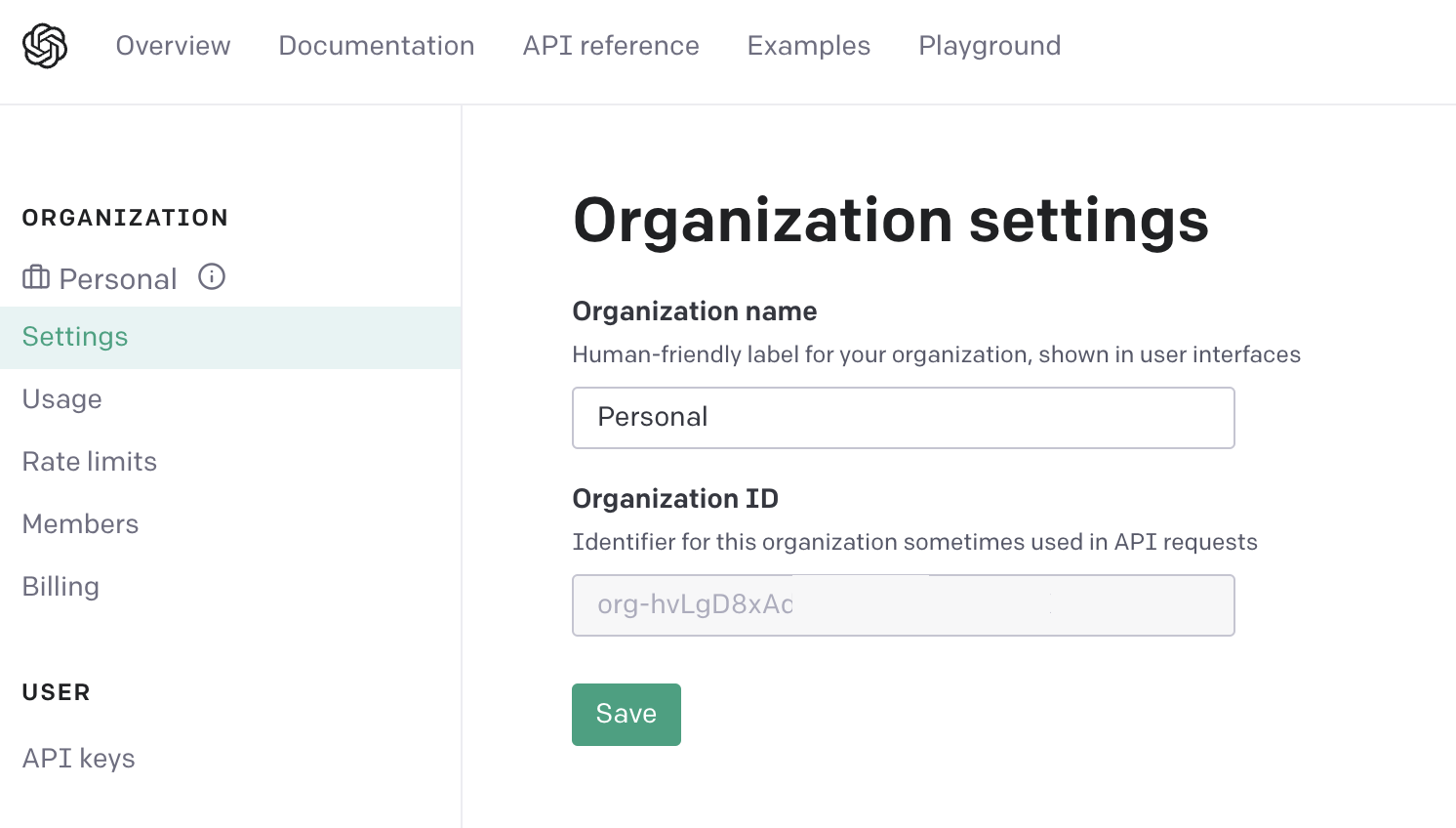

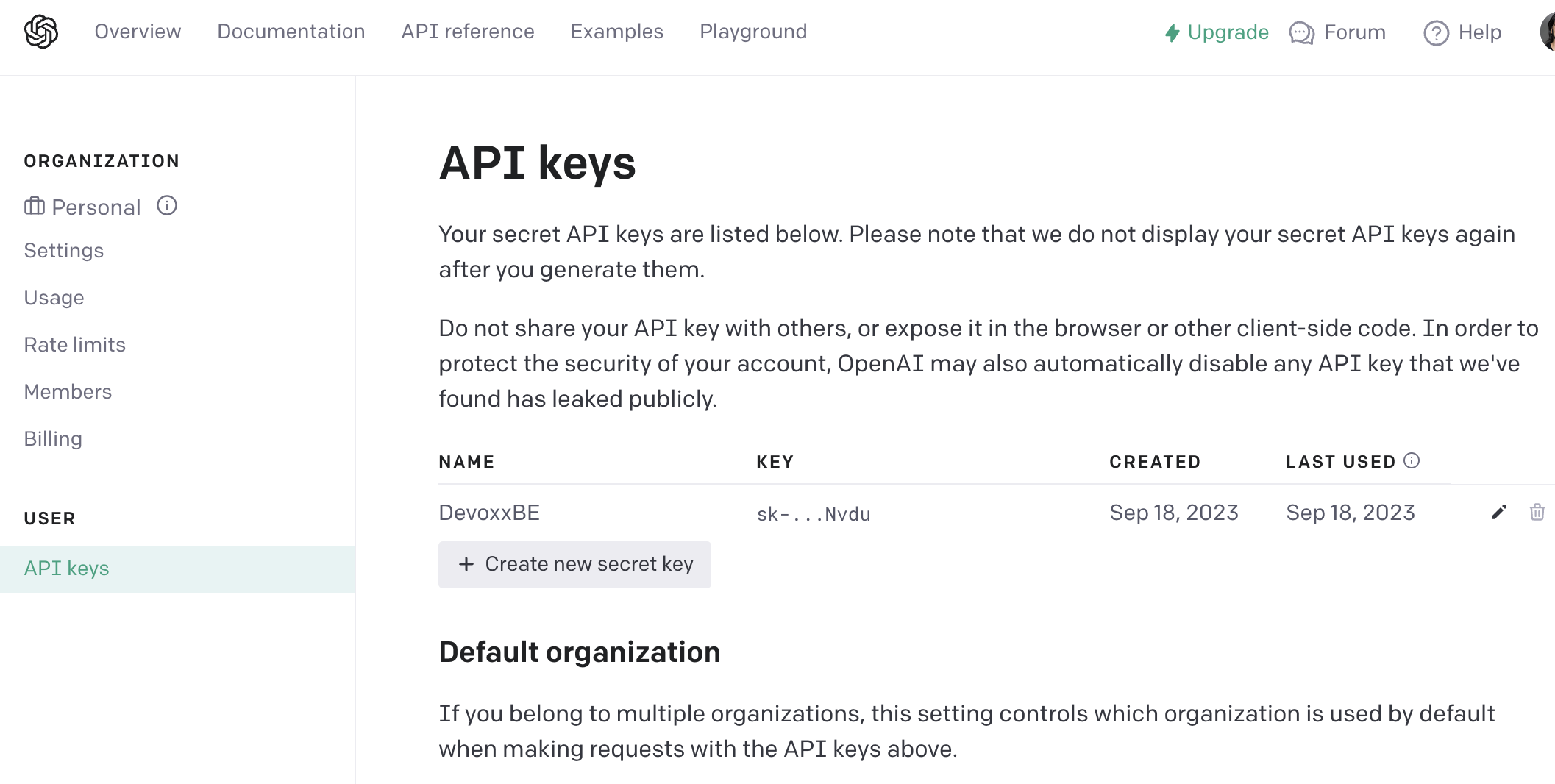

First, you need to have an OpenAI subscription and then generate an API key. Then you need to ge the organisation id and key to invoke the API. To get the organisation id, go to OpenAI organisation settings and copy the organisation id.:

Then, to get the API key, go to OpenAI API keys and copy the API key:

You will have to credit your OpenAI account with some money to be able to use the API.

Azure OpenAI

If you already have an Azure subscription, you can use it to create an Azure OpenAI resource. Otherwise, you can create a free Azure subscription and use it to create an Azure OpenAI resource. Then, you need to use the Azure OpenAI service to generate the API key and endpoint URL. You can either do that using the Azure Portal or the Azure CLI.

The easiest being use the Azure CLI, we recommend that you install it and use it to create the Azure OpenAI resource and generate the API key and endpoint URL. Once Azure CLI is installed and you have your Azure subscription, sign in to your Azure account:

az login|

If you have several Azure subscription, make sure you are using the right one. For that, you can execute the following command to list all your subscriptions and set the one you want to use: |

Then, execute the following command to create the Azure OpenAI resources:

echo "Setting up environment variables..."

echo "----------------------------------"

PROJECT="<give-your-project-a-name>"

RESOURCE_GROUP="rg-$PROJECT"

LOCATION="eastus"

TAG="$PROJECT"

COGNITIVE_SERVICE="cognit-$PROJECT"

COGNITIVE_DEPLOYMENT="gpt35turbo"

echo "Creating the resource group..."

echo "------------------------------"

az group create \

--name "$RESOURCE_GROUP" \

--location "$LOCATION" \

--tags system="$TAG"

echo "Creating the Cognitive Service..."

echo "---------------------------------"

az cognitiveservices account create \

--name "$COGNITIVE_SERVICE" \

--resource-group "$RESOURCE_GROUP" \

--location "$LOCATION" \

--custom-domain "$COGNITIVE_SERVICE" \

--tags system="$TAG" \

--kind "OpenAI" \

--sku "S0"

echo "Deploying the model..."

echo "----------------------"

az cognitiveservices account deployment create \

--name "$COGNITIVE_SERVICE" \

--resource-group "$RESOURCE_GROUP" \

--deployment-name "$COGNITIVE_DEPLOYMENT" \

--model-name "gpt-35-turbo" \

--model-version "0301" \

--model-format "OpenAI" \

--sku-capacity 1 \

--sku-name "Standard"This script will create the Azure OpenAI resource and deploy the model. Once the script has executed, you can use the following command to get the API key and endpoint URL. You will need these properties later one when you will configure the Narration microservice:

echo "Storing the key and endpoint in environment variables..."

echo "--------------------------------------------------------"

AZUREOPENAI_KEY=$(

az cognitiveservices account keys list \

--name "$COGNITIVE_SERVICE" \

--resource-group "$RESOURCE_GROUP" \

| jq -r .key1

)

AZUREOPENAI_ENDPOINT=$(

az cognitiveservices account show \

--name "$COGNITIVE_SERVICE" \

--resource-group "$RESOURCE_GROUP" \

| jq -r .properties.endpoint

)

# Set the properties

echo "--------------------------------------------------"

echo "The following properties can be copied to either the rest-narration/src/main/resources/conf.properties or to the ~/.sk/conf.properties file:"

echo "--------------------------------------------------"

echo "client.azureopenai.key=$AZUREOPENAI_KEY"

echo "client.azureopenai.endpoint=$AZUREOPENAI_ENDPOINT"

echo "client.azureopenai.deploymentname=$COGNITIVE_DEPLOYMENT"Once you’ve finished the workshop, remember to delete the Azure OpenAI resources to avoid being charged for it:

# Clean up

az group delete \

--name "$RESOURCE_GROUP" \

--yes

az cognitiveservices account purge \

--name "$COGNITIVE_SERVICE" \

--resource-group "$RESOURCE_GROUP" \

--location "$LOCATION"

az cognitiveservices account delete \

--name "$COGNITIVE_SERVICE" \

--resource-group "$RESOURCE_GROUP"WSL

Windows Subsystem for Linux (WSL) lets developers run a GNU/Linux environment — including most command-line tools, utilities, and applications — directly on Windows, unmodified, without the overhead of a traditional virtual machine or dual-boot setup.

|

If you are using Windows, it is recommended to install WSL as all the commands use bash. |

Installing WSL

You can install everything you need to run Windows Subsystem for Linux (WSL) by entering this command in an administrator PowerShell or Windows Command Prompt and then restarting your machine:

wsl --installThis command will enable the required optional components, download the latest Linux kernel, set WSL 2 as your default, and install a Linux distribution for you (Ubuntu by default).

The first time you launch a newly installed Linux distribution, a console window will open and you’ll be asked to wait for files to de-compress and be stored on your machine. All future launches should take less than a second.

Azure CLI

The Azure command-line interface (Azure CLI) is a set of commands used to create and manage Azure resources. The Azure CLI is available across Azure services and is designed to get you working quickly with Azure, with an emphasis on automation. For this workshop you need to have Azure CLI installed locally on your machine.

Installing Azure CLI

The Azure CLI is available to install in Windows, macOS and Linux environments. It can also be run in a Docker container and Azure Cloud Shell. On Mac OS X, the easiest way to install Azure CLI is by executing the following command:

brew install azure-cliChecking for Azure CLI Installation

Once the installation is complete, you can execute a few commands to be sure the Azure CLI is correctly installed:

az version

az --versionSome Azure CLI Commands

Azure CLI is a command-line utility where you can use several parameters and options to create, query, or delete Azure resources. To get some help on the commands, you can type:

az help

az --help

az vm --help

az vm disk --help

az vm disk attach --helpRecap

Before going further, make sure the following commands work on your machine.

java -version

$GRAALVM_HOME/bin/native-image --version

curl --version

docker version

docker compose version

az --versionWe have not mentioned cURL up to now, because most modern operating systems ship with it pre-installed. If your system is missing cURL, the appendix includes instructions for installing cURL.

And also make sure to have your OpenAI or Azure credentials ready if you want to develop the "Narration" microservice.

Preparing for the Workshop

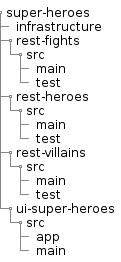

In this workshop, you will be developing an application dealing with Super Heroes (and Super-Villains 🦹) as well as Quarkus extensions. The code will be separated into two different directories:

Download the workshop scaffolding

We’ve done some of the layout for you. First, download the zip file https://raw.githubusercontent.com/quarkusio/quarkus-workshops/refs/heads/main/quarkus-workshop-super-heroes/dist/quarkus-super-heroes-workshop.zip, and unzip it wherever you want. This zip file contains some of the code of the workshop, along with assets you will need to complete it.

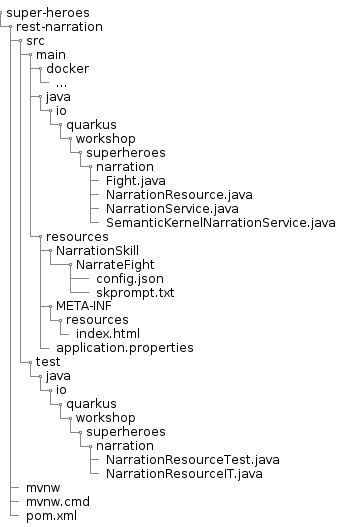

Super Heroes Application

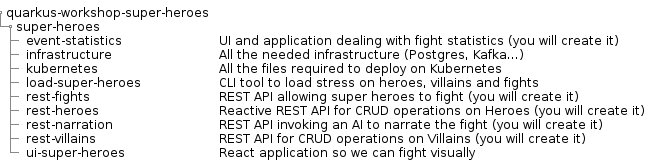

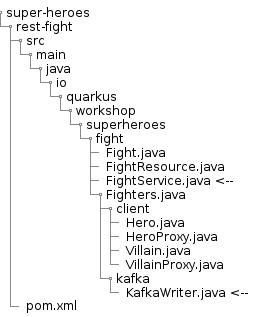

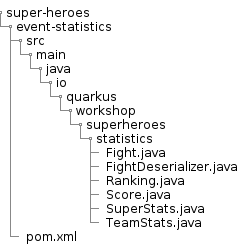

Under the super-heroes directory you will find the entire Super Hero application spread throughout a set of subdirectories, each one containing a microservice or some tooling.

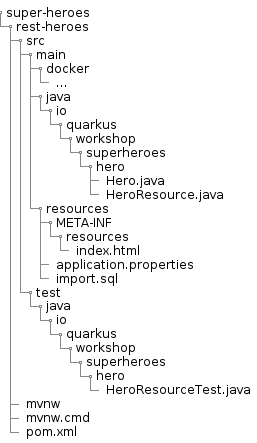

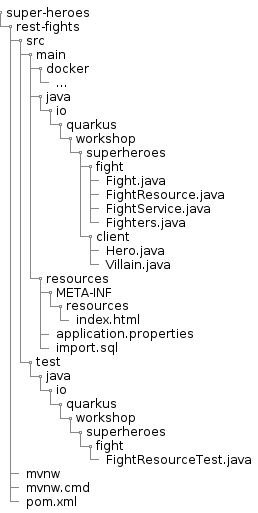

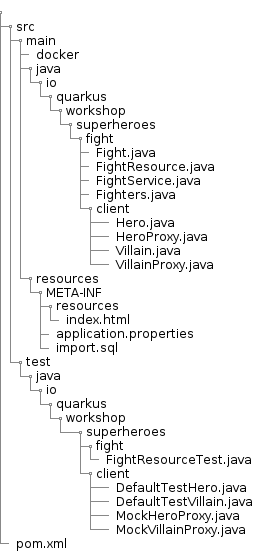

The final structure will be the following (don’t worry if you don’t have all this yet, this is what we’re aiming towards):

Most of these subdirectories are Maven projects and follow the Maven directory structure:

Checking Ports

During this workshop, we will use several ports.

Use lsof to make sure the following ports are free, so you don’t run into any conflicts.

lsof -i tcp:8080 # UI

lsof -i tcp:8082 # Fight REST API

lsof -i tcp:8083 # Hero REST API

lsof -i tcp:8084 # Villain REST API

lsof -i tcp:8085 # Statistics REST API

lsof -i tcp:8086 # Narration REST API

lsof -i tcp:5432 # Postgres

lsof -i tcp:2181 # Zookeeper

lsof -i tcp:9092 # KafkaReady?

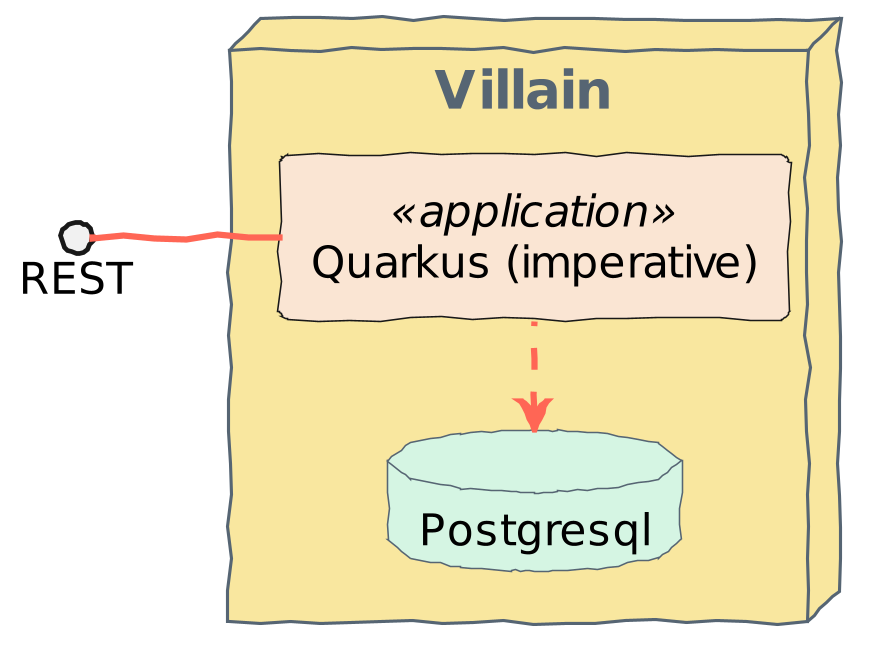

After the prerequisites have been installed and the environment has been checked, it’s now time to write some code!

Creating a classical REST/HTTP Microservice

At the heart of the Super-Hero application comes Villains! You can’t have superheroes without super-villains.

We need to expose a REST API allowing CRUD operations on villains. This microservice is, let’s say, a classical REST microservice. It uses HTTP to expose a REST API and internally store data into a database. It’s using the imperative development model.

The fight microservice will use this service.

In the following sections, you learn:

-

How to create a new Quarkus application

-

How to implement REST API using JAX-RS and the Quarkus REST extension [7]

-

How to compose your application using beans

-

How to access your database using Hibernate ORM with Panache

-

How to use transactions

-

How to enable OpenAPI and Swagger-UI

| This service is exposed on the port 8084. |

But first, let’s describe our service. The Super-Villains microservice manages villains with their names, powers, and so on. The REST API allows adding, removing, listing, and picking a random villain from the stored set. Nothing outstanding but a good first step to discover Quarkus.

Villain Microservice

First thing first, we need a project. That’s what you are going to see in this section.

Bootstrapping the Villain REST Endpoint

The easiest way to create a new Quarkus project is to use the Quarkus Maven plugin.

Open a terminal and run the following command under the quarkus-workshop-super-heroes/super-heroes directory:

./mvnw io.quarkus:quarkus-maven-plugin:3.27.0:create \

-DplatformVersion=3.27.0 \

-DprojectGroupId=io.quarkus.workshop.super-heroes \

-DprojectArtifactId=rest-villains \

-DclassName="io.quarkus.workshop.superheroes.villain.VillainResource" \

-Dpath="api/villains" \

-Dextensions="rest-jackson"|

Notice that we scaffold the project with Quarkus REST ( |

Remember that mvnw is the maven wrapper. It behaves like mvn,

but allows a project’s build dependencies to be encapsulated.

The last line selects the extension we want to use.

As a start, we only select rest-jackson, which will also import rest.

If you want your IDE to manage this new Maven project, you can declare it in the parent POM (quarkus-super-heroes/pom.xml) by adding this new module in the <modules> section:

<module>super-heroes/rest-villains</module>|

Prefer a Web UI?

Instead of the Maven command, you can use https://code.quarkus.io and select the |

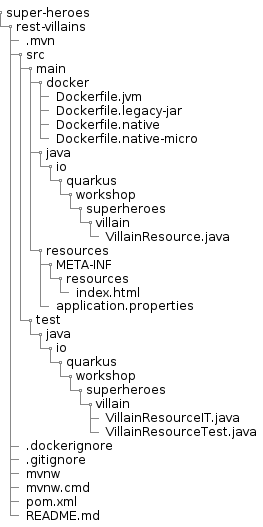

Directory Structure

Once you bootstrap the project, you get the following directory structure with a few Java classes and other artifacts :

The Maven archetype generates the following rest-villains sub-directory:

-

The Maven structure with a

pom.xml -

An

io.quarkus.workshop.superheroes.villain.VillainResourceresource exposed on/api/villains -

An associated unit test

VillainResourceTest -

The landing page

index.htmlthat is accessible on http://localhost:8080 after starting the application -

Example

Dockerfilefiles for both native and JVM modes insrc/main/docker -

The

application.propertiesconfiguration file

Once generated, look at the pom.xml.

You will find the import of the Quarkus BOM, allowing you to omit the version on the different Quarkus dependencies.

In addition, you can see the quarkus-maven-plugin responsible for the packaging of the application and providing the development mode.

<project>

<!-- ... -->

<properties>

<compiler-plugin.version>3.11.0</compiler-plugin.version>

<maven.compiler.release>17</maven.compiler.release>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>

<quarkus.platform.artifact-id>quarkus-bom</quarkus.platform.artifact-id>

<quarkus.platform.group-id>io.quarkus</quarkus.platform.group-id>

<quarkus.platform.version>3.27.0</quarkus.platform.version>

<skipITs>true</skipITs>

<surefire-plugin.version>3.5.3</surefire-plugin.version>

</properties>

<!-- ... -->

<dependencyManagement>

<dependencies>

<dependency>

<groupId>${quarkus.platform.group-id}</groupId>

<artifactId>${quarkus.platform.artifact-id}</artifactId>

<version>${quarkus.platform.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

<!-- ... -->

<build>

<plugins>

<plugin>

<groupId>${quarkus.platform.group-id}</groupId>

<artifactId>quarkus-maven-plugin</artifactId>

<version>${quarkus.platform.version}</version>

<extensions>true</extensions>

<executions>

<execution>

<goals>

<goal>build</goal>

<goal>generate-code</goal>

<goal>generate-code-tests</goal>

</goals>

</execution>

</executions>

</plugin>

<!-- ... -->

</plugins>

</build>

<!-- ... -->

</project>If we focus on the dependencies section, you can see the extensions allowing the development of REST applications (rest and rest-jackson)

<dependencies>

<dependency>

<groupId>io.quarkus</groupId>

<artifactId>quarkus-arc</artifactId>

</dependency>

<dependency>

<groupId>io.quarkus</groupId>

<artifactId>quarkus-rest</artifactId>

</dependency>

<dependency>

<groupId>io.quarkus</groupId>

<artifactId>quarkus-rest-jackson</artifactId>

</dependency>

<!-- ... -->

</dependencies>-

quarkus-arcis the dependency injection framework integrated into Quarkus. It’s designed to perform build-time injections. We will see later why this is essential for Quarkus. -

quarkus-restis the framework we will use to implement our REST API. It uses JAX-RS annotations such as@Path,@GET… -

quarkus-rest-jacksonadds JSON object mapping capabilities to Quarkus REST.

The Villain Resource

During the project creation, the VillainResource.java file has been created with the following content:

package io.quarkus.workshop.superheroes.villain;

import jakarta.ws.rs.GET;

import jakarta.ws.rs.Path;

import jakarta.ws.rs.Produces;

import jakarta.ws.rs.core.MediaType;

@Path("/api/villains")

public class VillainResource {

@GET

@Produces(MediaType.TEXT_PLAIN)

public String hello() {

return "Hello from Quarkus REST";

}

}It’s a very simple REST endpoint returning a "Hello World" to requests on /api/villains.

It uses JAX-RS annotations:

-

@Pathindicates the HTTP path handled by the resource, -

@GETindicates that the method should be called when receiving aGETrequest on/api/villains.

Methods can also have their own @Path annotation suffixed to the class one (if any).

|

Running the Application

Now we are ready to run our application.

Use: ./mvnw quarkus:dev in the rest-villains directory:

$ ./mvnw quarkus:dev

[INFO] Scanning for projects...

[INFO]

[INFO] -----------< io.quarkus.workshop.super-heroes:rest-villains >-----------

[INFO] Building rest-villains 1.0.0-SNAPSHOT

[INFO] --------------------------------[ jar ]---------------------------------

[INFO]

[INFO] --- quarkus-maven-plugin:3.27.0:dev (default-cli) @ rest-villains ---

[INFO] Invoking resources:3.27.0:resources (default-resources) @ rest-villains

[INFO] Copying 2 resources from src/main/resources to target/classes

[INFO] Invoking quarkus:3.27.0:generate-code (default) @ rest-villains

[INFO] Invoking compiler:3.11.0:compile (default-compile) @ rest-villains

[INFO] Nothing to compile - all classes are up to date

[INFO] Invoking resources:3.27.0:testResources (default-testResources) @ rest-villains

[INFO] skip non existing resourceDirectory /Users/agoncal/Documents/Code/Temp/quarkus-super-heroes/super-heroes/rest-villains/src/test/resources

[INFO] Invoking quarkus:3.27.0:generate-code-tests (default) @ rest-villains

[INFO] Invoking compiler:3.11.0:testCompile (default-testCompile) @ rest-villains

[INFO] Nothing to compile - all classes are up to date

Listening for transport dt_socket at address: 5005

__ ____ __ _____ ___ __ ____ ______

--/ __ \/ / / / _ | / _ \/ //_/ / / / __/

-/ /_/ / /_/ / __ |/ , _/ ,< / /_/ /\ \

--\___\_\____/_/ |_/_/|_/_/|_|\____/___/

2022-11-15 14:05:29,414 INFO [io.quarkus] (Quarkus Main Thread) rest-villains 1.0.0-SNAPSHOT on JVM (powered by Quarkus 3.27.0) started in 1.093s. Listening on: http://localhost:8080

2022-11-15 14:05:29,423 INFO [io.quarkus] (Quarkus Main Thread) Profile dev activated. Live Coding activated.

2022-11-15 14:05:29,423 INFO [io.quarkus] (Quarkus Main Thread) Installed features: [cdi, rest, rest-jackson, smallrye-context-propagation, vertx]

Tests paused

Press [r] to resume testing, [o] Toggle test output, [:] for the terminal, [h] for more options>Then check that the endpoint returns hello as expected:

curl http://localhost:8080/api/villainsYou should see the following

Hello from Quarkus RESTAlternatively, you can open http://localhost:8080/api/villains in your browser.

Development Mode

quarkus:dev runs Quarkus in development mode.

It enables hot deployment with background compilation, which means that when you modify your Java files or your resource files and invoke a REST endpoint (i.e., cURL command or refresh your browser), these changes will automatically take effect.

It works too for resource files like the configuration property and HTML files.

Refreshing the browser triggers a scan of the workspace, and if any changes are detected, the Java files are recompiled and the application is redeployed; your request is then serviced by the redeployed application.

If there are any issues with compilation or deployment an error page will let you know.

The development mode also allows debugging and listens for a debugger on port 5005.

If you want to wait for the debugger to attach before running, you can pass -Dsuspend=true on the command line.

If you don’t want the debugger at all, you can use -Ddebug=false.

Alright, time to change some code.

Open your favorite IDE and import the project.

To check that the hot reload is working, update the VillainResource.hello() method by returning the String "Hello Villain Resource".

Now, execute the cURL command again:

curl http://localhost:8080/api/villainsThe output has changed ("Hello Villain Resource") without you having to stop and restart Quarkus!

Testing the Application

All right, so far, so good, but wouldn’t it be better with a few tests, just in case.

In the generated pom.xml file, you can see two test dependencies:

<dependencies>

<!-- ... -->

<dependency>

<groupId>io.quarkus</groupId>

<artifactId>quarkus-junit5</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>io.rest-assured</groupId>

<artifactId>rest-assured</artifactId>

<scope>test</scope>

</dependency>

</dependencies>So, we will use Junit 5 combined with RESTAssured, which eases the testing of REST applications.

If you look at the maven-surefire-plugin configuration in the pom.xml, you will see that we set the java.util.logging system property to ensure tests will use the correct method log manager.

<plugin>

<artifactId>maven-surefire-plugin</artifactId>

<version>${surefire-plugin.version}</version>

<configuration>

<systemPropertyVariables>

<java.util.logging.manager>org.jboss.logmanager.LogManager</java.util.logging.manager>

<maven.home>${maven.home}</maven.home>

</systemPropertyVariables>

</configuration>

</plugin>The generated project contains a simple test in VillainResourceTest.java.

package io.quarkus.workshop.superheroes.villain;

import io.quarkus.test.junit.QuarkusTest;

import org.junit.jupiter.api.Test;

import static io.restassured.RestAssured.given;

import static org.hamcrest.CoreMatchers.is;

@QuarkusTest

public class VillainResourceTest {

@Test

public void testHelloEndpoint() {

given()

.when().get("/api/villains")

.then()

.statusCode(200)

.body(is("Hello from Quarkus REST"));

}

}By using the QuarkusTest runner, the VillainResourceTest class instructs JUnit to start the application before the tests.

Then, the testHelloEndpoint method checks the HTTP response status code and content.

Notice that these tests use RestAssured, but feel free to use your favorite library.[8]

|

Quarkus provides a RestAssured integration that updates the default port used by RestAssured before the tests are run.

So in your RestAssured tests, you don’t have to specify the default test port 8081 used by Quarkus.

You can also configure the ports used by tests by configuring the |

In the terminal running the application in dev mode (./mvnw quarkus:dev), you should see at the bottom:

Tests paused

Press [r] to resume testing, [o] Toggle test output, [:] for the terminal, [h] for more options>Hit the r key, and watch Quarkus execute your tests automatically and even continuously.

Unfortunately, this first run didn’t end well:

2022-11-15 14:13:17,924 ERROR [io.qua.test] (Test runner thread) ==================== TEST REPORT #1 ====================

2022-11-15 14:13:17,925 ERROR [io.qua.test] (Test runner thread) Test VillainResourceTest#testHelloEndpoint() failed

: java.lang.AssertionError: 1 expectation failed.

Response body doesn't match expectation.

Expected: is "Hello from Quarkus REST"

Actual: Hello Villain Resource

at io.restassured.internal.ValidatableResponseImpl.body(ValidatableResponseImpl.groovy)

at io.quarkus.workshop.superheroes.villain.VillainResourceTest.testHelloEndpoint(VillainResourceTest.java:18)

2022-11-15 14:13:17,927 ERROR [io.qua.test] (Test runner thread) >>>>>>>>>>>>>>>>>>>> Summary: <<<<<<<<<<<<<<<<<<<<

io.quarkus.workshop.superheroes.villain.VillainResourceTest#testHelloEndpoint(VillainResourceTest.java:18) VillainResourceTest#testHelloEndpoint() 1 expectation failed.

Response body doesn't match expectation.

Expected: is "Hello from Quarkus REST"

Actual: Hello Villain Resource

2022-11-15 14:13:17,929 ERROR [io.qua.test] (Test runner thread) >>>>>>>>>>>>>>>>>>>> 1 TEST FAILED <<<<<<<<<<<<<<<<<<<<

2022-11-15 14:13:18,155 ERROR [io.qua.test] (Test runner thread) ==================== TEST REPORT #2 ====================

2022-11-15 14:13:18,155 ERROR [io.qua.test] (Test runner thread) Test VillainResourceTest#testHelloEndpoint() failed

: java.lang.AssertionError: 1 expectation failed.

Response body doesn't match expectation.

Expected: is "Hello from Quarkus REST"

Actual: Hello Villain Resource

at io.restassured.internal.ValidatableResponseImpl.body(ValidatableResponseImpl.groovy)

at io.quarkus.workshop.superheroes.villain.VillainResourceTest.testHelloEndpoint(VillainResourceTest.java:18)

2022-11-15 14:13:18,156 ERROR [io.qua.test] (Test runner thread) >>>>>>>>>>>>>>>>>>>> Summary: <<<<<<<<<<<<<<<<<<<<

io.quarkus.workshop.superheroes.villain.VillainResourceTest#testHelloEndpoint(VillainResourceTest.java:18) VillainResourceTest#testHelloEndpoint() 1 expectation failed.

Response body doesn't match expectation.

Expected: is "Hello from Quarkus REST"

Actual: Hello Villain Resource

2022-11-15 14:13:18,157 ERROR [io.qua.test] (Test runner thread) >>>>>>>>>>>>>>>>>>>> 1 TEST FAILED <<<<<<<<<<<<<<<<<<<<It fails! It’s expected, you changed the output of VillainResource.hello() earlier.

Adjust the test body condition accordingly:

package io.quarkus.workshop.superheroes.villain;

import io.quarkus.test.junit.QuarkusTest;

import org.junit.jupiter.api.Test;

import static io.restassured.RestAssured.given;

import static org.hamcrest.CoreMatchers.is;

@QuarkusTest

public class VillainResourceTest {

@Test

public void testHelloEndpoint() {

given()

.when().get("/api/villains")

.then()

.statusCode(200)

.body(is("Hello Villain Resource"));

}

}Save the file, and watch the dev mode automatically rerunning your test (and passing)

--

2022-11-15 14:15:22,997 INFO [io.qua.test] (Test runner thread) All tests are now passing

--

All 1 test is passing (0 skipped), 1 test was run in 186ms. Tests completed at 14:15:23.

Press [r] to re-run, [o] Toggle test output, [:] for the terminal, [h] for more options>Continuous testing is a big part of Quarkus development. Quarkus detects and runs the tests for you.

You can also run the tests from a terminal using:

./mvnw testPackaging and Running the Application

The application is packaged using the ./mvnw package command (it also runs the tests).

That command generates:

-

target/rest-villains-1.0.0-SNAPSHOT.jar: containing just the classes and resources of the projects, it’s the regular artifact produced by the Maven build (it is not an executable jar); -

target/quarkus-app/: this directory uses the fast jar packaging. It contains an executable jar (quarkus-run.jar), and all the dependencies (structured intoapp,libandquarkus).

This fast jar takes advantage of the build-time principle of Quarkus (we discuss it soon) to improve the application performances and which can be easily transposed to container layers.

Stop the application running in dev mode (by hitting q or CTRL+C), and run the application using: java -jar target/quarkus-app/quarkus-run.jar.

|

Before running the application, don’t forget to stop the hot reload mode (hit CTRL+C), or you will have a port conflict. |

|

Troubleshooting

You might come across the following error while developing: If this is the case, it’s just a matter of adding the node name of your machine to the /etc/hosts. For that, first, get the name of your node with the following command: Then |

In another terminal, check that the application runs using:

curl http://localhost:8080/api/villains

Hello Villain ResourceTransactions and ORM

The Villain API’s role is to allow CRUD operations on Super Villains. In this module we will create a Villain entity and persist/update/delete/retrieve it from a PostgreSQL database in a transactional way.

This microservice uses an imperative/classic execution model. Interactions with the database will uses Hibernate ORM and will block until the responses from the database are retrieved.

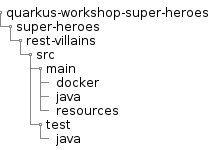

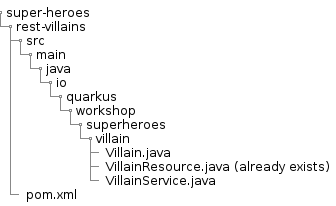

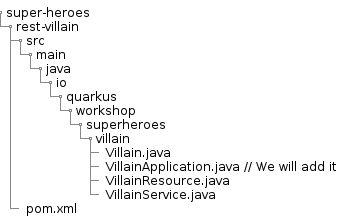

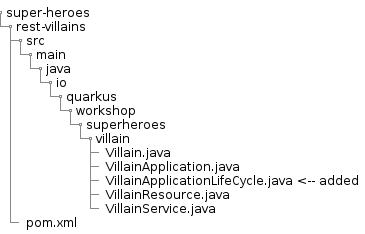

Directory Structure

In this module we will add extra classes to the Villain API project. You will end-up with the following directory structure:

Installing the PostgreSQL Dependency, Hibernate with Panache and Hibernate Validator

This microservice:

-

Interacts with a PostgreSQL database - so it needs a driver

-

Uses Hibernate with Panache - needs the extension providing it

-

Validates payloads and entities - needs a validator

-

Consumes and produces JSON - needs a JSON mapper

Hibernate ORM is the de-facto JPA implementation and offers you the full breadth of an Object Relational Mapper. It makes complex mappings possible, but it does not make simple and common mappings trivial. Hibernate ORM with Panache focuses on making your entities trivial and fun to write in Quarkus.[9]

Because JPA and Bean Validation work well together, we will use Bean Validation to constrain our business model.

To add the required dependencies, just run the following command under the super-heroes/rest-villains directory:

./mvnw quarkus:add-extension -Dextensions="jdbc-postgresql,hibernate-orm-panache,hibernate-validator"

No need to add an extension for JSON, we already included rest-jackson.

|

This will add the following dependencies in the pom.xml file:

<dependencies>

<!-- ... -->

<dependency>

<groupId>io.quarkus</groupId>

<artifactId>quarkus-hibernate-orm-panache</artifactId>

</dependency>

<dependency>

<groupId>io.quarkus</groupId>

<artifactId>quarkus-hibernate-validator</artifactId>

</dependency>

<dependency>

<groupId>io.quarkus</groupId>

<artifactId>quarkus-jdbc-postgresql</artifactId>

</dependency>

<!-- ... -->

</dependencies>From now on, you can choose to either edit your pom directly or use the quarkus:add-extension command.

Villain Entity

To define a Panache entity, simply extend PanacheEntity, annotate it with @Entity and add your columns as public fields (no need to have getters and setters).

The Villain entity should look like this:

package io.quarkus.workshop.superheroes.villain;

import io.quarkus.hibernate.orm.panache.PanacheEntity;

import jakarta.persistence.Column;

import jakarta.persistence.Entity;

import jakarta.validation.constraints.Min;

import jakarta.validation.constraints.NotNull;

import jakarta.validation.constraints.Size;

import java.util.Random;

@Entity

public class Villain extends PanacheEntity {

@NotNull

@Size(min = 3, max = 50)

public String name;

public String otherName;

@NotNull

@Min(1)

public int level;

public String picture;

@Column(columnDefinition = "TEXT")

public String powers;

@Override

public String toString() {

return "Villain{" +

"id=" + id +

", name='" + name + '\'' +

", otherName='" + otherName + '\'' +

", level=" + level +

", picture='" + picture + '\'' +

", powers='" + powers + '\'' +

'}';

}

}Notice that you can put all your JPA column annotations and Bean Validation constraint annotations on the public fields.

Adding Operations

Thanks to Panache, once you have written the Villain entity, here are the most common operations you will be able to do:

// creating a villain

Villain villain = new Villain();

villain.name = "Lex Luthor";

villain.level = 9;

// persist it

villain.persist();

// getting a list of all Villain entities

List<Villain> villains = Villain.listAll();

// finding a specific villain by ID

villain = Villain.findById(id);

// counting all villains

long countAll = Villain.count();But we are missing a business method: we need to return a random villain.

For that it’s just a matter to add the following method to our Villain.java entity:

public static Villain findRandom() {

long countVillains = count();

Random random = new Random();

int randomVillain = random.nextInt((int) countVillains);

return findAll().page(randomVillain, 1).firstResult();

}|

You would need to add the following import statement if not done automatically by your IDE |

Picking a random villain is achieved as follows:

-

Gets the number of villains stored in the database (

count()) -

Picks a random number between 0 and

count() -

Asks Hibernate with Panache to find all villains in a paginated way and return the random page containing 1 villain.

Configuring Hibernate

Quarkus development mode is really useful for applications that mix front end or services and database access.

We use quarkus.hibernate-orm.database.generation=drop-and-create in conjunction with import.sql so every change to your app and in particular to your entities, the database schema will be properly recreated and your data (stored in import.sql) will be used to repopulate it from scratch.

This is best to perfectly control your environment and works magic with Quarkus live reload mode:

your entity changes or any change to your import.sql is immediately picked up and the schema updated without restarting the application!

For that, make sure to have the following configuration in your application.properties (located in src/main/resources):

# drop and create the database at startup (use `update` to only update the schema)

quarkus.hibernate-orm.database.generation=drop-and-createVillain Service

To manipulate the Villain entity we will develop a transactional VillainService class.

The idea is to wrap methods modifying the database (e.g. entity.persist()) within a transaction.

Marking a CDI bean method @Transactional will do that for you and make that method a transaction boundary.

@Transactional can be used to control transaction boundaries on any bean at the method level or at the class level to ensure every method is transactional.

You can control whether and how the transaction is started with parameters on @Transactional:

-

@Transactional(REQUIRED)(default): starts a transaction if none was started, stays with the existing one otherwise. -

@Transactional(REQUIRES_NEW): starts a transaction if none was started ; if an existing one was started, suspends it and starts a new one for the boundary of that method. -

@Transactional(MANDATORY): fails if no transaction was started ; works within the existing transaction otherwise. -

@Transactional(SUPPORTS): if a transaction was started, joins it ; otherwise works with no transaction. -

@Transactional(NOT_SUPPORTED): if a transaction was started, suspends it and works with no transaction for the boundary of the method ; otherwise works with no transaction. -

@Transactional(NEVER): if a transaction was started, raises an exception ; otherwise works with no transaction.

Creates a new VillainService.java file in the same package with the following content:

package io.quarkus.workshop.superheroes.villain;

import static jakarta.transaction.Transactional.TxType.REQUIRED;

import static jakarta.transaction.Transactional.TxType.SUPPORTS;

import java.util.List;

import jakarta.enterprise.context.ApplicationScoped;

import jakarta.transaction.Transactional;

import jakarta.validation.Valid;

import org.eclipse.microprofile.config.inject.ConfigProperty;

@ApplicationScoped

@Transactional(REQUIRED)

public class VillainService {

@Transactional(SUPPORTS)

public List<Villain> findAllVillains() {

return Villain.listAll();

}

@Transactional(SUPPORTS)

public Villain findVillainById(Long id) {

return Villain.findById(id);

}

@Transactional(SUPPORTS)

public Villain findRandomVillain() {

Villain randomVillain = null;

while (randomVillain == null) {

randomVillain = Villain.findRandom();

}

return randomVillain;

}

public Villain persistVillain(@Valid Villain villain) {

villain.persist();

return villain;

}

public Villain updateVillain(@Valid Villain villain) {

Villain entity = Villain.findById(villain.id);

entity.name = villain.name;

entity.otherName = villain.otherName;

entity.level = villain.level;

entity.picture = villain.picture;

entity.powers = villain.powers;

return entity;

}

public void deleteVillain(Long id) {

Villain.deleteById(id);

}

}The @ApplicationScoped annotation declares a bean.

The other component of the application can access this bean.

Arc, the dependency injection framework integrated in Quarkus, handles the creation and the access to this class.

Notice that both methods that persist and update a villain, pass a Villain object as a parameter.

Thanks to the Bean Validation’s @Valid annotation, the Villain object will be checked to see if it’s valid or not.

If it’s not, the transaction will be rolled back.

Accessing a database in dev mode

Our project now requires a connection to a PostgreSQL database. In dev mode, no need to start a database or configure anything. Quarkus does that for us (just make sure you have Docker up and running).

Start the application in dev mode with ./mvnw quarkus:dev.

In the log, you will see the following:

2021-09-21 15:58:44,640 INFO [org.tes.doc.DockerClientProviderStrategy] (build-38) Loaded org.testcontainers.dockerclient.UnixSocketClientProviderStrategy from ~/.testcontainers.properties, will try it first

2021-09-21 15:58:45,068 INFO [org.tes.doc.DockerClientProviderStrategy] (build-38) Found Docker environment with local Unix socket (unix:///var/run/docker.sock)

2021-09-21 15:58:45,070 INFO [org.tes.DockerClientFactory] (build-38) Docker host IP address is localhost

2021-09-21 15:58:45,116 INFO [org.tes.DockerClientFactory] (build-38) Connected to docker:

Server Version: 20.10.8

API Version: 1.41

Operating System: Docker Desktop

Total Memory: 5943 MB

2021-09-21 15:58:45,118 INFO [org.tes.uti.ImageNameSubstitutor] (build-38) Image name substitution will be performed by: DefaultImageNameSubstitutor (composite of 'ConfigurationFileImageNameSubstitutor' and 'PrefixingImageNameSubstitutor')

2021-09-21 15:58:45,453 INFO [org.tes.uti.RegistryAuthLocator] (build-38) Credential helper/store (docker-credential-desktop) does not have credentials for index.docker.io

2021-09-21 15:58:45,957 INFO [org.tes.DockerClientFactory] (build-38) Ryuk started - will monitor and terminate Testcontainers containers on JVM exit

2021-09-21 15:58:45,958 INFO [org.tes.DockerClientFactory] (build-38) Checking the system...

2021-09-21 15:58:45,958 INFO [org.tes.DockerClientFactory] (build-38) ✔︎ Docker server version should be at least 1.6.0

2021-09-21 15:58:46,083 INFO [org.tes.DockerClientFactory] (build-38) ✔︎ Docker environment should have more than 2GB free disk space

2021-09-21 15:58:46,143 INFO [🐳 .2]] (build-38) Creating container for image: postgres:13.2

2021-09-21 15:58:46,217 INFO [🐳 .2]] (build-38) Starting container with ID: a7fd54795185ab17baf487388c1e3280fdfea3f6ef8670c0336d367dba3e1d9e

2021-09-21 15:58:46,545 INFO [🐳 .2]] (build-38) Container postgres:13.2 is starting: a7fd54795185ab17baf487388c1e3280fdfea3f6ef8670c0336d367dba3e1d9e

2021-09-21 15:58:48,043 INFO [🐳 .2]] (build-38) Container postgres:13.2 started in PT1.959377S

2021-09-21 15:58:48,044 INFO [io.qua.dev.pos.dep.PostgresqlDevServicesProcessor] (build-38) Dev Services for the default datasource (postgresql) startedQuarkus detects the need for a database and starts one using a Docker container. It automatically configures the application, which means we are good to go and implement our REST API.

|

If the application fails to start properly and the logs contain something like try launching the application again

after having the |

VillainResource Endpoint

The VillainResource was bootstrapped with only one method hello().

We need to add extra methods that will allow CRUD operations on villains.

Here are the new methods to add to the VillainResource class:

package io.quarkus.workshop.superheroes.villain;

import org.jboss.logging.Logger;

import org.jboss.resteasy.reactive.RestPath;

import org.jboss.resteasy.reactive.RestResponse;

import jakarta.validation.Valid;

import jakarta.ws.rs.DELETE;

import jakarta.ws.rs.GET;

import jakarta.ws.rs.POST;

import jakarta.ws.rs.PUT;

import jakarta.ws.rs.Path;

import jakarta.ws.rs.Produces;

import jakarta.ws.rs.core.Context;

import jakarta.ws.rs.core.UriBuilder;

import jakarta.ws.rs.core.UriInfo;

import java.util.List;

import static jakarta.ws.rs.core.MediaType.TEXT_PLAIN;

@Path("/api/villains")

public class VillainResource {

Logger logger;

VillainService service;

public VillainResource(Logger logger, VillainService service) {

this.service = service;

this.logger = logger;

}

@GET

@Path("/random")

public RestResponse<Villain> getRandomVillain() {

Villain villain = service.findRandomVillain();

logger.debug("Found random villain " + villain);

return RestResponse.ok(villain);

}

@GET

public RestResponse<List<Villain>> getAllVillains() {

List<Villain> villains = service.findAllVillains();

logger.debug("Total number of villains " + villains.size());

return RestResponse.ok(villains);

}

@GET

@Path("/{id}")

public RestResponse<Villain> getVillain(@RestPath Long id) {

Villain villain = service.findVillainById(id);

if (villain != null) {

logger.debug("Found villain " + villain);

return RestResponse.ok(villain);

} else {

logger.debug("No villain found with id " + id);

return RestResponse.noContent();

}

}

@POST

public RestResponse<Void> createVillain(@Valid Villain villain, @Context UriInfo uriInfo) {

villain = service.persistVillain(villain);

UriBuilder builder = uriInfo.getAbsolutePathBuilder().path(Long.toString(villain.id));

logger.debug("New villain created with URI " + builder.build().toString());

return RestResponse.created(builder.build());

}

@PUT

public RestResponse<Villain> updateVillain(@Valid Villain villain) {

villain = service.updateVillain(villain);

logger.debug("Villain updated with new valued " + villain);

return RestResponse.ok(villain);

}

@DELETE

@Path("/{id}")

public RestResponse<Void> deleteVillain(@RestPath Long id) {

service.deleteVillain(id);

logger.debug("Villain deleted with " + id);

return RestResponse.noContent();

}

@GET

@Path("/hello")

@Produces(TEXT_PLAIN)

public String hello() {

return "Hello Villain Resource";

}

}Note that we added @Path("/hello") to the hello method to not conflict with the getAllVillains() method.

Dependency Injection

Dependency injection in Quarkus is based on ArC which is a CDI-based dependency injection solution tailored for Quarkus' architecture.[10] You can learn more about it in the Contexts and Dependency Injection guide.[11]

ArC handles injection at build time.

You can use field injection and inject the VillainService and the logger using:

@Inject Logger logger;

@Inject VillainService service;But in your previous class, we used constructor injection.

Both the VillainService and the Logger are injected as constructor parameter:

public VillainResource(Logger logger, VillainService service) {

this.service = service;

this.logger = logger;

}Adding Data

To load some SQL statements when Hibernate ORM starts, add the following import.sql in the root of the resources directory.

It contains SQL statements terminated by a semicolon.

This is useful to have a data set ready for the tests or demos.

ALTER SEQUENCE villain_seq RESTART WITH 50;

INSERT INTO villain(id, name, otherName, picture, powers, level)

VALUES (nextval('villain_seq'), 'Buuccolo', 'Majin Buu',

'https://www.superherodb.com/pictures2/portraits/10/050/15355.jpg',

'Accelerated Healing, Adaptation, Agility, Flight, Immortality, Intelligence, Invulnerability, Reflexes, Self-Sustenance, Size Changing, Spatial Awareness, Stamina, Stealth, Super Breath, Super Speed, Super Strength, Teleportation',

22);

INSERT INTO villain(id, name, otherName, picture, powers, level)

VALUES (nextval('villain_seq'), 'Darth Vader', 'Anakin Skywalker',

'https://www.superherodb.com/pictures2/portraits/10/050/10444.jpg',

'Accelerated Healing, Agility, Astral Projection, Cloaking, Danger Sense, Durability, Electrokinesis, Energy Blasts, Enhanced Hearing, Enhanced Senses, Force Fields, Hypnokinesis, Illusions, Intelligence, Jump, Light Control, Marksmanship, Precognition, Psionic Powers, Reflexes, Stealth, Super Speed, Telekinesis, Telepathy, The Force, Weapons Master',

13);

INSERT INTO villain(id, name, otherName, picture, powers, level)

VALUES (nextval('villain_seq'), 'The Rival (CW)', 'Edward Clariss',

'https://www.superherodb.com/pictures2/portraits/10/050/13846.jpg',

'Accelerated Healing, Agility, Bullet Time, Durability, Electrokinesis, Endurance, Enhanced Senses, Intangibility, Marksmanship, Phasing, Reflexes, Speed Force, Stamina, Super Speed, Super Strength',

10);Ok, but that’s just a few entries.

Download the SQL file import.sql and copy it under src/main/resources.

Now, you have more than 500 villains that will be loaded in the database.

If you didn’t yet, start the application in dev mode by executing the following command under the rest-villains directory:

./mvnw quarkus:devThen, open your browser to http://localhost:8080/api/villains. You should see lots of heroes…

CRUD Tests in VillainResourceTest

To test the VillainResource endpoint, we just need to extend the VillainResourceTest we already have.

No need to configure anything, Quarkus will start a test database for you.

In io.quarkus.workshop.superheroes.villain.VillainResourceTest, you will add the following test methods to the VillainResourceTest class:

-

shouldNotGetUnknownVillain: giving a random Villain identifier, theVillainResourceendpoint should return a 204 (No content) -

shouldGetRandomVillain: checks that theVillainResourceendpoint returns a random villain -

shouldNotAddInvalidItem: passing an invalidVillainshould fail when creating it (thanks to the@Validannotation) -

shouldGetInitialItems: checks that theVillainResourceendpoint returns the list of heroes -

shouldAddAnItem: checks that theVillainResourceendpoint creates a validVillain -

shouldUpdateAnItem: checks that theVillainResourceendpoint updates a newly createdVillain -

shouldRemoveAnItem: checks that theVillainResourceendpoint deletes a villain from the database

The code is as follows:

package io.quarkus.workshop.superheroes.villain;

import io.quarkus.test.junit.QuarkusTest;

import io.restassured.common.mapper.TypeRef;

import org.hamcrest.core.Is;

import org.junit.jupiter.api.MethodOrderer;

import org.junit.jupiter.api.Order;

import org.junit.jupiter.api.Test;

import org.junit.jupiter.api.TestMethodOrder;

import java.util.List;

import java.util.Random;

import static io.restassured.RestAssured.get;

import static io.restassured.RestAssured.given;

import static jakarta.ws.rs.core.HttpHeaders.ACCEPT;

import static jakarta.ws.rs.core.HttpHeaders.CONTENT_TYPE;

import static jakarta.ws.rs.core.MediaType.APPLICATION_JSON;

import static jakarta.ws.rs.core.MediaType.TEXT_PLAIN;

import static jakarta.ws.rs.core.Response.Status.BAD_REQUEST;

import static jakarta.ws.rs.core.Response.Status.CREATED;

import static jakarta.ws.rs.core.Response.Status.NO_CONTENT;

import static jakarta.ws.rs.core.Response.Status.OK;

import static org.hamcrest.CoreMatchers.is;

import static org.junit.jupiter.api.Assertions.assertEquals;

import static org.junit.jupiter.api.Assertions.assertNotNull;

import static org.junit.jupiter.api.Assertions.assertTrue;

@QuarkusTest

@TestMethodOrder(MethodOrderer.OrderAnnotation.class)

public class VillainResourceTest {

private static final String JSON = "application/json;charset=UTF-8";

private static final String DEFAULT_NAME = "Super Chocolatine";

private static final String UPDATED_NAME = "Super Chocolatine (updated)";

private static final String DEFAULT_OTHER_NAME = "Super Chocolatine chocolate in";

private static final String UPDATED_OTHER_NAME = "Super Chocolatine chocolate in (updated)";

private static final String DEFAULT_PICTURE = "super_chocolatine.png";

private static final String UPDATED_PICTURE = "super_chocolatine_updated.png";

private static final String DEFAULT_POWERS = "does not eat pain au chocolat";

private static final String UPDATED_POWERS = "does not eat pain au chocolat (updated)";

private static final int DEFAULT_LEVEL = 42;

private static final int UPDATED_LEVEL = 43;

private static final int NB_VILLAINS = 570;

private static String villainId;

@Test

public void testHelloEndpoint() {

given().header(ACCEPT, TEXT_PLAIN).when().get("/api/villains/hello").then().statusCode(200).body(is("Hello Villain Resource"));

}

@Test

void shouldNotGetUnknownVillain() {

Long randomId = new Random().nextLong();

given().pathParam("id", randomId).when().get("/api/villains/{id}").then().statusCode(NO_CONTENT.getStatusCode());

}

@Test

void shouldGetRandomVillain() {

given().when().get("/api/villains/random").then().statusCode(OK.getStatusCode()).contentType(APPLICATION_JSON);

}

@Test

void shouldNotAddInvalidItem() {

Villain villain = new Villain();

villain.name = null;

villain.otherName = DEFAULT_OTHER_NAME;

villain.picture = DEFAULT_PICTURE;

villain.powers = DEFAULT_POWERS;

villain.level = 0;

given()

.body(villain)

.header(CONTENT_TYPE, JSON)

.header(ACCEPT, JSON)

.when()

.post("/api/villains")

.then()

.statusCode(BAD_REQUEST.getStatusCode());

}

@Test

@Order(1)

void shouldGetInitialItems() {

List<Villain> villains = get("/api/villains")

.then()

.statusCode(OK.getStatusCode())

.contentType(APPLICATION_JSON)

.extract()

.body()

.as(getVillainTypeRef());

assertEquals(NB_VILLAINS, villains.size());

}

@Test

@Order(2)

void shouldAddAnItem() {

Villain villain = new Villain();

villain.name = DEFAULT_NAME;

villain.otherName = DEFAULT_OTHER_NAME;

villain.picture = DEFAULT_PICTURE;

villain.powers = DEFAULT_POWERS;

villain.level = DEFAULT_LEVEL;

String location = given()

.body(villain)

.header(CONTENT_TYPE, JSON)

.header(ACCEPT, JSON)

.when()

.post("/api/villains")

.then()

.statusCode(CREATED.getStatusCode())

.extract()

.header("Location");

assertTrue(location.contains("/api/villains"));

// Stores the id

String[] segments = location.split("/");

villainId = segments[segments.length - 1];

assertNotNull(villainId);

given()

.pathParam("id", villainId)

.when()

.get("/api/villains/{id}")

.then()

.statusCode(OK.getStatusCode())

.contentType(APPLICATION_JSON)

.body("name", Is.is(DEFAULT_NAME))

.body("otherName", Is.is(DEFAULT_OTHER_NAME))

.body("level", Is.is(DEFAULT_LEVEL))

.body("picture", Is.is(DEFAULT_PICTURE))

.body("powers", Is.is(DEFAULT_POWERS));

List<Villain> villains = get("/api/villains")

.then()

.statusCode(OK.getStatusCode())

.contentType(APPLICATION_JSON)

.extract()

.body()

.as(getVillainTypeRef());

assertEquals(NB_VILLAINS + 1, villains.size());

}

@Test

@Order(3)

void testUpdatingAnItem() {

Villain villain = new Villain();

villain.id = Long.valueOf(villainId);

villain.name = UPDATED_NAME;

villain.otherName = UPDATED_OTHER_NAME;

villain.picture = UPDATED_PICTURE;

villain.powers = UPDATED_POWERS;

villain.level = UPDATED_LEVEL;

given()

.body(villain)

.header(CONTENT_TYPE, JSON)

.header(ACCEPT, JSON)

.when()

.put("/api/villains")

.then()

.statusCode(OK.getStatusCode())

.contentType(APPLICATION_JSON)

.body("name", Is.is(UPDATED_NAME))

.body("otherName", Is.is(UPDATED_OTHER_NAME))

.body("level", Is.is(UPDATED_LEVEL))

.body("picture", Is.is(UPDATED_PICTURE))

.body("powers", Is.is(UPDATED_POWERS));

List<Villain> villains = get("/api/villains")

.then()

.statusCode(OK.getStatusCode())

.contentType(APPLICATION_JSON)

.extract()

.body()

.as(getVillainTypeRef());

assertEquals(NB_VILLAINS + 1, villains.size());

}

@Test

@Order(4)

void shouldRemoveAnItem() {

given().pathParam("id", villainId).when().delete("/api/villains/{id}").then().statusCode(NO_CONTENT.getStatusCode());

List<Villain> villains = get("/api/villains")

.then()

.statusCode(OK.getStatusCode())

.contentType(APPLICATION_JSON)

.extract()

.body()

.as(getVillainTypeRef());

assertEquals(NB_VILLAINS, villains.size());

}

private TypeRef<List<Villain>> getVillainTypeRef() {

return new TypeRef<List<Villain>>() {

// Kept empty on purpose

};