Exploring why native executables produced with Mandrel 23.1 are bigger than those produced with Mandrel 23.0

This article is a follow-up to Exploring why native executables produced with Mandrel 23.0 are bigger than with Mandrel 22.3.

Starting with Quarkus 3.5, the default Mandrel version was updated from 23.0 to 23.1.

This update brought a number of bugfixes as well as new features like:

- Preview of Foreign Function & Memory API downcalls (part of “Project Panama”, JEP 442) on AMD64. Must be enabled with --enable-preview.

- New option -H:±IndirectBranchTargetMarker to mark indirect branch targets on AMD64 with an endbranch instruction. This is a prerequisite for future Intel CET support.

- Throw MissingReflectionRegistrationError when attempting to create a proxy class without having it registered at build-time, instead of a VMError.

- Support for -XX:+HeapDumpOnOutOfMemoryError.

- New --parallelism option to control how many threads are used by the build process.

- Simulation of class initializer: Class initializers of classes that are not marked for initialization at image build time are simulated at image build time to avoid executing them at image run time.

- and more

However, it also brought an unwanted side effect. The native executables produced with Mandrel 23.1 are bigger than the ones produced with Mandrel 23.0. To better understand why that happens we perform a thorough analysis to attribute the size increase to specific changes in Mandrel’s code base.

TL;DR

According to our analysis the binary size increase is attributed to two distinct changes, both of which are necessary for getting more accurate profiles when using the async-sampler.

- Add support for profiling of topmost frame

- ProfilingSampler does not need local variable values (specifically the commit “Always store bci in frame info”)

Better understanding what is different between the generated native executables

To perform the analysis we use the Quarkus startstop test (specifically commit a8bae846881607e376c7c8a96116b6b50ee50b70) which generates, starts, tests, and stops small Quarkus applications and measures various time-related metrics (e.g. time-to-first-OK-request) and memory usage.

We get the test with:

git clone https://github.com/quarkus-qe/quarkus-startstop

cd quarkus-startstop

git checkout a8bae846881607e376c7c8a96116b6b50ee50b70

and build it with:

mvn clean package -Pnative -Dquarkus.version=3.5.0 \

-Dquarkus.native.builder-image=quay.io/quarkus/ubi-quarkus-mandrel-builder-image:jdk-17

changing the builder image tag to jdk-20 and jdk-21 for building with Mandrel 23.0 (based on JDK 20) and Mandrel 23.1 (based on JDK 21) respectively.

We also use jdk-20, although it is deprecated, to see the effect of the base JDK when using the same code base (jdk-17 and jdk-20 are based on the same Mandrel source code but are built using a different base JDK version).

Looking at the build output (generated by Quarkus in target/my-app-native-image-sources/my-app-build-output-stats.json) the main differences between the three builds are in the following metrics:

| Mandrel version | 23.0.2.1 (jdk-17) | 23.0.1.2 (jdk-20) | 23.1.1.0 (jdk-21) | Increase jdk-17 to jdk-20 (%) | Increase jdk-20 to jdk-21 (%) |
|---|---|---|---|---|---|
| Image Heap Size (bytes) | 29790208 | 30982144 | 33546240 | 4 | 8.3 |
| Objects count | 351565 | 353273 | 356059 | 0.5 | 0.8 |
| Resources Size (bytes) | 169205 | 174761 | 175392 | 3.3 | 0.36 |
| Resources Count | 28 | 28 | 79 | 0 | 182 |
| Total Image Size (bytes) | 60006728 | 61734352 | 64224536 | 2.88 | 4 |

This indicates that the base JDK plays a significant role in the image size increase. It leaves open the question of whether the further increase in the generated binary size between jdk-20 and jdk-21 is due to the JDK difference or due to changes in Mandrel itself.

It is also interesting that, despite the resource count increase between Mandrel 23.0 (jdk-20) and Mandrel 23.1 (jdk-21), the resource size is barely affected. As a result, we focus our analysis on the Image Heap Size, which increases disproportionately to the objects count between the different Mandrel versions, indicating that either some objects became bigger or the few new objects being added to the heap are quite big.
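To make the "heap grows faster than the object count" observation concrete, the following sketch recomputes the percentage increases from the table and derives the average number of image heap bytes per object for each build. The numbers are copied from the table; the helper name `pct_increase` is ours and not part of any tool.

```python
# Values copied from the build-output table (bytes and object counts),
# ordered as (jdk-17, jdk-20, jdk-21).
metrics = {
    "Image Heap Size": (29790208, 30982144, 33546240),
    "Objects count": (351565, 353273, 356059),
    "Total Image Size": (60006728, 61734352, 64224536),
}


def pct_increase(old, new):
    """Relative growth from old to new, in percent."""
    return (new - old) / old * 100


for name, (jdk17, jdk20, jdk21) in metrics.items():
    print("{}: jdk-17 -> jdk-20 {:.2f}%, jdk-20 -> jdk-21 {:.2f}%".format(
        name, pct_increase(jdk17, jdk20), pct_increase(jdk20, jdk21)))

# Average image heap bytes per object: if this grows, objects got bigger on average.
for idx, label in enumerate(("jdk-17", "jdk-20", "jdk-21")):
    heap = metrics["Image Heap Size"][idx]
    objects = metrics["Objects count"][idx]
    print("{}: {:.1f} bytes of image heap per object".format(label, heap / objects))
```

A rising bytes-per-object figure is consistent with either explanation given above (bigger objects, or a few large new ones), so it narrows the search to the heap contents without yet identifying the cause.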

Dashboards

GraalVM and Mandrel provide the -H:+DashboardAll and -H:+DashboardJson flags that can be used to generate dashboards that contain more information about the generated native executable.

The resulting dashboard contains a number of metrics and looks like this:

{

"points-to": {

"type-flows": [

...

]

},

"code-breakdown": {

"code-size": [

{

"name": "io.smallrye.mutiny.CompositeException.getFirstOrFail(Throwable[]) Throwable",

"size": 575

},

...

]

},

"heap-breakdown": {

"heap-size": [

{

"name": "Lio/vertx/core/impl/VerticleManager$$Lambda$bf09d38f5d19578a0d041ffd0a524c1cbe1843df;",

"size": 24,

"count": 1

},

...

]

}

}

Using the aforementioned flags we generate dashboards using both Mandrel 23.1 and 23.0 and compare the results.

To generate the dashboards using Mandrel 23.0 we use the following command:

mvn package -Pnative \

-Dquarkus.version=3.5.0 \

-Dquarkus.native.builder-image=quay.io/quarkus/ubi-quarkus-mandrel-builder-image:jdk-20 \

-Dquarkus.native.additional-build-args=-H:+DashboardAll,-H:+DashboardJson,-H:DashboardDump=path/to/23.0.dashboard.json

Similarly to generate the dashboards using Mandrel 23.1 we use the following command:

mvn package -Pnative \

-Dquarkus.version=3.5.0 \

-Dquarkus.native.builder-image=quay.io/quarkus/ubi-quarkus-mandrel-builder-image:jdk-21 \

-Dquarkus.native.additional-build-args=-H:+DashboardAll,-H:+DashboardJson,-H:DashboardDump=path/to/23.1.dashboard.json

Note: Make sure to change path/to/ to the path where you would like the dashboard JSON files to be stored. Each file is about 370MB.
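Before loading such large files into a notebook, it can help to check that a dump has the expected sections. The sketch below counts the entries under each section and metric; it assumes the two-level layout shown in the dashboard excerpt earlier, and `summarize_dashboard` is a hypothetical helper name. A tiny in-memory sample stands in for a real dump.

```python
import json


def summarize_dashboard(dashboard):
    """Return {(section, metric): number_of_entries} for a loaded dashboard dict."""
    summary = {}
    for section, metrics in dashboard.items():
        for metric, entries in metrics.items():
            summary[(section, metric)] = len(entries)
    return summary


# Tiny sample mirroring the structure of the dashboard excerpt shown earlier.
sample = json.loads("""
{
  "points-to": {"type-flows": []},
  "code-breakdown": {"code-size": [
    {"name": "io.smallrye.mutiny.CompositeException.getFirstOrFail(Throwable[]) Throwable", "size": 575}
  ]},
  "heap-breakdown": {"heap-size": [
    {"name": "Lio/vertx/core/impl/VerticleManager$$Lambda$bf09d38f5d19578a0d041ffd0a524c1cbe1843df;", "size": 24, "count": 1}
  ]}
}
""")

for (section, metric), n in summarize_dashboard(sample).items():
    print("{}/{}: {} entries".format(section, metric, n))
```

For a real dump, replace `sample` with the result of `json.load` on the file written to the `-H:DashboardDump` path.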

Analyzing and visualizing the data

To process the data from the dashboards we used a Jupyter notebook, like we do in this article. To grab the notebook follow this link.

Loading the data from the json files

import json

import pandas as pd

# load data from JSON file

with open('23.0.dashboard.json', 'r') as f:

    data23_0 = json.load(f)

with open('23.1.dashboard.json', 'r') as f:

    data23_1 = json.load(f)

# create dataframes from json data

df23_0 = pd.DataFrame(data23_0)

df23_1 = pd.DataFrame(data23_1)

Is the heap image bigger because the objects in the heap are bigger than before or because we store more objects in it?

# Get heap-size lists from dataframes

heap_size_23_0 = df23_0['heap-breakdown']['heap-size']

heap_size_23_1 = df23_1['heap-breakdown']['heap-size']

# create dataframes from heap_size lists

heap_df23_0 = pd.DataFrame(heap_size_23_0).rename(columns={'size': 'size-23.0', 'count': 'count-23.0'})

heap_df23_1 = pd.DataFrame(heap_size_23_1).rename(columns={'size': 'size-23.1', 'count': 'count-23.1'})

What’s the average object size?

print("Average object size for Mandrel 23.0: {:.2f}".format(heap_df23_0['size-23.0'].mean()))

print("Average object size for Mandrel 23.1: {:.2f}".format(heap_df23_1['size-23.1'].mean()))

Average object size for Mandrel 23.0: 8137.46

Average object size for Mandrel 23.1: 8880.06
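Each heap-size entry in the dashboard excerpt carries a count field, and the byte-array analysis later divides size by count, which suggests that an entry aggregates all instances of a type. Under that assumption, the mean printed above averages per-type totals. A per-object average can be sketched by dividing the summed sizes by the summed counts; `bytes_per_object` is a hypothetical helper, and the second sample row is made up for illustration.

```python
def bytes_per_object(entries):
    """Total size divided by total instance count over heap-size entries."""
    total_size = sum(e["size"] for e in entries)
    total_count = sum(e["count"] for e in entries)
    return total_size / total_count


# Miniature heap breakdown: the [B row uses figures reported in this article,
# the second row is a made-up placeholder.
entries = [
    {"name": "[B", "size": 14149496, "count": 110914},
    {"name": "Lexample/Small;", "size": 24, "count": 1},
]

print("{:.2f} bytes per object".format(bytes_per_object(entries)))
```

With the real data, the same function can be applied to `data23_0['heap-breakdown']['heap-size']` and `data23_1['heap-breakdown']['heap-size']`.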

What’s the minimum and maximum object size in each case?

print("Minimum object size for Mandrel 23.0:", heap_df23_0['size-23.0'].min())

print("Minimum object size for Mandrel 23.1:", heap_df23_1['size-23.1'].min())

max_size_23_0 = heap_df23_0['size-23.0'].max()

max_size_23_1 = heap_df23_1['size-23.1'].max()

print("Maximum object size for Mandrel 23.0:", max_size_23_0)

print("Maximum object size for Mandrel 23.1:", max_size_23_1)

Minimum object size for Mandrel 23.0: 16

Minimum object size for Mandrel 23.1: 16

Maximum object size for Mandrel 23.0: 14149496

Maximum object size for Mandrel 23.1: 16933168

We observe that the maximum object size when compiling with Mandrel 23.1 is about 2.6MB bigger than the maximum object size when compiling with Mandrel 23.0.

max_size_diff = (max_size_23_1 - max_size_23_0) / (1024 * 1024)

print("Max size difference in MB: {:.2f}".format(max_size_diff))

Max size difference in MB: 2.65

Which objects are the biggest ones?

Next, we look at which object types are the biggest in each case and what their corresponding size is in the other Mandrel version.

max_size_23_0_rows = heap_df23_0.loc[heap_df23_0['size-23.0'] == max_size_23_0]

print("Objects with size equal to max_size_23_0 in heap_size_23_0:")

print(max_size_23_0_rows)

Objects with size equal to max_size_23_0 in heap_size_23_0:

name size-23.0 count-23.0

1340 [B 14149496 110914

max_size_23_1_rows = heap_df23_1.loc[heap_df23_1['size-23.1'] == max_size_23_1]

print("Objects with size equal to max_size_23_1 in heap_size_23_1:")

print(max_size_23_1_rows)

Objects with size equal to max_size_23_1 in heap_size_23_1:

name size-23.1 count-23.1

1351 [B 16933168 111454

Not surprisingly, we detect that the object type with the maximum size in both cases is [B, i.e. byte arrays.
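Looking only at the maximum shows one type at a time. A way to see every type side by side is to join the two breakdowns by name and sort by growth. The following plain-Python sketch does that; `size_deltas` is a hypothetical helper, the [B rows reuse the figures printed above, and the other rows are invented to show how types missing from one build are handled.

```python
def size_deltas(old_entries, new_entries):
    """Return [(name, old_size, new_size, delta)] sorted by largest growth first."""
    old = {e["name"]: e["size"] for e in old_entries}
    new = {e["name"]: e["size"] for e in new_entries}
    rows = []
    for name in old.keys() | new.keys():
        # A type absent from one build counts as size 0 there.
        o, n = old.get(name, 0), new.get(name, 0)
        rows.append((name, o, n, n - o))
    return sorted(rows, key=lambda r: r[3], reverse=True)


# [B rows come from the output above; the other rows are made up for illustration.
heap_23_0 = [{"name": "[B", "size": 14149496, "count": 110914},
             {"name": "Lexample/Gone;", "size": 48, "count": 2}]
heap_23_1 = [{"name": "[B", "size": 16933168, "count": 111454},
             {"name": "Lexample/New;", "size": 32, "count": 1}]

for name, o, n, d in size_deltas(heap_23_0, heap_23_1):
    print("{:<16} {:>10} {:>10} {:>+10}".format(name, o, n, d))
```

In the notebook, a similar result could be obtained with pandas by merging `heap_df23_0` and `heap_df23_1` on the name column with an outer join.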

More byte arrays of similar size or a few larger ones?

Next we look at the average size of the byte arrays in both versions to see if the increase can be attributed to more similarly sized arrays being added to the image or just a few larger ones.

max_size_23_0_row = max_size_23_0_rows.iloc[0]

max_size_23_1_row = max_size_23_1_rows.iloc[0]

print("Average size of byte arrays in Mandrel 23.0: {:.2f}".format(max_size_23_0_row['size-23.0']/max_size_23_0_row['count-23.0']))

print("Average size of byte arrays in Mandrel 23.1: {:.2f}".format(max_size_23_1_row['size-23.1']/max_size_23_1_row['count-23.1']))

Average size of byte arrays in Mandrel 23.0: 127.57

Average size of byte arrays in Mandrel 23.1: 151.93

We observe that the average byte array size when building with Mandrel 23.1 is bigger, which is an indication that some larger byte arrays are being added to the image heap.
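A rough way to quantify this is to divide the extra bytes by the extra arrays. Under the simplifying assumption that the arrays already present in 23.0 kept their size, each additional array would have to be about 5KB, roughly 40 times the average. That is implausible for ordinary small arrays, so the growth is more likely concentrated in a few large arrays, either new ones or existing ones that grew.

```python
# Figures for [B taken from the dashboard output above.
size_23_0, count_23_0 = 14149496, 110914
size_23_1, count_23_1 = 16933168, 111454

extra_bytes = size_23_1 - size_23_0      # growth in total byte-array size
extra_arrays = count_23_1 - count_23_0   # growth in number of byte arrays

print("Extra bytes:", extra_bytes)
print("Extra arrays:", extra_arrays)
print("Bytes per extra array: {:.1f}".format(extra_bytes / extra_arrays))
```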

Generating heap dumps and analyzing them in Java Mission Control (JMC)

Since the dashboards don’t provide more info we rebuild our test with -Dquarkus.native.additional-build-args=-R:+DumpHeapAndExit using both Mandrel versions.

This option instructs the generated native images to create a heap dump and exit.

mvn package -Pnative \

-Dquarkus.version=3.5.0 \

-Dquarkus.native.builder-image=quay.io/quarkus/ubi-quarkus-mandrel-builder-image:jdk-20 \

-Dquarkus.native.additional-build-args=-R:+DumpHeapAndExit

...

./target/quarkus-runner

Heap dump created at 'quarkus-runner.hprof'.

mv quarkus-runner.hprof quarkus-runner-23-0.hprof

We do the same with Mandrel 23.1 using the jdk-21 tag and open the dumps in Java Mission Control (JMC).

To install JMC one may use sdk install jmc.

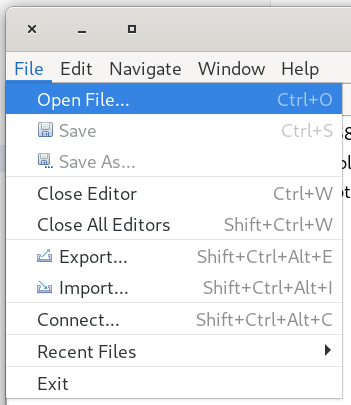

After starting JMC we navigate to File->Open and select the heap dumps we just generated.

Once the heap dumps are loaded we click on the byte[] class to filter the results and focus on the objects of this type.

![JMC focus on byte[] for 23.1](/assets/images/posts/mandrel-23-1-image-size-increase/jmc-focus-byte-23-1.png)

At this point on the right side of the window we can see the referrers sorted by the total size of the byte arrays they reference.

![JMC focus on byte[] for 23.1](/assets/images/posts/mandrel-23-1-image-size-increase/jmc-focus-byte-23-1-2.png)

We observe that, when using Mandrel 23.1, the largest shares of the byte arrays are referenced by com.oracle.svm.core.code.ImageCodeInfo.codeInfoEncodings (12%) and com.oracle.svm.core.code.ImageCodeInfo.frameInfoEncodings (11%), while when using Mandrel 23.0 the corresponding percentages are 12% and 6%.

![JMC focus on byte[] for 23.0](/assets/images/posts/mandrel-23-1-image-size-increase/jmc-focus-byte-23-0-2.png)

We also observe that when using Mandrel 23.1 the size of com.oracle.svm.core.code.ImageCodeInfo.frameInfoEncodings is ~2.5MB larger than the corresponding size when using Mandrel 23.0.

Attributing Binary Size Increase to Specific Code Changes

As a result, we focus our search on changes in Mandrel’s source code that could affect the frame info encodings using:

git log -- substratevm/src/com.oracle.svm.core/src/com/oracle/svm/core/code/FrameInfo*

This way we identified the following two pull requests:

- Add support for profiling of topmost frame which adds ~1MB of data to the image.

- ProfilingSampler does not need local variable values (specifically the commit “Always store bci in frame info”) which adds ~1.7MB of data to the image.

Both of these changes are necessary to improve the accuracy of the async-sampler.
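As a rough cross-check, the per-change estimates can be compared with the growth measured earlier. The sketch below only restates numbers from this article; the ~1MB and ~1.7MB figures are rounded estimates, and we assume MB means 1024 * 1024 bytes, as in the earlier max-size calculation, so only approximate agreement should be expected.

```python
MIB = 1024 * 1024

# Measured values from earlier in this article (bytes).
byte_array_growth = 16933168 - 14149496   # [B total size, 23.0 -> 23.1
image_heap_growth = 33546240 - 30982144   # Image Heap Size, jdk-20 -> jdk-21

# Approximate per-change estimates quoted above (MB); the keys are our labels.
attributed = {"topmost frame profiling": 1.0, "always store bci": 1.7}

print("Byte array growth: {:.2f} MB".format(byte_array_growth / MIB))
print("Image heap growth: {:.2f} MB".format(image_heap_growth / MIB))
print("Sum of attributed estimates: ~{:.1f} MB".format(sum(attributed.values())))
```

The three figures land in the same range, which is consistent with the two changes accounting for most of the image heap growth.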

Conclusion

Similarly to when Quarkus upgraded from 22.3 to 23.0, we observe an increase in the size of the generated native executables when going from 23.0 to 23.1.

Once more, the changes resulting in that increase in the binary size appear to be well justified.

As native-image becomes more mature and feature rich, some increase in the size of the generated binaries seems inevitable.

If you think that this kind of info should only be included when the user opts in, please provide your feedback in this discussion.